Automated user interface tests are a topic that even experienced developers approach with caution. Yet the technology behind such testing is nothing extraordinary, and Visual Studio Coded UI Tests is simply an extension of the unit testing system built into Visual Studio Team Test. In this article I want to discuss both UI testing in general and our own experience of using Visual Studio Coded UI Tests while working on the PVS-Studio static analyzer.

The basics

To begin, let's try to figure out why UI tests are not as popular among developers as, for example, the classic Unit tests.

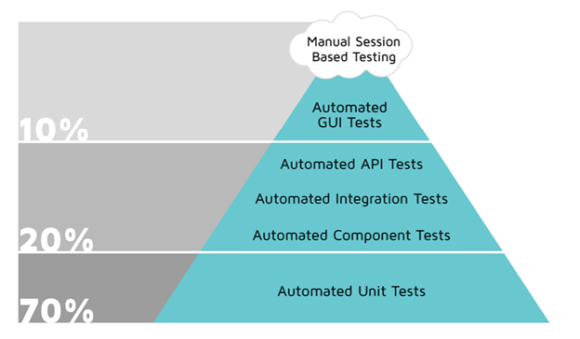

The web is full of so-called “test pyramids” that show the recommended distribution of tests across application layers. All the pyramids are similar and share one idea: the closer a layer is to the code, the more tests it should have, and vice versa. The pyramid above is one example, and it also gives recommended percentages for each kind of test.

Unit tests form the base of the pyramid. Such tests are indeed easier to write during development, and they run very quickly. Automated interface tests, by contrast, sit at the top of the pyramid. There should not be many of them, because both the effort needed to create them and their execution time are considerable. It is also unclear who should be responsible for writing UI tests. In essence, they emulate user actions, which is far removed from the application code, so developers are reluctant to take on this work. And creating high-quality automated interface tests without programmers (or with minimal involvement from them) requires paid tools. Together, these factors often mean that UI tests are not written at all and teams limit themselves to one-off manual testing of new functionality. Moreover, interface tests are extremely costly not only during development but throughout the rest of the application's life cycle: even a slight change in the user interface can break many tests and force you to modify them.

I should note that our current test system by and large follows these recommendations. The number of automated GUI tests (45) is roughly one-tenth of the total number of PVS-Studio tests. The number of unit tests is not that large either, but they are supplemented by a number of other test systems:

- Analyzer quality tests (C, C++, C#, Java), in which a large pool of test projects is checked on different operating systems (Windows, Linux, macOS) and the resulting warning logs are compared with reference ones;

- Tests of specific features (tracking the launch of compilers, etc.);

- External tests of command-line applications;

- Tests of the correctness of the build, installation and deployment;

- Documentation tests.

In its early days, the PVS-Studio analyzer was an application for finding errors that arise when porting C and C++ code to a 64-bit platform. It even had a different name at the time: "Viva64". The history of the product is described in the article "How the PVS-Studio project began 10 years ago". After integration into Visual Studio 2005, the analyzer acquired a graphical user interface, which was in fact the interface of the Visual Studio IDE itself: after the plugin was installed, an additional menu appeared that gave access to the analyzer's functionality. The menu consisted of two or three items, so there was nothing to test there. Besides, Visual Studio at that time had no built-in tools for GUI testing.

Visual Studio Coded UI Tests and Alternatives

Everything changed with the release of Visual Studio 2010, which introduced an integrated system for creating UI tests: Visual Studio Coded UI Tests (CUIT). Built on the unit testing system of Visual Studio Team Test, CUIT originally used MSAA (Microsoft Active Accessibility) technology to access the visual elements of an interface. The technology was later improved, and today it is UIA (UI Automation), a more advanced model for automating user interfaces in tests. It gives the test system access to the public properties of COM and .NET UI elements (the object's name, the internal name of its class, its current state, its place in the interface hierarchy, and so on), and it lets the system emulate actions on these elements through standard input devices (mouse, keyboard). Out of the box, the system supports recording user actions (similar to Visual Studio macros) and automates building the “interface map” (the properties of controls and the parameters for finding and accessing them), together with automatic generation of the control code. All the collected information can later be modified and kept up to date with little effort, and test sequences can be customized freely with only minimal programming skills.

Moreover, as I said earlier, you can now create complex, smart UI tests without any programming skills at all, provided you use specialized paid tools. And if you are unwilling or unable to use proprietary test environments, there are plenty of free products and frameworks. The Visual Studio Coded UI Tests system is an intermediate solution: it not only automates the creation of UI tests as much as possible, but also makes it easy to write arbitrary test sequences in C# or VB.

All this can significantly reduce the cost of creating GUI tests and keeping them up to date. The framework is easy to understand and, in general terms, can be represented as a layered diagram.

Among the key elements are the so-called “interface adapters”, which sit at the lowest level of abstraction. This layer interacts directly with the elements of the end user interface, and its capabilities can be extended with additional adapters. Above it is a layer that hides the GUI access technologies from the rest of the code. Higher still are the programmatic access interfaces and the test application code itself, which includes everything needed to automate testing. The technology is extensible: each level can be supplemented with the necessary elements within the limits of what the framework allows.

The main features of CUIT from Microsoft include:

- Functional testing of user interfaces. Supports classic Windows-based applications, web applications and services, WPF

- Code generation (including automatic) in VB or C#

- Ability to integrate into the build process

- Local or remote launches, reporting

- Recording and playback of test sequences

- Good extensibility

Despite a number of difficulties associated with CUIT, the use of this testing technology is preferable for several reasons:

- Effective interaction between developers and testers in a single tool and programming language

- Additional features for working with the “interface map”, allowing identification of controls “on the fly”, synchronization of elements and recording of test sequences

- Fine-tuning of the playback mechanism. Along with basic settings, such as the delay between operations or the search timeout for an element, you can use specialized mechanisms in code. For example, you can block the current thread until a control is activated (becomes visible) using the WaitForControlExist or WaitForReady methods with the WaitForReadyLevel enumeration, etc.

- Ability to program tests of arbitrary complexity

I will not go deeper into the theory behind Visual Studio Coded UI Tests; it is covered in detail in the relevant documentation. There you can also find detailed step-by-step instructions for creating a simple UI test with this system. And yes, the system is not free: you will need Visual Studio Enterprise to work with it.

The technology described is not the only one on the market; there are many other solutions. Alternative UI testing systems can be divided into paid and free ones, although the choice of a particular system does not always come down to price. For example, the ability to create tests without programming may be an important factor, though such tests may turn out to be insufficiently flexible. Support for the required test environment (operating systems and applications) also matters. Finally, the choice may be influenced by the specific features of the application and its interface. Here are several popular systems and technologies for GUI testing.

Paid:

TestComplete (SmartBear),

Unified Functional Testing (Micro Focus),

Squish (froglogic),

Automated Testing Tools (Ranorex),

Eggplant Functional (eggplant), etc.

Free:

AutoIt (Windows),

Selenium (web),

Katalon Studio (web, mobile),

Sahi (web),

Robot Framework (web),

LDTP (Linux Desktop Testing Project),

Open source frameworks: TestStack.White + UIAutomationVerify, .NET Windows automation library, etc.

Of course, this list is not complete. Still, it is clear that free systems usually target a specific operating system or testing technology. As a general rule, among paid systems you will find something that fits your needs much faster, tests will be easier to develop and maintain, and the list of supported test environments will be as broad as possible.

In our case there was no problem of choice: once Visual Studio 2010 shipped with Coded UI Tests, it became easy to add a set of functional tests to our test environment to check the user interface of the PVS-Studio plugin for Visual Studio.

PVS-Studio UI Tests

So, we have been using GUI tests in our company for more than 6 years. The initial set of UI tests for Visual Studio 2010 was based on MSAA (Microsoft Active Accessibility), the only technology available at that time. With the release of Visual Studio 2012, the technology was developed significantly further and is now called UIA (UI Automation). We decided to use UIA from then on and to keep the MSAA-based tests for testing the plugin for Visual Studio 2010 (we support and test plugins for all versions of Visual Studio, starting with Visual Studio 2010).

As a result, we have formed two “branches” of UI tests. At the same time, in the test project both of these branches used a common interface map and shared code. In the code, it looked like this (method for resetting Visual Studio settings to standard before running the test):

    public void ResetVSSettings(TestingMode mode)
    {
      ....
      #region MSAA Mode
      if (mode == TestingMode.MSAA)
      {
        ....
        return;
      }
      #endregion
      ....
    }

When making changes to the plug-in interface, it was necessary to make changes to both branches of the UI tests, and adding new functionality made it necessary to duplicate the interface element in the map: that is, to create two different elements for each of the MSAA and UIA technologies. All this required a lot of effort not only to create or modify tests, but also to maintain the test environment in a stable state. I will dwell on this aspect in more detail.

In my experience, the stability of the test environment is a significant problem in GUI testing. This is mainly because such testing depends heavily on a variety of external factors. After all, it emulates user actions: key presses, mouse movements, mouse clicks, and so on. A lot can "go wrong" here. For example, someone may touch the keyboard connected to the test server while a test is running. Or the monitor resolution may unexpectedly turn out to be too low to display some control, and the test environment will not find it.

A carelessly configured interface map element that later cannot be found is close to being the leading cause of problems. For example, when the Visual Studio Coded UI Tests interface map wizard creates a new control, it gives it search criteria of the “Equals” type by default. That is, the control's property values must match the specified values exactly. This usually works, but sometimes test stability can be improved significantly by using the less strict “Contains” criterion instead of “Equals”. This is just one example of the "fine-tuning" you may run into when working on UI tests.
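The difference between the two kinds of search criteria can be illustrated in isolation. The sketch below does not use the Coded UI Test API: `MatchOperator`, `SearchCriterion` and the window title are hypothetical stand-ins invented for this example. In CUIT itself, the corresponding choice is made through the `PropertyExpressionOperator` enumeration (`EqualTo` or `Contains`) attached to a control's search properties.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for illustration only; not the Coded UI Test API.
enum MatchOperator { EqualTo, Contains }

sealed class SearchCriterion
{
    public string Property { get; }
    public string Value { get; }
    public MatchOperator Operator { get; }

    public SearchCriterion(string property, string value, MatchOperator op)
    {
        Property = property;
        Value = value;
        Operator = op;
    }

    // True when the control's actual property value satisfies this criterion.
    public bool Matches(IReadOnlyDictionary<string, string> control)
    {
        if (!control.TryGetValue(Property, out var actual))
            return false;

        return Operator == MatchOperator.EqualTo
            ? string.Equals(actual, Value, StringComparison.Ordinal)
            : actual.Contains(Value);
    }
}

static class SearchDemo
{
    public static void Main()
    {
        // An assumed window title that changes with the loaded solution.
        var window = new Dictionary<string, string>
        {
            ["Name"] = "TestSolution - Microsoft Visual Studio"
        };

        var strict = new SearchCriterion("Name", "Microsoft Visual Studio", MatchOperator.EqualTo);
        var relaxed = new SearchCriterion("Name", "Microsoft Visual Studio", MatchOperator.Contains);

        Console.WriteLine(strict.Matches(window));   // False: the title has a prefix
        Console.WriteLine(relaxed.Matches(window));  // True: a substring match is enough
    }
}
```

The relaxed criterion survives changes in the variable part of the property value, at the cost of possibly matching more than one control, so it is worth applying only where the exact match is known to be fragile.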

Finally, some of your tests may consist of performing an action and then waiting for a result, for example the appearance of a window. In that case, on top of the problem of finding the element, you have to configure the playback delay until the window appears and then synchronize further work. Some of these problems can be solved with standard framework methods (WaitForControlExist, etc.), while others require inventing custom algorithms.

We ran into such a task while working on one of the tests for our plugin. In this test, an empty Visual Studio environment is opened first, then a test solution is loaded into it and fully checked with PVS-Studio (menu “PVS-Studio” -> “Check” -> “Solution”). The problem was to determine the moment when the check finished. Depending on a number of conditions, the check does not always take the same time, so simple timeouts do not work here. The regular mechanisms for suspending the test thread and waiting for a control to appear (or disappear) cannot be used either, because there is nothing to attach them to. A window with the progress status does appear during the check, but this window can be hidden while the check continues. That is, we cannot rely on this window (besides, it has a “Do not close after analysis is completed” setting). I also wanted the algorithm to be general enough to reuse in various tests that check projects and wait for the check to finish. A solution was found: from the moment the check starts until it finishes, the same menu item “PVS-Studio” -> “Check” -> “Solution” is inactive. All we had to do was check the “Enabled” property of this menu item at regular intervals (through the interface map object) and, once the item became active again, consider the solution check completed.
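The polling approach described above can be sketched as a small general-purpose helper. This is a minimal illustration under stated assumptions, not the code from our test project: the `Polling.WaitUntil` helper, the intervals and the simulated "menu item" are all invented for this example. In a real Coded UI test, the condition would read the `Enabled` property of the menu item object from the interface map.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

static class Polling
{
    // Re-checks `condition` every `interval` until it returns true or `timeout` elapses.
    // Returns true if the condition was met, false if the wait timed out.
    public static bool WaitUntil(Func<bool> condition, TimeSpan interval, TimeSpan timeout)
    {
        var stopwatch = Stopwatch.StartNew();
        while (true)
        {
            if (condition())
                return true;
            if (stopwatch.Elapsed >= timeout)
                return false;
            Thread.Sleep(interval);
        }
    }
}

static class PollingDemo
{
    public static void Main()
    {
        // Stand-in for the analysis: the simulated menu item becomes enabled after ~300 ms.
        var analysisStarted = Stopwatch.StartNew();
        Func<bool> menuItemEnabled = () => analysisStarted.ElapsedMilliseconds >= 300;

        // In a real test, the lambda would query the Enabled property of the
        // menu item from the interface map (object names there are project-specific).
        bool finished = Polling.WaitUntil(
            menuItemEnabled,
            TimeSpan.FromMilliseconds(50),
            TimeSpan.FromSeconds(5));

        Console.WriteLine(finished ? "Analysis finished" : "Timed out");
    }
}
```

An overall timeout is included so that a hung check fails the test instead of blocking the whole run. Depending on how quickly the menu item is disabled after the check starts, a real test may also need to wait for the item to become inactive first, before waiting for it to become active again.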

Thus, in UI testing it is not enough just to create tests. Each case requires careful, meticulous tuning. You need to understand and synchronize the entire sequence of actions being performed. For example, a context menu item will not be found until the menu itself is displayed on the screen. The test environment must also be prepared carefully. Only then can you count on stable test runs and meaningful results.

Let me remind you that the system of UI tests in our company has been evolving since 2010. During this time, several dozen test sequences were created and a lot of auxiliary code was written. Over time, tests of the Standalone application were added to the plugin tests. By then, the old test branch for the Visual Studio 2010 plugin had lost its relevance and was abandoned, but this “dead” code could not simply be cut out of the project. First, as I showed earlier, it was rather deeply integrated into the test methods. Second, more than half of the elements of the existing interface map belonged to the old MSAA technology but were reused (rather than duplicated) in many new tests alongside UIA elements, which is possible thanks to the continuity between the technologies. On top of that, a mass of automatically generated code and test method code was tied to the “old” elements.

By the fall of 2017, the need to improve the system of UI tests had become clear. On the whole the tests worked fine, but from time to time some test would "fail" for unknown reasons. More precisely, the cause was usually a failure to find a control. Each time, you had to walk through the interface map tree to the specific element and check its search criteria and other settings. Sometimes it helped to reset these settings programmatically before running the test. Given how large (and in many respects redundant) the interface map had become by then, as well as the presence of “dead” code, this process took considerable effort.

For some time the task "waited for its hero", until it finally landed on me.

I will not bore you with the details. I will only say that the work was simple but required considerable perseverance and attention. All in all, it took me about two weeks. I spent half of that time refactoring the code and the interface map. The rest went into stabilizing test execution, which mostly came down to finer tuning of the search criteria for visual elements (editing the interface map) and some code optimization.

As a result, the amount of test method code was reduced by about 30%, and the number of controls in the interface map tree was halved. Most importantly, the UI tests became more stable and now require less attention. Failures are now more often caused by changes in the analyzer's functionality or by detected inconsistencies (errors). And that, in fact, is what a system of UI tests is for.

Thus, at present, the system of automatic tests of the PVS-Studio interface has the following basic characteristics:

- Visual Studio Coded UI Test

- 45 scenarios

- 4,095 lines of test method code

- 19,889 lines of auto-generated code (excluding the size of the UI Map settings storage xml file)

- 1 hour 34 minutes of execution time (average over the last 30 runs)

- Runs on a dedicated server (using the MSTest.exe utility)

- Monitoring of runs and analysis of reports (Jenkins)

Conclusion

In conclusion, I want to give a list of success criteria for automated GUI tests, based on an analysis of our experience with this technology (some of the criteria also apply to other testing technologies, for example unit tests).

Appropriate toolkit. Choose the environment for creating and executing CUIT according to the features of your application and of the test environment. Paid solutions do not always make sense, but they usually help solve the problem very effectively.

High-quality infrastructure setup. Do not cut corners when developing the interface map. Make it easier for the framework to find elements by carefully describing all their properties and specifying sensible search criteria. Keep future modification in mind.

Minimization of manual labor. Wherever possible, use automatic code generation and sequence recording tools. This significantly speeds up development and reduces the likelihood of errors (it is not always easy to find out why a UI test failed, especially if the mistake is in the code that works with the framework).

Simple and independent smart tests. The simpler your tests are, the better. Try to write a separate test for each specific control or simulated situation. Also make sure the tests are independent of each other: the failure of one test should not affect the whole run.

Clear names. Use prefixes in the names of tests of the same type. Many environments allow you to run tests filtered by name. Also use test grouping whenever possible.

Isolated runtime. Make sure that tests run on a dedicated server with minimal external influences. Disconnect all external user input devices, provide the screen resolution your application needs, or use a hardware “stub” that simulates a connected high-resolution monitor. Make sure that no other applications are running during the test run, for example ones that interact with the desktop and display messages. You also need to plan the launch time and take the maximum duration of the tests into account.

Analysis of the issued reports. Provide a simple and intuitive form of reporting on the work done. Use continuous integration systems to schedule tests and to quickly receive and analyze test results.

If you want to share this article with an English-speaking audience, please use the link to the translation: Sergey Khrenov. Visual Studio Coded UI Tests: