The gc compiler uses a special Lisp-like domain-specific language ( DSL ) to describe the Static Single Assignment (SSA) optimization rules.

I propose to analyze the main elements of this language, its features and limitations.

As an exercise, add a compiler to Go to generate an instruction that it had not previously generated, optimizing the expression a*b+c .

This is the first article in the series about the insides of the Go SSA compiler backend , therefore, in addition to reviewing the DSL rule descriptions, we will look at related components to create the necessary basis for our next session.

Introduction

Frontend Go compiler ends at the time of generation of the SSA view from the annotated AST. The functions responsible for the conversion can be found in cmd / compile / internal / gc / ssa.go. The entry point to the SSA backend is the ssa.Compile function, defined in the cmd / compile / internal / ssa / compile.go file .

Terminology| En | Ru | Value |

|---|

| Compiler frontend | Frontend compiler | Parsing and lexical analysis, sometimes type resolution, intermediate representation is close to the source code, usually some annotated AST. |

| Compiler backend | Compiler backend | Lower-level optimizations and intermediate presentation, code generation. |

| Form | The form | Almost synonymous with the word "expression" (expression). Usually in Lisp form is a fairly common way to name a program element, be it a list or an atom. |

| Optimization pass | Optimization phase | Execution of a specific algorithm on the program. The word "pass" is somewhat ambiguous, because one phase can perform several passes, and / or use a code that is common with other phases. |

If, as you read the article, you have found a completely incomprehensible term for you, you should report it, perhaps it will be added to this table.

The Go Go SSA optimizer consists of several phases, each of which performs passes along the compiled function. Some phases use the so-called "rewrite rules", the rules for converting some SSA sequences to others, potentially more optimal.

Transformation rules are described using S-expressions . The elements of these expressions are ssa.Value . In the simplest case, these rules allow you to replace one ssa.Value with another.

For example, the code below collapses the multiplication of 8-bit constants:

(Mul8 (Const8 [c]) (Const8 [d])) -> (Const8 [int64(int8(c*d))])

There are two main categories of SSA values: high-level, almost independent of the target machine, and those that are architectural-specific (usually mapped to 1-in-1 machine instructions).

Optimizations are described in terms of these two categories. First, high-level and common to all architectures, then platform-oriented.

All code associated with the rules is in cmd / compile / internal / ssa / gen . We will consider two sets:

- genericOps.go - machine-independent operations.

- AMD64Ops.go - operations specific to

GOARCH=AMD64 (64-bit x86).

After the first few phases that work on an abstract machine, the so-called lowering is performed, as a result of which the transition from genericOps to a set of concrete architecture occurs. In our example, this will be AMD64Ops . After this point, all subsequent phases operate on a view from the second category.

After the optimizer, the code generator comes into play. For AMD64, the implementation of code generation can be found in the cmd / compile / internal / amd64 package. The task of the code generator is to replace ssa.Block and ssa.Value in the sequence obj.Prog , transmitted to the assembler . The assembler will collect the machine code, which will be ready for execution after linking .

Optimization rules

If files with operations definitions are named like " ${ARCH}Ops.go ", then the optimization rules are placed in " ${ARCH}.Rules ".

High-level rules perform simple transformations, most of the folding of constant expressions , as well as some transformations that simplify subsequent processing.

Each file with low-level rules consists of two parts:

- Lowering - replacing abstract operations with machine equivalents.

- Directly optimizations themselves.

An example of converting an operation to a machine:

(Const32 [val]) -> (MOVLconst [val]) // L - long, 32- (Const64 [val]) -> (MOVQconst [val]) // Q - quad, 64- | | generic op | AMD64 op

It is in low-level optimizations that the main number of important optimizations are performed, such as reducing the cost of operations , partial embedding and utilization of the capabilities of the memory addressing modes available in the processor.

Operations have a mnemonic name, which is usually called an opcode. Opcodes of architecture-dependent operations, as a rule, reflect the names of real instructions.

Rule Description Language Syntax

The basic grammar is described in rulegen.go :

Snippet translation above It is also worth mentioning that inside the .Rules files allowed " // " comments.

Let us analyze a simple example that contains all these elements:

Opcode=ADDLconst - 32- : AuxInt=c - , `x` : : (ADDLconst [c] x) && int32(c)==0 -> x | / | / | | / | / | | / | / | / ( `&&` ) ,

All these explanatory signatures are not part of a valid rule entry.

This rule converts x+0 to x . Everything inside the condition section is just Go code,

unless limited to expressions which result should be bool .

You can call predicates defined in rewrite.go .

In addition to the usual opcodes, combinations that generate several rules can be used:

(ADD(Q|L)const [off] x:(SP)) -> (LEA(Q|L) [off] x) // Q|L alternation: (ADDQconst [off] x:(SP)) -> (LEAQ [off] x) (ADDLconst [off] x:(SP)) -> (LEAL [off] x) // `x`: (ADDQconst [off] (SP)) -> (LEAQ [off] (SP)) (ADDLconst [off] (SP)) -> (LEAL [off] (SP))

(SP) is one of the operations in genericOps.go and expresses the loading of a pointer to a hardware stack. For architectures where there is no hardware SP , it is emulated.

Features of variables in templates (S-expressions to the left of -> ):

- Variables such as

x , without an expression over : capture anything - similar to a regular variable,

_ captures any value, but the result can be ignored

// : ADDQconst, // : (ADDQconst _) -> v (ADDQconst x) -> (ADDQconst x)

If AuxInt not specified (the expression in square brackets), then the rule will be triggered on any value of AuxInt . Similarly with the {} -parameters (about them below).

The name v means the outermost captured form.

For example, for the expression (ADDQconst (SUBQconst x)) outer form is ADDQconst .

Variables can be used several times, this allows you to require matching several parts of the S-expression to each other:

(ADDQconst [v] (ADDQconst [v] x)) // , , "x+2+2" (x+v+v).

Types in rules

In some cases, it is required to explicitly indicate the type of generated and / or compatible form.

The type is indicated in "triangular brackets", as a type argument in C ++ templates:

// typ.UInt32 - BTSLconst. // BSFL typ.UInt32, // . (Ctz16 x) -> (BSFL (BTSLconst <typ.UInt32> [16] x))

In addition to types, there are "characters" (or, more universally, Aux properties).

(StaticCall [argsWidth] {target} mem) -> (CALLstatic [argsWidth] {target} mem)

[argsWidth] - Value.AuxInt . For StaticCall , the total size of the arguments passed.{target} - Value.Aux . For StaticCall , the function being called<typ.UInt32> - Value.Type . Value type

The semantics of Aux and AuxInt vary greatly from operation to operation. The best source of documentation in this case are *Ops.go files. Each opData opcode opData has an aux field that describes how to interpret these fields.

To describe the types used package cmd / compile / internal / types . Some types are specific to the SSA backend, such as types.TypeFlags , others are common between cmd/compile/internal/gc and cmd/compile/internal/ssa .

Special types

There is a special types.TypeMem type, which performs several functions at once:

- Allows you to sort and group

ssa.Value by memory access patterns. In particular, it guarantees the correct order of execution within the base blocks (about them below). - Defines the state of the memory flow in the program If the instruction modifies the memory, a new SSA value with

types.TypeMem type will be generated as a result of this operation.

Like the special meaning of OpPhi , memory is interpreted in an exceptional way in many phases of the optimizer.

Little about PhiPhi has a role that varies slightly from phase to phase.

At the very beginning of the SSA compiler, Phi serves its classical purpose and expresses the choice of value depending on the execution path that led us to this value.

For example, if there are two jumps in a block, and both modify the memory, then the block assignment will receive a memory equal to (Phi mem1 mem2) . Loops also drag Phi value.

Another special type is the types.TypeFlags mentioned above. This type describes how a CPU instruction generates flags .

In this case, the instructions, like ADDQ , although generating flags, do not have types.Flags type, but are marked with the clobberFlags attribute.

types.Flags used to highlight the result of instructions like CMPQ , which do not write the result to any of their explicit operands, but only update the internal state of the processor, which can be used by the following instruction.

Instructions like SETL allow you to "read" the flags and return them in the form of ssa.Value , which can be placed in the register.

L-less than G-greater than | | (SETL (InvertFlags x)) -> (SETG x) | ,

SSA Inspection Program

Suppose we have such a program ( example.go ):

package example func fusedMulAdd(a, b, c float64) float64 { return a*c + b }

We can view the SSA code that is generated for the fusedMulAdd function:

$ GOSSAFUNC=fusedMulAdd go tool compile example.go > ssa.txt

Now check the working (current) directory:

ssa.txt contains a text dump.ssa.html automatically generated ssa.html contains the same information, but in a more interactive and conveniently readable format. Try to open in the browser.

Machine code for fusedMulAddThe ~r3 symbol is renamed ret for expressiveness.

v7 (4) MOVSD a(SP), X0 v11 (4) MOVSD c+16(SP), X1 v12 (4) MULSD X1, X0 v6 (4) MOVSD b+8(SP), X1 v13 (4) ADDSD X1, X0 v15 (4) MOVSD X0, ret+24(SP) b1 (4) RET

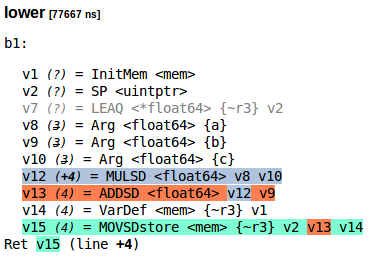

This is how the SSA program for fusedMulAdd after the lower phase (ssa.html):

Text format SSA programIf for some reason you wanted to copy it:

lower [77667 ns] b1: v1 (?) = InitMem <mem> v2 (?) = SP <uintptr> v7 (?) = LEAQ <*float64> {~r3} v2 v8 (3) = Arg <float64> {a} v9 (3) = Arg <float64> {b} v10 (3) = Arg <float64> {c} v12 (+4) = MULSD <float64> v8 v10 v13 (4) = ADDSD <float64> v12 v9 v14 (4) = VarDef <mem> {~r3} v1 v15 (4) = MOVSDstore <mem> {~r3} v2 v13 v14 Ret v15 (line +4)

Translating this into S-expressions:

(MOVQstore {~r3} (SP) (ADDSD (MULSD (Arg {a}) (Arg {c})) (Arg {b})))

SSA after regalloc phaseThis is the output of ssa.html for the regalloc phase.

regalloc [87237 ns] b1: v1 (?) = InitMem <mem> v14 (4) = VarDef <mem> {~r3} v1 v2 (?) = SP <uintptr> : SP v8 (3) = Arg <float64> {a} : a[float64] v9 (3) = Arg <float64> {b} : b[float64] v10 (3) = Arg <float64> {c} : c[float64] v7 (4) = LoadReg <float64> v8 : X0 v11 (4) = LoadReg <float64> v10 : X1 v12 (+4) = MULSD <float64> v7 v11 : X0 v6 (4) = LoadReg <float64> v9 : X1 v13 (4) = ADDSD <float64> v12 v6 : X0 v15 (4) = MOVSDstore <mem> {~r3} v2 v13 v14 Ret v15 (line +4)

Adding new optimization rules

On FMA processors, we can calculate a*c + b for one instruction instead of two.

Take as a basis CL117295 for the authorship of Ilya Tokar .

For your convenience, I have prepared a diff patch .

1. Adding a new operation - FMASD

In the file compile/internal/ssa/gen/AMD64Ops.go find the slice variable AMD64ops and add a new element there (to any place):

{

Since earlier (fp,fp,fp -> fp) there were no operations, you need to define a new specifier:

fp01 = regInfo{inputs: nil, outputs: fponly} fp21 = regInfo{inputs: []regMask{fp, fp}, outputs: fponly} + fp31 = regInfo{inputs: []regMask{fp, fp, fp}, outputs: fponly}

2. Adding an optimization rule

(ADDSD (MULSD xy) z) -> (FMASD zxy)

A better implementation would not be unconditional and would test the availability of FMA. We will assume that there is definitely an FMA on our target machine.

The compiler uses the config for such checks:

// config.useFMA=false, . (ADDSD (MULSD xy) z) && config.useFMA-> (FMASD zxy)

How to check FMA support?If lscpu is available in the system, then, for example:

$ lscpu | grep fma

3. Implementing code generation

Now, in the ssaGenValue function defined in the compile/internal/amd64/ssa.go , you need to add code generation for FMASD :

func ssaGenValue(s *gc.SSAGenState, v *ssa.Value) { switch v.Op { case ssa.OpAMD64FMASD: p := s.Prog(v.Op.Asm())

Now everything is ready to check the performance of our new optimization. It is very rare to add new instructions, usually new optimizations are done based on already defined SSA operations.

Checking results

The first step is to generate Go code from the updated gen/AMD64Ops.go and gen/AMD64.Rules .

Next you need to build our new compiler:

go install cmd/compile

Now, when compiling the same example, we get another machine code:

- v7 (4) MOVSD a(SP), X0 - v11 (4) MOVSD c+16(SP), X1 - v12 (4) MULSD X1, X0 - v6 (4) MOVSD b+8(SP), X1 - v13 (4) ADDSD X1, X0 - v15 (4) MOVSD X0, ret+24(SP) - b1 (4) RET + v12 (4) MOVSD b+8(SP), X0 + v7 (4) MOVSD a(SP), X1 + v11 (4) MOVSD c+16(SP), X2 + v13 (4) VFMADD231SD X2, X1, X0 + v15 (4) MOVSD X0, ret+24(SP) + b1 (4) RET

Basic blocks

Now that the most difficult work is done, let's talk about the basic blocks .

The values that we optimized above are in blocks, and the blocks are in function.

Blocks, like ssa.Value , are abstract and machine-dependent. All blocks have exactly one entry point and from 0 to 2 assignment blocks (depending on the type of block).

The simplest blocks are If , Exit and Plain :

Exit block has 0 assignment blocks. These are leaf blocks that make a non-local jump, for example, using panic .Plain block has 1 block-assignment. It can be considered as an unconditional transition after the execution of all instructions of a block to another block.If block has 2 destination blocks. The transition is carried out depending on the condition ( Block.Control ).

Here are some simple examples of rewriting abstract blocks into AMD64 blocks:

"then" ( ) | "else" ( ) | | (If (SETL cmp) yes no) -> (LT cmp yes no) (If (SETLE cmp) yes no) -> (LE cmp yes no)

The block theme will be discussed in more detail in the context of other optimizing phases in the SSA compiler part.

Limitations of optimization rules

SSA backend has its advantages. Some optimizations are feasible for O(1) . However, there are also disadvantages, due to which the SSA optimizer alone will not be enough, at least until some aspects of its implementation change.

Suppose you want to append :

xs = append(xs, 'a') xs = append(xs, 'b')

At the time when the SSA is generated, the high-level structure of the code is lost and the append , being a non-normal function, is already embedded in the body of the containing block. You will have to write a bulky rule that captures the entire sequence of operations generated for append .

Speaking specifically about .Rules , then this DSL is quite weak work with blocks ( ssa.Block ). Any nontrivial optimization associated with blocks is not possible for expression in this language. Partial update of the block is not possible. It is also impossible to throw out blocks (but there is a hack in the form of a First block, which is used to delete a dead code).

Even if part of the flaws are correctable, most compilers agree that there is no single and better intermediate form for representing code.

Go that goes faster

If you come up with some cool optimization rules, feel free to send it to go-review.googlesource.com . I would be happy to make a review (add to CC iskander.sharipov@intel.com ).

Happy hacking compiler!

Bonus material

Examples of good patches in Go that added or modified SSA rules:

A README document appeared recently to describe the SSA part of the compiler.

Recommended for reading.