Analyzing binary code, that is, code executed directly by the machine, is a non-trivial task. In most cases, binary code that needs to be analyzed is first recovered by disassembling it and then decompiling it into some high-level representation, and only then is the result analyzed.

It must be said that the recovered code, in its textual form, has little in common with the code that the programmer originally wrote and compiled into an executable file. For compiled languages such as C/C++ and Fortran, the original source cannot be recovered exactly from the binary, since this task cannot be formalized algorithmically. In converting the programmer's source code into a program that the machine executes, the compiler performs irreversible transformations.

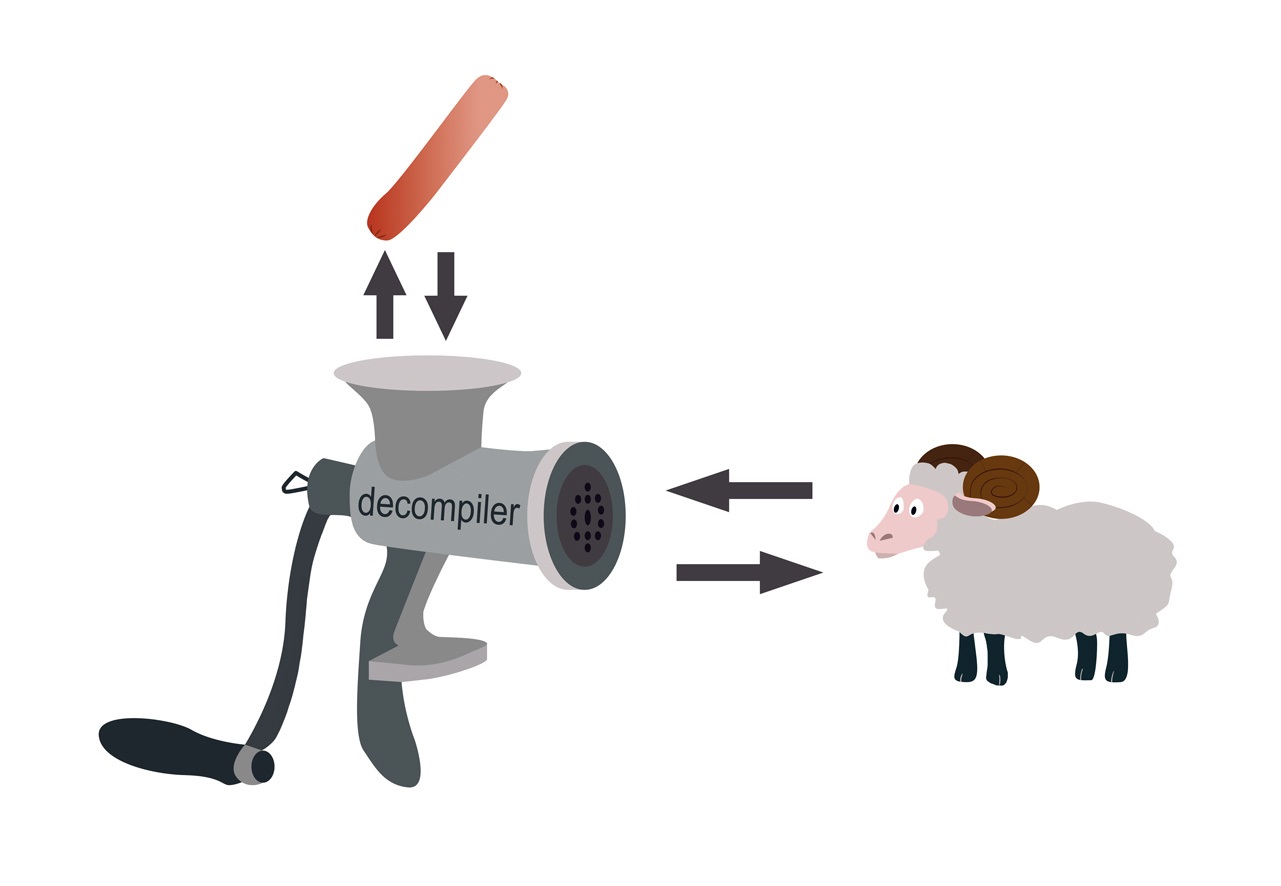

In the 1990s, a common view was that the compiler works like a meat grinder that minces the original program, and that restoring the program is like reconstructing a ram from a sausage.

However, things are not that bad. In becoming a sausage, the ram loses its functionality, whereas a binary program retains it. If the resulting sausage could run and jump, the two tasks would be similar.

Since the binary program retains its functionality, the executable code can be restored to a high-level representation such that the binary program, whose original source is unavailable, and the program whose text we have recovered are functionally equivalent.

By definition, two programs are functionally equivalent if, on the same input data, both complete or do not complete their execution and, if execution ends, produce the same result.
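The definition above can be illustrated with a small spot check. This is a minimal sketch, not a proof technique: agreement on a finite sample of inputs can only fail to refute equivalence, it cannot establish it. The routines `original_sum` and `recovered_sum` and the helper `agree_on_prefixes` are hypothetical names invented for this illustration; the second routine is written the way a decompiler might plausibly emit the first.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical "original" routine: sum of the first n elements. */
long original_sum(const int *data, size_t n) {
    assert(data != NULL || n == 0);   /* precondition of this sketch */
    long total = 0;
    for (size_t i = 0; i < n; ++i) {
        total += data[i];
    }
    return total;
}

/* Hypothetical "recovered" routine: same computation expressed with
 * pointer arithmetic and a countdown loop instead of indexing. */
long recovered_sum(const int *data, size_t n) {
    assert(data != NULL || n == 0);   /* same precondition */
    long acc = 0;
    const int *p = data;
    while (n != 0) {
        acc += *p++;
        --n;
    }
    return acc;
}

/* Returns true if both routines give the same result on every prefix
 * of the sample, including the empty prefix. */
bool agree_on_prefixes(const int *sample, size_t n) {
    for (size_t len = 0; len <= n; ++len) {
        if (original_sum(sample, len) != recovered_sum(sample, len)) {
            return false;
        }
    }
    return true;
}
```

Both routines terminate on every input allowed by the precondition, so here the definition reduces to comparing results. A mismatch on any sample would show that the recovered code is not equivalent to the original.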

Disassembly is usually performed semi-automatically: a specialist recovers the code by hand using interactive tools such as the IDA Pro interactive disassembler, radare, or another tool. Decompilation is then also performed semi-automatically. To assist the specialist, a decompiler such as Hex-Rays, SmartDecompiler, or another one suited to the task at hand is used.

For languages such as Java or those of the .NET family, which are translated into bytecode, decompilation is a different problem: the original text of the program can be recovered from bytecode quite accurately. We do not consider that case in this article.

So, binary programs can be analyzed by decompiling them. Such an analysis is usually performed to understand the behavior of a program so that it can be replaced or modified.

From the practice of working with legacy programs

Some software written 40 years ago in low-level languages of the C and Fortran family controls oil production equipment. A failure of this equipment may be critical for production, so changing the software is highly undesirable. Over the years, however, the source code has been lost.

A new employee of the information security department, whose duties included understanding how things work, discovered that the sensor control program regularly writes something to disk, but it was unclear what it writes and how that information could be used. He also had the idea of displaying equipment monitoring data on one big screen. To do this, it was necessary to figure out how the program works, what it writes to disk and in what format, and how this information can be interpreted.

To solve the problem, we applied decompilation followed by analysis of the recovered code. We first disassembled the software components one by one, then located the code responsible for input and output, and gradually recovered the rest starting from that code, following its dependencies. The program logic was then restored, which allowed us to answer all the security service's questions about the software.

If the analysis of a binary program is required in order to restore the logic of its operation, partially or completely restore the logic of converting input data to the output, etc., it is convenient to do this using a decompiler.

In addition to such tasks, in practice binary programs also have to be analyzed against information security requirements. Here the customer does not always understand how time-consuming this analysis is. It would seem enough to decompile the program and run a static analyzer on the resulting code. In practice, this almost never yields a high-quality analysis.

First, vulnerabilities must be not only found but also explained. When a vulnerability is found in a program written in a high-level language, an analyst or a code analysis tool points to the code fragments whose flaws caused it. What if there is no source code? How do you show which code caused the vulnerability?

The decompiler recovers code that is “littered” with recovery artifacts, and mapping an identified vulnerability onto such code is useless because it explains nothing. Moreover, the recovered code is poorly structured and therefore hard for code analysis tools to process. Explaining a vulnerability in terms of the binary program is also difficult, because the person receiving the explanation must be well versed in the binary representation of programs.

Second, binary analysis against information security requirements must be carried out with an understanding of what to do with the result, since fixing a vulnerability directly in binary code is very difficult and there is no source code to fix instead.

Despite all the peculiarities and difficulties of static analysis of binary programs against information security requirements, there are many situations in which it should be performed. If for some reason there is no source code and the binary program implements functionality that is critical to information security, it should be checked. If vulnerabilities are found, the application should be sent for rework where possible, or wrapped in an additional “shell” that controls the movement of sensitive information.

When the vulnerability is hidden in a binary file

If the executed code is highly critical, it is useful to audit the binary file even when the source code in a high-level language is available. This helps rule out surprises that the compiler may introduce through optimizing transformations. For instance, in September 2017 an optimization performed by the Clang compiler was widely discussed. It resulted in a call to a function that should never be called.

    #include <stdlib.h>

    typedef int (*Function)();

    static Function Do;

    static int EraseAll() {
      return system("rm -rf /");
    }

    void NeverCalled() {
      Do = EraseAll;
    }

    int main() {
      return Do();
    }

As a result of its optimizing transformations, the compiler produces the following assembly code. The example was compiled for Linux x86 with the -O2 flag.

            .text
            .globl  NeverCalled
            .align  16, 0x90
            .type   NeverCalled,@function
    NeverCalled:                            # @NeverCalled
            retl
    .Lfunc_end0:
            .size   NeverCalled, .Lfunc_end0-NeverCalled

            .globl  main
            .align  16, 0x90
            .type   main,@function
    main:                                   # @main
            subl    $12, %esp
            movl    $.L.str, (%esp)
            calll   system
            addl    $12, %esp
            retl
    .Lfunc_end1:
            .size   main, .Lfunc_end1-main

            .type   .L.str,@object          # @.str
            .section        .rodata.str1.1,"aMS",@progbits,1
    .L.str:
            .asciz  "rm -rf /"
            .size   .L.str, 9

The source code has undefined behavior. Do is a static function pointer that is zero-initialized, and main() calls through it without it ever having been assigned; calling through a null pointer is undefined behavior. During optimization the compiler most likely performs alias analysis, sees that the only assignment to Do is the one in NeverCalled(), and, since it is allowed to assume that undefined behavior never occurs, concludes that Do must hold the address of EraseAll() at the point of the call. The result is that NeverCalled() is never called, yet main() directly executes the body of EraseAll(), which runs the “rm -rf /” command.
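One way to remove the undefined behavior is to test the pointer before calling through it. The sketch below mirrors the structure of the example above but uses a harmless stand-in for EraseAll(); all names in it (`handler`, `harmless_action`, `install_handler`, `run_handler`) are hypothetical and chosen for this illustration only. Because the null case is now handled explicitly, the program has defined behavior on both paths, and the compiler is not entitled to assume that the pointer was set.

```c
#include <assert.h>
#include <stddef.h>

typedef int (*Function)(void);

/* Zero-initialized static pointer, like Do in the example above. */
static Function handler;

/* Harmless stand-in for EraseAll(); the return value is arbitrary. */
int harmless_action(void) {
    return 42;
}

/* Setter for the pointer. Installing NULL is treated as a caller bug
 * and is caught in debug builds. */
void install_handler(Function f) {
    assert(f != NULL);
    handler = f;
}

/* Counterpart of main(): the pointer is tested before the call, so the
 * "nothing installed" case is well-defined and returns an error code. */
int run_handler(void) {
    if (handler == NULL) {
        return -1;
    }
    return handler();
}
```

Calling `run_handler()` before any handler is installed returns -1 instead of invoking anything; after `install_handler(harmless_action)` it returns the handler's result.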

In the next example, the compiler's optimizing transformations remove the check of a pointer against NULL that was meant to guard a dereference.

    #include <cstdlib>
    void Checker(int *P) {
      int deadVar = *P;
      if (P == 0) return;
      *P = 8;
    }

Since line 3 dereferences the pointer, the compiler assumes that the pointer is non-null. Line 4 is then removed by the “unreachable code elimination” optimization, since the comparison is considered redundant, and after that line 3 is removed by “dead code elimination”. Only line 5 remains. The assembly code produced by gcc 7.3 for Linux x86 with the -O2 flag is shown below.

            .text
            .p2align 4,,15
            .globl  _Z7CheckerPi
            .type   _Z7CheckerPi, @function
    _Z7CheckerPi:
            movl    4(%esp), %eax
            movl    $8, (%eax)
            ret
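For comparison, here is a sketch of how Checker could be written so that the null test survives optimization. The names `checker_fixed` and `checker_strict` are hypothetical. The first variant performs the test before any dereference, so the compiler has no earlier access from which to infer that the pointer is non-null. The second is an alternative design under a different assumption: a null argument is treated as a caller bug and checked only in debug builds.

```c
#include <assert.h>
#include <stddef.h>

/* Variant 1: test first, dereference afterwards. No dereference
 * precedes the comparison, so it cannot be treated as redundant. */
void checker_fixed(int *p) {
    if (p == NULL) {
        return;
    }
    *p = 8;
}

/* Variant 2: non-null is a documented precondition. The assert is
 * active unless NDEBUG is defined; release builds rely on the caller. */
void checker_strict(int *p) {
    assert(p != NULL);
    *p = 8;
}
```

Which variant fits depends on whether a null pointer is a legitimate input (variant 1) or a programming error (variant 2).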

The examples above show compiler optimizations that are triggered by undefined behavior (UB) in the code. Yet this is ordinary-looking code that most programmers would consider safe. Today programmers spend time eliminating undefined behavior from their programs, whereas 10 years ago they paid little attention to it. As a result, legacy code may contain vulnerabilities caused by UB.

Most modern static source code analyzers do not detect UB-related errors. Therefore, if the code implements functionality that is critical to information security, both its source and the code that will actually be executed should be checked.