On Wednesday, Kubernetes 1.11 was released. Continuing our tradition, we cover the most significant changes, based on CHANGELOG-1.11 and numerous issues, pull requests and design proposals. What's new in K8s 1.11?

Networking

Let's start with networking, since the Kubernetes 1.11 announcement marked the official stabilization (i.e., promotion to General Availability) of two important features introduced in earlier releases. The first one is in-cluster service load balancing based on IPVS (IP Virtual Server).

This capability came from Huawei, which last spring presented to the community the results of its work on load balancing for 50,000+ services using IPVS instead of iptables. The choice was explained quite logically: “While iptables was built for firewalls and is based on in-kernel rule lists, IPVS was built for load balancing and is based on in-kernel hash tables. In addition, IPVS supports more advanced load balancing algorithms than iptables, as well as a number of other useful features (for example, health checking, retries, etc.).”

Slide from Huawei's “Scaling Kubernetes to Support 50,000 Services” presentation at KubeCon Europe 2017

What did this bring in practice? “Better network throughput, lower programming latency [i.e., the time it takes for new endpoints to be added to services; translator's note] and higher scalability limits for the in-cluster load balancer.” The alpha version of the IPVS mode for kube-proxy appeared in Kubernetes 1.8 and has reached stable status in release 1.11: although it is not enabled by default, it is now officially ready to serve traffic in production clusters.
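To make the “rule lists vs. hash tables” argument concrete, here is a toy Go model, not kube-proxy's actual code: an iptables-style chain is scanned rule by rule, while an IPVS-style table is a hash map keyed by the virtual IP:port. The service addresses and pod names are made up, and the round-robin picker stands in for just one of the schedulers IPVS offers.

```go
package main

import "fmt"

// Rule is one entry of an iptables-style chain: "if destination == VIP, jump".
type Rule struct {
	VIP      string
	Backends []string
}

// linearLookup scans the chain in order, as iptables does, and reports how
// many rules it had to check: the cost grows with the number of services.
func linearLookup(chain []Rule, vip string) ([]string, int) {
	for i, r := range chain {
		if r.VIP == vip {
			return r.Backends, i + 1
		}
	}
	return nil, len(chain)
}

// hashLookup models IPVS keeping virtual services in a hash table:
// the lookup cost does not depend on how many services exist.
func hashLookup(table map[string][]string, vip string) []string {
	return table[vip]
}

// roundRobin cycles through backends; IPVS offers this and other schedulers.
type roundRobin struct{ next int }

func (rr *roundRobin) pick(backends []string) string {
	b := backends[rr.next%len(backends)]
	rr.next++
	return b
}

func main() {
	const services = 50000
	chain := make([]Rule, 0, services)
	table := make(map[string][]string, services)
	for i := 0; i < services; i++ {
		// Hypothetical service addresses, for illustration only.
		vip := fmt.Sprintf("10.96.%d.%d:80", i/256, i%256)
		backends := []string{"pod-a", "pod-b"}
		chain = append(chain, Rule{VIP: vip, Backends: backends})
		table[vip] = backends
	}

	last := "10.96.195.79:80" // the 50,000th service (i = 49999)
	_, checked := linearLookup(chain, last)
	fmt.Println("rules checked by linear scan:", checked) // 50000

	rr := &roundRobin{}
	backends := hashLookup(table, last)
	fmt.Println(rr.pick(backends), rr.pick(backends), rr.pick(backends)) // pod-a pod-b pod-a
}
```

The real kernel data structures are of course far more involved; the sketch only shows why per-packet cost stays flat with IPVS as the number of services grows.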

The second feature to graduate is CoreDNS as the cluster DNS server for Kubernetes. We covered it in more detail in a separate review; in short, it is a flexible, easily extensible DNS server written in Go and aimed at cloud native applications. It was originally based on the Caddy web server and became the successor to SkyDNS (on which kube-dns, the component CoreDNS replaces, is itself based). CoreDNS is also notable for running as a single executable and a single process. It is now not just another DNS option for Kubernetes clusters but the default in kubeadm. Instructions for using CoreDNS in Kubernetes are available here (and for Cluster Federation, here).
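Whichever server answers, kube-dns or CoreDNS, it serves the same naming scheme defined by the Kubernetes DNS specification. A minimal Go sketch of how those names are built, assuming the common default cluster domain cluster.local (it is configurable per cluster); pod records are only served when the server is configured for them (in CoreDNS, via the pods option of the kubernetes plugin).

```go
package main

import (
	"fmt"
	"strings"
)

// clusterDomain is an assumption: cluster.local is the usual default.
const clusterDomain = "cluster.local"

// serviceName is the A/AAAA record of a Service:
// <service>.<namespace>.svc.<cluster-domain>
func serviceName(service, namespace string) string {
	return fmt.Sprintf("%s.%s.svc.%s", service, namespace, clusterDomain)
}

// srvName is the SRV record of a named Service port:
// _<port-name>._<protocol>.<service>.<namespace>.svc.<cluster-domain>
func srvName(port, protocol, service, namespace string) string {
	return fmt.Sprintf("_%s._%s.%s", port, strings.ToLower(protocol), serviceName(service, namespace))
}

// podName is the A record of a pod, with the dots of its IP replaced by dashes:
// <a-b-c-d>.<namespace>.pod.<cluster-domain>
func podName(ip, namespace string) string {
	return fmt.Sprintf("%s.%s.pod.%s", strings.ReplaceAll(ip, ".", "-"), namespace, clusterDomain)
}

func main() {
	fmt.Println(serviceName("kubernetes", "default"))             // kubernetes.default.svc.cluster.local
	fmt.Println(srvName("https", "TCP", "kubernetes", "default")) // _https._tcp.kubernetes.default.svc.cluster.local
	fmt.Println(podName("10.244.1.7", "default"))                 // 10-244-1-7.default.pod.cluster.local
}
```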

Other networking updates in Kubernetes include:

- in NetworkPolicies, you can now select specific pods in other namespaces by combining namespaceSelector and podSelector;
- Services can now listen on the same host ports on different interfaces (specified in --nodeport-addresses);
- a bug was fixed that caused the Kubelet to stop reporting ExternalDNS, InternalDNS and ExternalIP addresses when --node-ip is used.
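The --nodeport-addresses flag of kube-proxy takes a list of CIDR blocks, and NodePort services are then exposed only on node addresses that fall into them (an empty list means all local addresses). A rough Go sketch of that filtering step, with made-up node addresses; it illustrates the rule, not kube-proxy's implementation.

```go
package main

import (
	"fmt"
	"net"
)

// nodePortAddresses keeps the node IPs that fall into any of the given CIDRs.
// An empty CIDR list means "use all addresses", matching the flag's default.
func nodePortAddresses(nodeIPs []string, cidrs []string) ([]string, error) {
	if len(cidrs) == 0 {
		return nodeIPs, nil
	}
	nets := make([]*net.IPNet, 0, len(cidrs))
	for _, c := range cidrs {
		_, n, err := net.ParseCIDR(c)
		if err != nil {
			return nil, fmt.Errorf("bad CIDR %q: %w", c, err)
		}
		nets = append(nets, n)
	}
	var out []string
	for _, s := range nodeIPs {
		ip := net.ParseIP(s)
		if ip == nil {
			continue // skip anything that is not an IP address
		}
		for _, n := range nets {
			if n.Contains(ip) {
				out = append(out, s)
				break
			}
		}
	}
	return out, nil
}

func main() {
	// Hypothetical node addresses on three interfaces.
	nodeIPs := []string{"10.0.0.5", "192.168.1.5", "127.0.0.1"}
	addrs, err := nodePortAddresses(nodeIPs, []string{"10.0.0.0/8", "127.0.0.0/8"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(addrs) // [10.0.0.5 127.0.0.1]
}
```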

Storage

The protection against deleting PVCs (PersistentVolumeClaims) that are in use by pods, and PVs (PersistentVolumes) bound to PVCs, introduced in Kubernetes 1.9 and later (in K8s 1.10) renamed StorageObjectInUseProtection, has been declared stable.
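This protection is implemented with finalizers: a protected PVC carries the kubernetes.io/pvc-protection finalizer (PVs carry kubernetes.io/pv-protection), and the API server only removes an object once its finalizer list is empty. The Go model below is a simplified sketch of that controller logic for PVCs; the PVC and Pod types are hypothetical, trimmed-down stand-ins for the real API objects, and "in use" is reduced to "referenced by some pod in the list".

```go
package main

import "fmt"

const pvcProtection = "kubernetes.io/pvc-protection"

// PVC and Pod are hypothetical stand-ins for the API objects.
type PVC struct {
	Name       string
	Finalizers []string
	Deleting   bool // true once deletion was requested (deletionTimestamp set)
}

type Pod struct {
	Name   string
	Claims []string // PVC names referenced by the pod's volumes
}

func inUse(claim string, pods []Pod) bool {
	for _, p := range pods {
		for _, c := range p.Claims {
			if c == claim {
				return true
			}
		}
	}
	return false
}

// reconcile drops the protection finalizer only when deletion was requested
// and no pod still uses the claim.
func reconcile(pvc *PVC, pods []Pod) {
	if !pvc.Deleting || inUse(pvc.Name, pods) {
		return
	}
	kept := make([]string, 0, len(pvc.Finalizers))
	for _, f := range pvc.Finalizers {
		if f != pvcProtection {
			kept = append(kept, f)
		}
	}
	pvc.Finalizers = kept
}

// gone reports whether the API server would now actually remove the object.
func gone(pvc PVC) bool {
	return pvc.Deleting && len(pvc.Finalizers) == 0
}

func main() {
	pvc := &PVC{Name: "data", Finalizers: []string{pvcProtection}, Deleting: true}
	pods := []Pod{{Name: "db-0", Claims: []string{"data"}}}

	reconcile(pvc, pods)
	fmt.Println("removed while pod uses it:", gone(*pvc)) // false

	reconcile(pvc, nil) // the pod has gone away
	fmt.Println("removed after pod is gone:", gone(*pvc)) // true
}
```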

Resizing volumes (PVs), which takes effect after the pod restarts, has moved to beta, and an alpha feature now allows resizing volumes online, i.e. without restarting the pod.

For AWS EBS and GCE PD, the maximum number of volumes that can be attached to a node has been increased, and the AWS EBS, Azure Disk, GCE PD and Ceph RBD plug-ins gained support for dynamic provisioning of raw block volumes. AWS EBS volumes can now also be used in pods in ReadOnly mode.

Kubernetes 1.11 also introduced alpha support for dynamic volume limits that depend on the node type, as well as API support for block volumes in external CSI (Container Storage Interface, introduced in Kubernetes 1.9) storage drivers. CSI was also integrated with the new Kubelet plug-in registration mechanism.
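A per-node volume limit boils down to a scheduling check: count the distinct volumes already attached to the node plus the new ones the pod brings, and compare the total with the limit for that node. In this sketch the node types, their limits and the volume IDs are made-up values, not the numbers Kubernetes uses; the point is only that the limit depends on the node rather than being one global constant.

```go
package main

import "fmt"

// Hypothetical per-node-type limits, for illustration only.
var maxVolumesByType = map[string]int{
	"small-vm": 2,
	"large-vm": 8,
}

// Node is a trimmed-down view of what the check needs.
type Node struct {
	Type     string
	Attached map[string]bool // IDs of volumes already attached
}

// fits reports whether attaching the pod's volumes keeps the node within its
// limit. Volumes already attached (or listed twice) are counted only once.
func fits(n Node, podVolumes []string) bool {
	limit, ok := maxVolumesByType[n.Type]
	if !ok {
		return false // unknown node type: be conservative in this sketch
	}
	count := len(n.Attached)
	added := map[string]bool{}
	for _, v := range podVolumes {
		if n.Attached[v] || added[v] {
			continue
		}
		added[v] = true
		count++
	}
	return count <= limit
}

func main() {
	small := Node{Type: "small-vm", Attached: map[string]bool{"vol-1": true}}
	large := Node{Type: "large-vm", Attached: map[string]bool{"vol-1": true}}
	pod := []string{"vol-1", "vol-2", "vol-3"}

	fmt.Println("fits small node:", fits(small, pod)) // false (3 > 2)
	fmt.Println("fits large node:", fits(large, pod)) // true
}
```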

Cluster nodes

The top 5 major changes in Kubernetes 1.11 also include the promotion to beta of dynamic Kubelet configuration, which first appeared in K8s 1.8 and has required many changes (you can track them in the original Dynamic Kubelet Configuration ticket). This feature lets you roll out new Kubelet configurations to live clusters (previously, Kubelet settings were passed via command-line flags). To use it, start the Kubelet with the --dynamic-config-dir option.
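Conceptually, the Kubelet pulls the new configuration (a ConfigMap referenced from the Node object), checkpoints it under --dynamic-config-dir, and falls back to its last-known-good configuration if the new one turns out to be invalid. The Go sketch below models only that fallback decision; configManager and the single MaxPods field are hypothetical simplifications of the real KubeletConfiguration type and checkpointing code, and here a valid config is promoted to last-known-good immediately.

```go
package main

import (
	"errors"
	"fmt"
)

// KubeletConfig holds a single illustrative field; the real
// KubeletConfiguration type has many more.
type KubeletConfig struct {
	MaxPods int
}

func validate(c KubeletConfig) error {
	if c.MaxPods <= 0 {
		return errors.New("maxPods must be positive")
	}
	return nil
}

// configManager is a hypothetical stand-in for the Kubelet's checkpoint logic.
type configManager struct {
	active        KubeletConfig
	lastKnownGood KubeletConfig
}

// apply tries a new config. A valid one becomes active and, in this
// simplified model, immediately last-known-good; an invalid one is rejected
// and the Kubelet keeps running on last-known-good.
func (m *configManager) apply(c KubeletConfig) error {
	if err := validate(c); err != nil {
		m.active = m.lastKnownGood
		return fmt.Errorf("rolled back to last-known-good: %w", err)
	}
	m.active = c
	m.lastKnownGood = c
	return nil
}

func main() {
	good := KubeletConfig{MaxPods: 110}
	m := &configManager{active: good, lastKnownGood: good}

	if err := m.apply(KubeletConfig{MaxPods: 150}); err != nil {
		fmt.Println("unexpected:", err)
	}
	fmt.Println("active maxPods:", m.active.MaxPods) // 150

	err := m.apply(KubeletConfig{MaxPods: 0})
	fmt.Println("error:", err)
	fmt.Println("active maxPods:", m.active.MaxPods) // 150
}
```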

The cri-tools project has been declared stable. It provides tools that let system administrators inspect and debug nodes in production regardless of the container runtime in use. Its package (crictl) now ships alongside the other kubeadm utilities (in DEB and RPM formats).

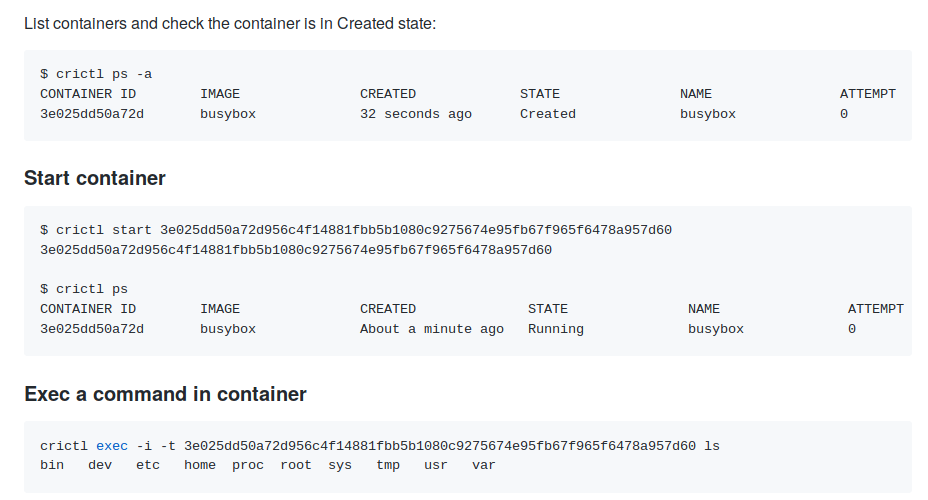

In more detail about the purpose and capabilities of crictl we wrote in a recent article about the integration of containerd with Kubernetes, replacing the “traditional” Docker. Examples of using

Examples of using crictl from project documentationExperimental

support for sysctls on Linux has

been converted to beta status (enabled by default using the

Sysctls feature flag). The

PodSecurityPolicy and

Pod objects have special fields for specifying / controlling

sysctls .
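The checks behind those fields can be sketched as follows: a sysctl on the policy's forbidden list is always rejected, a sysctl from the safe set is allowed, and anything else must match the policy's allowed-unsafe list; entries may end in * as a prefix wildcard. The Go field names mirror the PodSecurityPolicy fields forbiddenSysctls and allowedUnsafeSysctls, but this is a simplified model rather than the admission plugin's code, the safe set is assumed to match the 1.11 defaults, and the Kubelet-side permission for unsafe sysctls is not modelled.

```go
package main

import (
	"fmt"
	"strings"
)

// safeSysctls: assumed default "safe" set as of Kubernetes 1.11.
var safeSysctls = map[string]bool{
	"kernel.shm_rmid_forced":       true,
	"net.ipv4.ip_local_port_range": true,
	"net.ipv4.tcp_syncookies":      true,
}

// Policy mirrors two PodSecurityPolicy fields in simplified form.
type Policy struct {
	AllowedUnsafeSysctls []string
	ForbiddenSysctls     []string
}

// matches supports exact names and a trailing "*" prefix wildcard.
func matches(pattern, name string) bool {
	if strings.HasSuffix(pattern, "*") {
		return strings.HasPrefix(name, strings.TrimSuffix(pattern, "*"))
	}
	return pattern == name
}

func matchesAny(patterns []string, name string) bool {
	for _, p := range patterns {
		if matches(p, name) {
			return true
		}
	}
	return false
}

// admit checks the sysctl names requested in a pod's securityContext.
func admit(p Policy, podSysctls []string) error {
	for _, s := range podSysctls {
		if matchesAny(p.ForbiddenSysctls, s) {
			return fmt.Errorf("sysctl %q is forbidden by policy", s)
		}
		if !safeSysctls[s] && !matchesAny(p.AllowedUnsafeSysctls, s) {
			return fmt.Errorf("unsafe sysctl %q is not allowed by policy", s)
		}
	}
	return nil
}

func main() {
	p := Policy{
		AllowedUnsafeSysctls: []string{"net.core.*"},
		ForbiddenSysctls:     []string{"kernel.shm_rmid_forced"},
	}
	fmt.Println(admit(p, []string{"net.ipv4.tcp_syncookies", "net.core.somaxconn"})) // <nil>
	fmt.Println(admit(p, []string{"kernel.msgmax"}))
	fmt.Println(admit(p, []string{"kernel.shm_rmid_forced"}))
}
```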

ResourceQuota now also lets you specify a priority class (the quota then applies only to pods with that class; see the design proposal for details), and a ContainersReady condition has been added to pod status.
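With a priority-class scope, the quota only counts, and only constrains, pods whose priorityClassName matches the scope selector (in the API this is a scopeSelector expression with scopeName PriorityClass). A minimal Go sketch of that rule, assuming only the In and NotIn operators and a plain pod-count limit; the types are hypothetical simplifications.

```go
package main

import "fmt"

// Pod keeps only the field the scope check needs.
type Pod struct {
	Name          string
	PriorityClass string
}

// Quota is a hypothetical, simplified priority-class-scoped quota.
type Quota struct {
	Operator string   // "In" or "NotIn" in this sketch
	Values   []string // priority class names
	MaxPods  int
}

func contains(xs []string, s string) bool {
	for _, x := range xs {
		if x == s {
			return true
		}
	}
	return false
}

// applies reports whether the pod falls under the quota's scope.
func (q Quota) applies(p Pod) bool {
	switch q.Operator {
	case "In":
		return contains(q.Values, p.PriorityClass)
	case "NotIn":
		return !contains(q.Values, p.PriorityClass)
	default:
		return false
	}
}

// admit rejects a new pod only if it is in scope and would exceed MaxPods;
// pods outside the scope are neither counted nor limited.
func admit(q Quota, existing []Pod, newPod Pod) bool {
	if !q.applies(newPod) {
		return true
	}
	used := 0
	for _, p := range existing {
		if q.applies(p) {
			used++
		}
	}
	return used+1 <= q.MaxPods
}

func main() {
	q := Quota{Operator: "In", Values: []string{"high"}, MaxPods: 2}
	running := []Pod{{"a", "high"}, {"b", "high"}, {"c", "low"}}

	fmt.Println(admit(q, running, Pod{"d", "high"})) // false: quota for "high" is full
	fmt.Println(admit(q, running, Pod{"e", "low"}))  // true: out of scope
}
```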

Access control and security

The ClusterRole Aggregation feature, introduced in K8s 1.9, which lets you add permissions to existing roles (including automatically created ones), has been declared stable with no changes. A dedicated role (ClusterRole) for cluster-autoscaler has also been added; it is used instead of the system cluster-admin role.
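Aggregation works through label selectors: a ClusterRole with an aggregationRule lists clusterRoleSelectors, and a controller keeps its rules equal to the union of the rules of all ClusterRoles matching any of those selectors (the built-in admin, edit and view roles use labels such as rbac.authorization.k8s.io/aggregate-to-view: "true"). A simplified Go model of that union, supporting only matchLabels-style selectors; the crontab-viewer role and its API group are hypothetical.

```go
package main

import "fmt"

// PolicyRule is a trimmed-down RBAC rule.
type PolicyRule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

type ClusterRole struct {
	Name   string
	Labels map[string]string
	Rules  []PolicyRule
}

// matchLabels: every key in the selector must be present with the same value.
func matchLabels(selector, labels map[string]string) bool {
	for k, v := range selector {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// aggregate returns the de-duplicated union of rules from every role that
// matches at least one selector.
func aggregate(selectors []map[string]string, roles []ClusterRole) []PolicyRule {
	var out []PolicyRule
	seen := map[string]bool{}
	for _, role := range roles {
		for _, sel := range selectors {
			if !matchLabels(sel, role.Labels) {
				continue
			}
			for _, r := range role.Rules {
				key := fmt.Sprint(r) // slices are not comparable, so key on the printed form
				if !seen[key] {
					seen[key] = true
					out = append(out, r)
				}
			}
			break // a role contributes once even if several selectors match
		}
	}
	return out
}

func main() {
	viewSelector := map[string]string{"rbac.authorization.k8s.io/aggregate-to-view": "true"}
	roles := []ClusterRole{
		{
			Name:   "crontab-viewer", // hypothetical role shipped alongside a CRD
			Labels: map[string]string{"rbac.authorization.k8s.io/aggregate-to-view": "true"},
			Rules: []PolicyRule{{
				APIGroups: []string{"stable.example.com"},
				Resources: []string{"crontabs"},
				Verbs:     []string{"get", "list", "watch"},
			}},
		},
		{Name: "unrelated", Labels: map[string]string{"team": "infra"}},
	}
	for _, r := range aggregate([]map[string]string{viewSelector}, roles) {
		fmt.Println(r.Resources, r.Verbs) // [crontabs] [get list watch]
	}
}
```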

A series of changes improves transparency into what happens inside the cluster (and why). In particular, RBAC information in audit logs now includes additional event annotations:

- authorization.k8s.io/decision: allow or forbid;
- authorization.k8s.io/reason: a human-readable reason for the decision.

Audit logs also now record PodSecurityPolicy admission information in the annotations podsecuritypolicy.admission.k8s.io/admit-policy and podsecuritypolicy.admission.k8s.io/validate-policy (which policy admitted the pod?).
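These annotations end up in the annotations map of each audit event, so they can be extracted with ordinary JSON tooling. A small Go sketch that decodes one event and prints the decision, the reason and the admitting PodSecurityPolicy; the event is hypothetical and heavily trimmed (real events carry many more fields), and its reason text is a placeholder, not the API server's exact wording.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// auditEvent keeps only the fields used here.
type auditEvent struct {
	Verb        string            `json:"verb"`
	Annotations map[string]string `json:"annotations"`
}

// Hypothetical, trimmed audit event for illustration.
const sample = `{
  "verb": "create",
  "annotations": {
    "authorization.k8s.io/decision": "allow",
    "authorization.k8s.io/reason": "placeholder reason text",
    "podsecuritypolicy.admission.k8s.io/admit-policy": "restricted"
  }
}`

// summarize decodes one event and formats the annotations of interest.
func summarize(raw []byte) (string, error) {
	var e auditEvent
	if err := json.Unmarshal(raw, &e); err != nil {
		return "", err
	}
	a := e.Annotations
	return fmt.Sprintf("%s: decision=%s reason=%q psp=%s",
		e.Verb,
		a["authorization.k8s.io/decision"],
		a["authorization.k8s.io/reason"],
		a["podsecuritypolicy.admission.k8s.io/admit-policy"]), nil
}

func main() {
	s, err := summarize([]byte(sample))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(s) // create: decision=allow reason="placeholder reason text" psp=restricted
}
```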

Console utilities

Kubernetes CLI tools received many improvements (not the most significant ones, but useful!):

- The new command

kubectl wait to kubectl wait for the moment when the specified resources are deleted or a certain condition is reached. - New

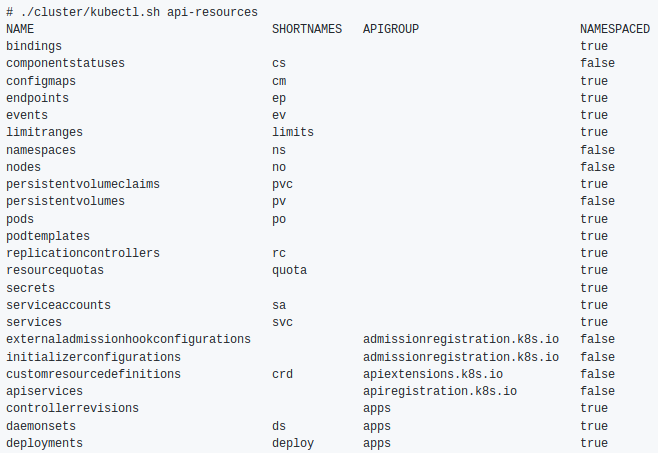

kubectl api-resources command to view resources:

kubectl cp support for kubectl cp .- In the kubectl go templates, the base64decode function has become available, the name of which speaks for itself.

- Support for the

--dry-run flag has --dry-run added to the kubectl patch . - The

--match-server-version flag --match-server-version become global - the kubectl version will also take it into account. - The

kubectl config view --minify now takes into account the global context flag. - Support for

CronJob resources has CronJob added to kubectl apply --prune CronJob .
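kubectl's go-template output is built on Go's text/template package, so the new base64decode function can be illustrated with the standard library alone. The sketch below registers its own helper under the same name (our stand-in, not kubectl's code) and applies it to a Secret-like map, the typical use case: reading a value from a Secret's data without piping through a separate base64 decoder.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"strings"
	"text/template"
)

// base64decode is our own stand-in for the template function kubectl added.
func base64decode(s string) (string, error) {
	b, err := base64.StdEncoding.DecodeString(s)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

// render executes a go-template against decoded JSON-like data.
func render(text string, data interface{}) (string, error) {
	t, err := template.New("out").
		Funcs(template.FuncMap{"base64decode": base64decode}).
		Parse(text)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, data); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	// A Secret as generic data, as it looks after decoding the API's JSON.
	secret := map[string]interface{}{
		"data": map[string]interface{}{"password": "czNjcjN0"},
	}
	out, err := render(`{{.data.password | base64decode}}`, secret)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out) // s3cr3t
}
```

With kubectl 1.11 itself, the same template would be passed along the lines of kubectl get secret mysecret -o go-template='{{.data.password | base64decode}}', where mysecret is a hypothetical Secret name.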

Other changes

- The scheduler (kube-scheduler) can now schedule DaemonSet pods (alpha).
- A primary group ID (RunAsGroup) can now be specified for containers in a pod (alpha).
- Orphan deletion is now supported for CustomResources.
- Improved Kubernetes API support for pods and containers on Windows: metrics for pods, containers and the log file system, the run_as_user security context, local persistent volumes, and fstype for Azure Disk.
- Azure: added support for the Standard SKU load balancer and public IPs.

Compatibility

- The supported etcd version is 3.2.18 (it was 3.1.12 in Kubernetes 1.10).

- Validated Docker versions range from 1.11.2 to 1.13.1, plus 17.03.x (unchanged since K8s 1.10).

- Go version: 1.10.2 (previously 1.9.3); minimum supported: 1.9.1.

- CNI version: 0.6.0.

- CSI version: 0.3.0 (previously 0.2.0).

PS

Read also in our blog: