The history of the industrial economy is the history of consuming a limited resource. Electricity had a pronounced evening peak, so the owners of power plants lobbied for modern urban transport and, in effect, created the consumer electronics industry from scratch. That is, they changed the lifestyle of millions of people so that the power plants would be loaded more evenly.

Computing resources follow a similar story: few people use them fully efficiently. Let's talk about operations and, a little, about the next generation of programming for environments where resources must be shared very flexibly.

Seasonal consumption

For a typical customer, seasonal consumption looks like 11 months of calm and one month of double or triple load. Everyone knows their own peak. Retail freezes all changes for December and the New Year sales and orders extra virtual machines. Everyone has their own season of discounts and big sales: September 1 for some, gifts for March 8 for others, and so on. All B2C services, Internet banks for example, also have predictable activity that simply follows working hours. We at Technoserv Cloud do not schedule maintenance for these periods.

Geologists come home from an expedition and start crunching the numbers on their mineral finds in the cloud. In large companies, reports are gathered from a heap of subsystems at the end of each period: in some places efficiently, in others with difficulty, almost to the point of taking the database down. Machine learning and analytics generate very heavy workloads, but not constantly.

Summer peaks belong to the travel industry. It does not see significant jumps in load, but it does see seasonal DDoS attacks, usually when people go to buy tickets for the May holidays.

Normal consumption

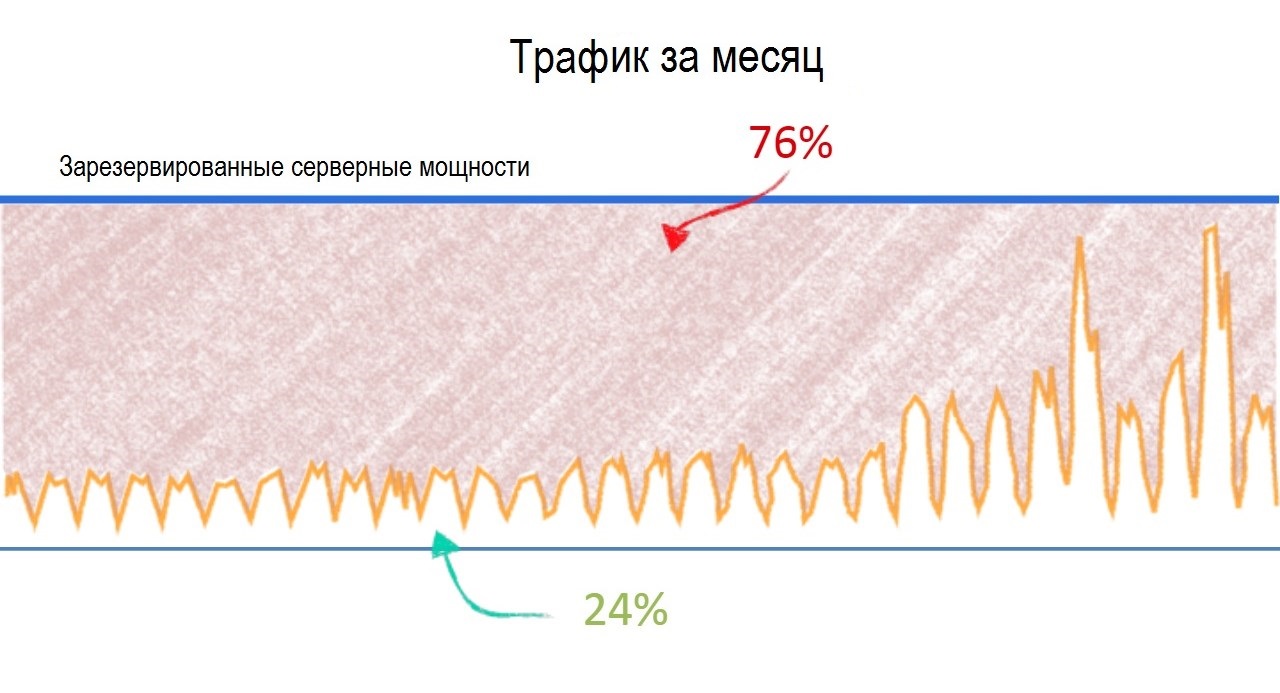

The average customer in our cloud consumes resources in a daily sawtooth: load starts rising in the morning with the beginning of the working day, dips briefly at lunch, and drops by a factor of 2-3 in the evening. At night the system tasks run: backups, data transfers to analytics and, in retail, warehouse and logistics inventory calculations and sales forecasts. Finance has its various credit-history jobs and the like. We host no core banking systems (ABS) in the cloud; those, like the systems of mobile operators and companies such as railways, run clearing at night, that is, they reconcile the daily balance across all operations. Roughly speaking, instead of settling every transaction one by one, similar transactions are collected into batches and netted against each other over a period.
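The netting idea behind nightly clearing can be illustrated with a minimal sketch. The party names, the amounts and the `net_batch` helper are invented for illustration and are not taken from any real clearing system; amounts are assumed to be integers in minor currency units.

```python
from collections import defaultdict


def net_batch(transactions):
    """Net a batch of (payer, payee, amount) transfers into one position per party.

    Hypothetical helper: a negative position means the party owes money,
    a positive one means the party is owed money.
    """
    balances = defaultdict(int)
    for payer, payee, amount in transactions:
        balances[payer] -= amount
        balances[payee] += amount
    # Parties whose transfers cancelled out completely need no settlement.
    return {party: total for party, total in balances.items() if total != 0}


# One day's transfers between three made-up parties.
day = [
    ("A", "B", 100),
    ("B", "A", 70),
    ("B", "C", 50),
    ("C", "A", 20),
]
positions = net_batch(day)
print(positions)
```

Four individual transfers collapse into three net positions that sum to zero, which is why this kind of work is naturally done as one batch job at night rather than transaction by transaction.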

In general, this is the usual office sawtooth. The most advanced admins already schedule scaling around it, but so far we see only isolated examples.

In the simplest case it looks like this: bring up another virtual machine at 11:00, add it and its services to the load balancer, carefully drain it by 19:00 and shut it down. You then pay for only a third of the day, while at peak you have the same capacity as if you rented the additional virtual machine permanently.
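The "third of the day" figure is simple arithmetic under hourly billing. The sketch below assumes that every started hour is charged in full; that rounding rule, the `billed_hours` helper and the calendar date are assumptions made for illustration, not the provider's actual billing logic.

```python
import math
from datetime import datetime


def billed_hours(start: datetime, stop: datetime) -> int:
    """Hours charged for one VM run, assuming every started hour is billed in full."""
    seconds = (stop - start).total_seconds()
    if seconds <= 0:
        return 0
    return math.ceil(seconds / 3600)


# The schedule from the text: up at 11:00, down at 19:00 (the date is arbitrary).
start = datetime(2024, 1, 15, 11, 0)
stop = datetime(2024, 1, 15, 19, 0)

hours = billed_hours(start, stop)
share_of_day = hours / 24
print(hours, round(share_of_day, 2))
```

Under the same assumption, shutting the machine down five minutes late, at 19:05, would be billed as nine hours, so a scheduled shutdown is best aligned to the hour boundary.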

Paying for only part of the day works because we bill by the hour. Our customers are often interested in another variation of this scenario: autoscaling. When resource consumption rises to 80%, another machine is started; when it falls below some limit, the machine is shut down. Contractually we offer pay-as-you-go, that is, post-payment for the resources actually consumed, with VMs billed in hourly increments. In the console you can bring up a given number of machines yourself. The admin either logs in and does it by hand under load (for example, on Black Friday, when traffic to the site grows) or writes a script that starts and stops VMs.

One company, which develops, roughly speaking, banking applications, needs autoscaling for testing and for peaks of heavy database processing. Their architecture is very well suited to automation, and with them we are testing a fully automated system in which the cloud itself allocates the required number of VMs. "Itself" here means through a separate witness server (a dedicated VM or an external machine) that manages load, application distribution and balancing through the API. They get autoscaling first and help us set priorities correctly, while in effect working as our testers. They get a product tailored to their needs very cheaply: we do not yet offer this as a ready-made add-on service in the cloud, but we are experimenting. So far everything has been done manually, and this alpha comes on top of that: we have all the reports and the detailed conversations between our product manager and their developers to work from. This is how a product is born that every team like theirs will need.
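The threshold rule described above can be sketched as a small decision function. Only the 80% scale-up threshold comes from the text; the 30% scale-down limit, the VM count bounds and the name `desired_vm_count` are assumptions for illustration. A real script would pass the result to the cloud API, which is deliberately left out here.

```python
def desired_vm_count(current: int, load: float,
                     scale_up_at: float = 0.80,    # threshold from the text
                     scale_down_at: float = 0.30,  # assumed lower limit
                     min_vms: int = 1, max_vms: int = 10) -> int:
    """Return how many VMs should be running after one load measurement."""
    if load >= scale_up_at:
        return min(current + 1, max_vms)
    if load <= scale_down_at:
        return max(current - 1, min_vms)
    # Between the two thresholds nothing changes, which avoids flapping.
    return current


# A made-up day of load samples, as a fraction of available capacity.
loads = [0.55, 0.85, 0.90, 0.60, 0.25, 0.20, 0.10]
vms = 1
history = []
for load in loads:
    vms = desired_vm_count(vms, load)
    history.append(vms)
print(history)
```

The gap between the two thresholds is a design choice: with hourly billing, a machine that is started and stopped repeatedly around a single threshold could cost more than one that simply stays up.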

Architectural features of the software

Why do so few admins use autoscaling, even though it is more cost-effective? Because scaling load properly in the cloud requires an application and database architecture prepared for it. A web application is usually easy to scale out, but writes to the database are a more interesting question. Not every application splits cleanly into front end and back end, or into microservices, so that the parts can be spread across different machines.

Those who have such an architecture do, of course, save money. They also gain stability, which we wrote about in our post on frequent architecture errors.

Naturally, the software has to be rewritten for this, which cannot always be done quickly and sometimes cannot be done at all.

But the next generation of the cloud story looks even more interesting.

Container Virtualization

The next generation is containers. Today they look like lightweight virtual machines running microservices that start when accessed and are unloaded from RAM when idle. In other words, they surface and get evicted as they are used, much like applications in the RAM and swap of a modern operating system. It sounds simple, and many large players have already rewritten their architecture for containers.

That is, they are not even separate VMs but simply processes running on them, something like Citrix XenApp applications. Or it is the good old VM in a container shell, that is, the same machines with deep autoscaling.

But this is only the first step. What comes next is even more interesting: the containerization of functions. For now this is an architects' fantasy, but if you write code specifically for such a pop-up container system, you can wrap every single function in its own container. There is an input, an output and a “black box”, the container itself. A function is called in the code, its container “surfaces”, does its work and goes back to waiting for the next call.
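To make the "one function per container" idea concrete, here is a toy in-process model. It runs no real containers: the `FunctionContainer` class, its idle timeout and the explicit `now` timestamps are all hypothetical, chosen only to show the surface, work and unload cycle.

```python
class FunctionContainer:
    """Toy model of a container that wraps exactly one function."""

    def __init__(self, func, idle_timeout: float):
        self.func = func
        self.idle_timeout = idle_timeout  # seconds of inactivity before unloading
        self.loaded = False
        self.cold_starts = 0
        self.last_used = None

    def call(self, now: float, *args):
        if not self.loaded:
            # The container "surfaces" only when the function is actually called.
            self.loaded = True
            self.cold_starts += 1
        self.last_used = now
        return self.func(*args)

    def evict_if_idle(self, now: float) -> bool:
        """Unload the container once it has been idle for idle_timeout seconds."""
        if self.loaded and now - self.last_used >= self.idle_timeout:
            self.loaded = False
            return True
        return False


def square(x: int) -> int:
    return x * x


box = FunctionContainer(square, idle_timeout=60.0)
first = box.call(0.0, 3)            # cold start: the container is loaded
second = box.call(10.0, 4)          # warm call: the container is already loaded
evicted = box.evict_if_idle(100.0)  # 90 seconds idle, so it is unloaded
third = box.call(120.0, 5)          # cold start again
print(first, second, third, box.cold_starts)
```

The caller sees only input and output, while the cost of the model shows up in the cold-start counter: every call after a long idle period pays for loading the container again.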

All the code will, of course, have to be refactored and re-optimized. But it is worth it, and this is one possible branch of the future. By our estimates it is admittedly a distant one: at least three years until the first implementations by the giants, and 15 years until industrial use in Russia.

In the meantime containers are, alas, a product for geeks, because they do not work very well yet. There are no requests for them in Russia, but there are requests for autoscaling, and no new contract gets signed without pay-as-you-go.