At the datafest 2 in Minsk, Vladimir Iglovikov, a machine vision engineer at Lyft,

explained quite remarkably that the best way to learn Data Science is to take part in competitions, run someone else's solutions, combine them, achieve results and show your work. Actually, within the framework of this paradigm, I decided to take a closer look at the Home Credit credit risk assessment

competition and explain (to dateists to beginners and, first of all, to myself) how to analyze such data correctly and build models for them.

(picture

from here )

Home Credit Group is a group of banks and non-bank credit organizations that operates in 11 countries (including Russia as Home Credit and Finance Bank LLC). The goal of the competition is to create a methodology for assessing the creditworthiness of borrowers who do not have a credit history. What looks rather noble is that borrowers of this category often cannot get any credit from a bank and are forced to turn to scammers and microloans. Interestingly, the customer does not expose requirements for transparency and interpretability of the model (as is usually the case in banks), you can use anything, even neural networks.

The training sample consists of 300+ thousand records, there are a lot of signs - 122, among them there are many categorical (not numeric). Signs describe the borrower in some detail, right down to the material from which the walls of his dwelling are made. Part of the data is contained in 6 additional tables (data on the credit bureau, credit card balance and previous loans), these data must also be somehow processed and uploaded to the main one.

Competition looks like a standard classification task (1 in the TARGET field means any difficulties with payments, 0 means no difficulties). However, one should not predict 0/1, but the probability of problems (which, however, quite easily solve the probability predict_proba prediction methods that all complex models have).

At first glance, it’s pretty standard for machine learning tasks, the organizers offered a large prize of $ 70k, as a result, more than 2,600 teams are already participating in the competition, and the battle is taking place for thousandths of a percent. However, on the other hand, such popularity means that dataset has been studied far and wide and created many kernels with good EDA (Exploratory Data Analisys - research and analysis of data in the network, including graphical), Feature engineering (work with features) and with interesting models. (Kernel is an example of working with a dataset that anyone can post to show their work to other cahglers.)

Kernels deserve attention:

To work with the data is usually recommended the following plan, which we will try to follow.

- Understanding the problem and familiarization with the data

- Data cleaning and formatting

- EDA

- Basic model

- Model improvement

- Model Interpretation

In this case, you need to take the amendment to the fact that the data are quite extensive and you can not overpower it at once, it makes sense to act in stages.

Let's start with the import of libraries that we need in the analysis for working with data in the form of tables, graphing and working with matrices.

import pandas as pd import matplotlib.pyplot as plt import numpy as np import seaborn as sns %matplotlib inline

Load the data. Let's see what we all have. Such an arrangement in the "../input/" directory, by the way, is related to the requirement for placing its Kernels on Kaggle.

import os PATH="../input/" print(os.listdir(PATH))

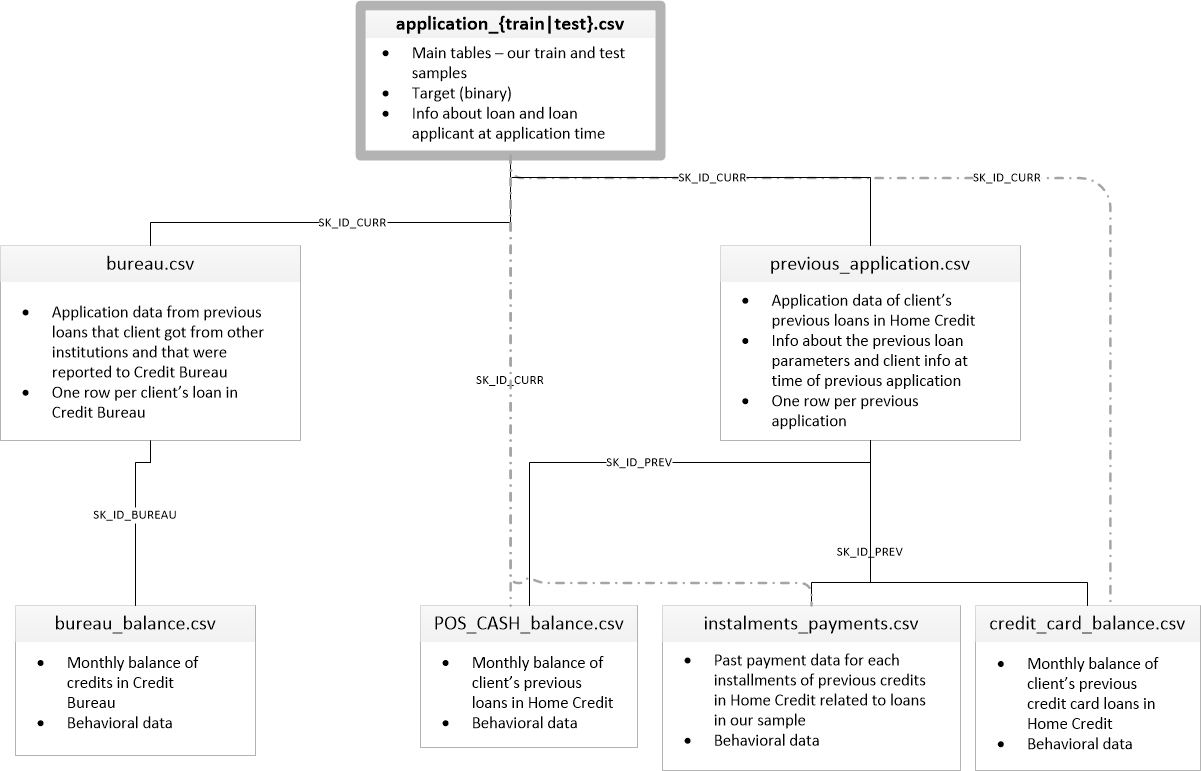

['application_test.csv', 'application_train.csv', 'bureau.csv', 'bureau_balance.csv', 'credit_card_balance.csv', 'HomeCredit_columns_description.csv', 'installments_payments.csv', 'POS_CASH_balance.csv', 'previous_application.csv']There are 8 data tables (not counting the HomeCredit_columns_description.csv table, which contains the description of the fields), which are related as follows:

application_train / application_test: Basic data, the borrower is identified by the SK_ID_CURR field

bureau: Data on previous loans in other credit institutions from the credit bureau

bureau_balance: Monthly data on previous loans by bureau. Each line is the month of using the loan.

previous_application: Previous applications for loans in Home Credit, each has a unique field SK_ID_PREV

POS_CASH_BALANCE: Monthly data on loans in Home Credit with cash and loans for purchases of goods

credit_card_balance: Monthly credit card balance data in Home Credit

installments_payment: Billing history of previous loans in Home Credit.

Let's focus for a start on the main data source and see what information can be extracted from it and which models to build. Download the basic data.

- app_train = pd.read_csv (PATH + 'application_train.csv',)

- app_test = pd.read_csv (PATH + 'application_test.csv',)

- print ("learning sample format:", app_train.shape)

- print ("test sample format:", app_test.shape)

- format of the training sample: (307511, 122)

- test sample format: (48744, 121)

Total we have 307 thousand records and 122 signs in the training sample and 49 thousand records and 121 signs in the test. The discrepancy is obviously caused by the fact that there is no target TARGET feature in the test sample, which we will predict.

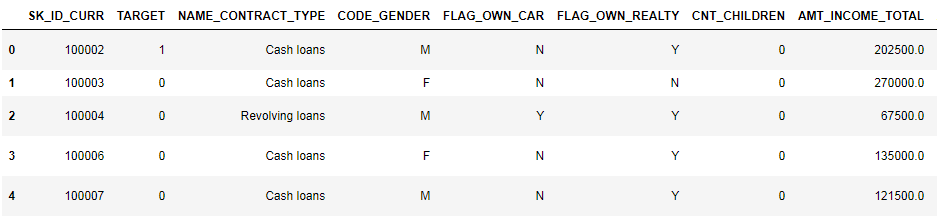

Look at the data carefully

pd.set_option('display.max_columns', None)

(first 8 columns shown)

It is quite difficult to watch data in this format. Let's look at the list of columns:

app_train.info(max_cols=122)

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 307511 entries, 0 to 307510

Data columns (total 122 columns):

SK_ID_CURR 307511 non-null int64

TARGET 307511 non-null int64

NAME_CONTRACT_TYPE 307511 non-null object

CODE_GENDER 307511 non-null object

FLAG_OWN_CAR 307511 non-null object

FLAG_OWN_REALTY 307511 non-null object

CNT_CHILDREN 307511 non-null int64

AMT_INCOME_TOTAL 307511 non-null float64

AMT_CREDIT 307511 non-null float64

AMT_ANNUITY 307499 non-null float64

AMT_GOODS_PRICE 307233 non-null float64

NAME_TYPE_SUITE 306219 non-null object

NAME_INCOME_TYPE 307511 non-null object

NAME_EDUCATION_TYPE 307511 non-null object

NAME_FAMILY_STATUS 307511 non-null object

NAME_HOUSING_TYPE 307511 non-null object

REGION_POPULATION_RELATIVE 307511 non-null float64

DAYS_BIRTH 307511 non-null int64

DAYS_EMPLOYED 307511 non-null int64

DAYS_REGISTRATION 307511 non-null float64

DAYS_ID_PUBLISH 307511 non-null int64

OWN_CAR_AGE 104582 non-null float64

FLAG_MOBIL 307511 non-null int64

FLAG_EMP_PHONE 307511 non-null int64

FLAG_WORK_PHONE 307511 non-null int64

FLAG_CONT_MOBILE 307511 non-null int64

FLAG_PHONE 307511 non-null int64

FLAG_EMAIL 307511 non-null int64

OCCUPATION_TYPE 211120 non-null object

CNT_FAM_MEMBERS 307509 non-null float64

REGION_RATING_CLIENT 307511 non-null int64

REGION_RATING_CLIENT_W_CITY 307511 non-null int64

WEEKDAY_APPR_PROCESS_START 307511 non-null object

HOUR_APPR_PROCESS_START 307511 non-null int64

REG_REGION_NOT_LIVE_REGION 307511 non-null int64

REG_REGION_NOT_WORK_REGION 307511 non-null int64

LIVE_REGION_NOT_WORK_REGION 307511 non-null int64

REG_CITY_NOT_LIVE_CITY 307511 non-null int64

REG_CITY_NOT_WORK_CITY 307511 non-null int64

LIVE_CITY_NOT_WORK_CITY 307511 non-null int64

ORGANIZATION_TYPE 307511 non-null object

EXT_SOURCE_1 134133 non-null float64

EXT_SOURCE_2 306851 non-null float64

EXT_SOURCE_3 246546 non-null float64

APARTMENTS_AVG 151450 non-null float64

BASEMENTAREA_AVG 127568 non-null float64

YEARS_BEGINEXPLUATATION_AVG 157504 non-null float64

YEARS_BUILD_AVG 103023 non-null float64

COMMONAREA_AVG 92646 non-null float64

ELEVATORS_AVG 143620 non-null float64

ENTRANCES_AVG 152683 non-null float64

FLOORSMAX_AVG 154491 non-null float64

FLOORSMIN_AVG 98869 non-null float64

LANDAREA_AVG 124921 non-null float64

LIVINGAPARTMENTS_AVG 97312 non-null float64

LIVINGAREA_AVG 153161 non-null float64

NONLIVINGAPARTMENTS_AVG 93997 non-null float64

NONLIVINGAREA_AVG 137829 non-null float64

APARTMENTS_MODE 151450 non-null float64

BASEMENTAREA_MODE 127568 non-null float64

YEARS_BEGINEXPLUATATION_MODE 157504 non-null float64

YEARS_BUILD_MODE 103023 non-null float64

COMMONAREA_MODE 92646 non-null float64

ELEVATORS_MODE 143620 non-null float64

ENTRANCES_MODE 152683 non-null float64

FLOORSMAX_MODE 154491 non-null float64

FLOORSMIN_MODE 98869 non-null float64

LANDAREA_MODE 124921 non-null float64

LIVINGAPARTMENTS_MODE 97312 non-null float64

LIVINGAREA_MODE 153161 non-null float64

NONLIVINGAPARTMENTS_MODE 93997 non-null float64

NONLIVINGAREA_MODE 137829 non-null float64

APARTMENTS_MEDI 151450 non-null float64

BASEMENTAREA_MEDI 127568 non-null float64

YEARS_BEGINEXPLUATATION_MEDI 157504 non-null float64

YEARS_BUILD_MEDI 103023 non-null float64

COMMONAREA_MEDI 92646 non-null float64

ELEVATORS_MEDI 143620 non-null float64

ENTRANCES_MEDI 152683 non-null float64

FLOORSMAX_MEDI 154491 non-null float64

FLOORSMIN_MEDI 98869 non-null float64

LANDAREA_MEDI 124921 non-null float64

LIVINGAPARTMENTS_MEDI 97312 non-null float64

LIVINGAREA_MEDI 153161 non-null float64

NONLIVINGAPARTMENTS_MEDI 93997 non-null float64

NONLIVINGAREA_MEDI 137829 non-null float64

FONDKAPREMONT_MODE 97216 non-null object

HOUSETYPE_MODE 153214 non-null object

TOTALAREA_MODE 159080 non-null float64

WALLSMATERIAL_MODE 151170 non-null object

EMERGENCYSTATE_MODE 161756 non-null object

OBS_30_CNT_SOCIAL_CIRCLE 306490 non-null float64

DEF_30_CNT_SOCIAL_CIRCLE 306490 non-null float64

OBS_60_CNT_SOCIAL_CIRCLE 306490 non-null float64

DEF_60_CNT_SOCIAL_CIRCLE 306490 non-null float64

DAYS_LAST_PHONE_CHANGE 307510 non-null float64

FLAG_DOCUMENT_2 307511 non-null int64

FLAG_DOCUMENT_3 307511 non-null int64

FLAG_DOCUMENT_4 307511 non-null int64

FLAG_DOCUMENT_5 307511 non-null int64

FLAG_DOCUMENT_6 307511 non-null int64

FLAG_DOCUMENT_7 307511 non-null int64

FLAG_DOCUMENT_8 307511 non-null int64

FLAG_DOCUMENT_9 307511 non-null int64

FLAG_DOCUMENT_10 307511 non-null int64

FLAG_DOCUMENT_11 307511 non-null int64

FLAG_DOCUMENT_12 307511 non-null int64

FLAG_DOCUMENT_13 307511 non-null int64

FLAG_DOCUMENT_14 307511 non-null int64

FLAG_DOCUMENT_15 307511 non-null int64

FLAG_DOCUMENT_16 307511 non-null int64

FLAG_DOCUMENT_17 307511 non-null int64

FLAG_DOCUMENT_18 307511 non-null int64

FLAG_DOCUMENT_19 307511 non-null int64

FLAG_DOCUMENT_20 307511 non-null int64

FLAG_DOCUMENT_21 307511 non-null int64

AMT_REQ_CREDIT_BUREAU_HOUR 265992 non-null float64

AMT_REQ_CREDIT_BUREAU_DAY 265992 non-null float64

AMT_REQ_CREDIT_BUREAU_WEEK 265992 non-null float64

AMT_REQ_CREDIT_BUREAU_MON 265992 non-null float64

AMT_REQ_CREDIT_BUREAU_QRT 265992 non-null float64

AMT_REQ_CREDIT_BUREAU_YEAR 265992 non-null float64

dtypes: float64(65), int64(41), object(16)

memory usage: 286.2+ MBI recall detailed annotations on the fields - in the file HomeCredit_columns_description. As you can see from info, part of the data is incomplete and part is categorical, they are displayed as object. Most models with such data do not work, we have to do something about it. At this initial analysis can be considered complete, go directly to the EDA

Exploratory Data Analysis or primary data mining

In the EDA process, we consider basic statistics and draw graphs to find trends, anomalies, patterns, and relationships within the data. The purpose of an EDA is to find out what the data can tell. Usually the analysis goes from top to bottom - from a general overview to the study of individual zones that attract attention and may be of interest. Subsequently, these findings can be used in the construction of the model, the choice of features for it and in its interpretation.

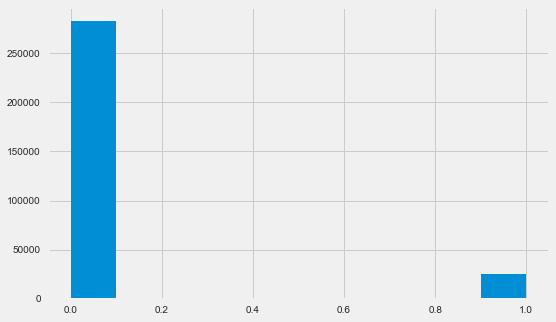

Distribution of target variable

app_train.TARGET.value_counts()

0 282686

1 24825

Name: TARGET, dtype: int64 plt.style.use('fivethirtyeight') plt.rcParams["figure.figsize"] = [8,5] plt.hist(app_train.TARGET) plt.show()

Let me remind you that 1 means problems of any kind with a return, 0 means no problems. As you can see, mostly borrowers have no problems with return, the proportion of problem ones is about 8%. This means that the classes are not balanced and this may need to be taken into account when building the model.

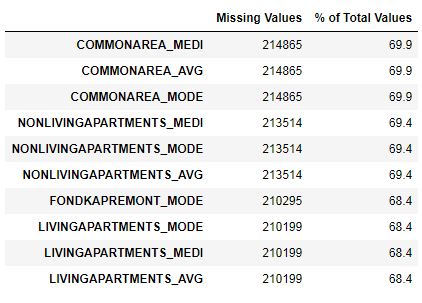

Research missing data

We have seen that the lack of data is quite substantial. Let's see in more detail where and what is missing.

122 .

67 .

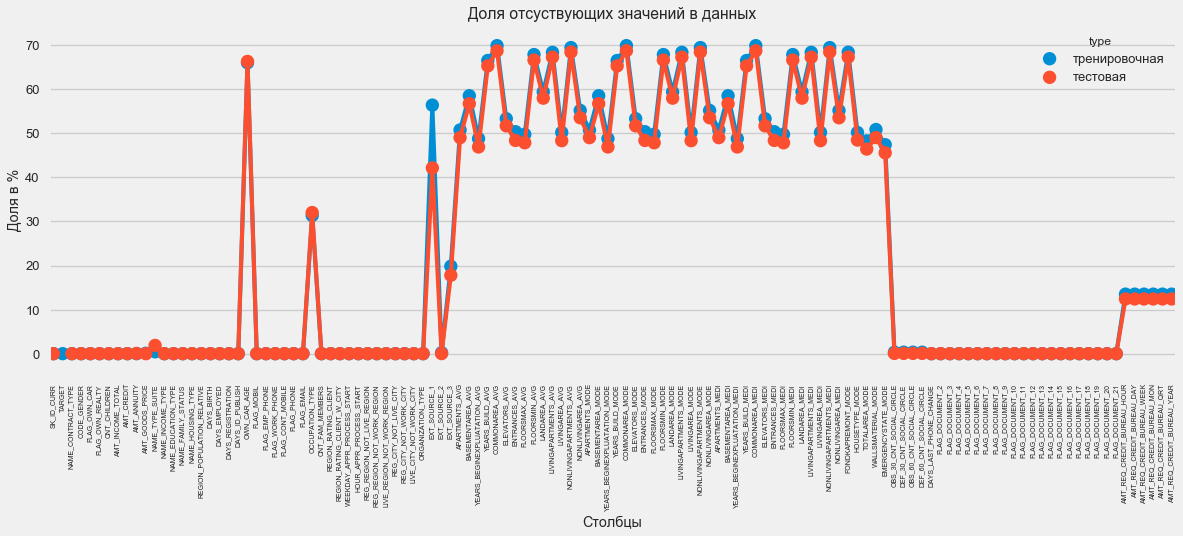

In graphic format:

plt.style.use('seaborn-talk') fig = plt.figure(figsize=(18,6)) miss_train = pd.DataFrame((app_train.isnull().sum())*100/app_train.shape[0]).reset_index() miss_test = pd.DataFrame((app_test.isnull().sum())*100/app_test.shape[0]).reset_index() miss_train["type"] = "" miss_test["type"] = "" missing = pd.concat([miss_train,miss_test],axis=0) ax = sns.pointplot("index",0,data=missing,hue="type") plt.xticks(rotation =90,fontsize =7) plt.title(" ") plt.ylabel(" %") plt.xlabel("")

There are many answers to the question “what to do with all this”. You can fill it with zeros, you can use median values, you can just delete strings without the necessary information. It all depends on the model that we plan to use, as some perfectly cope with the missing values. For now, remember this fact and leave everything as it is.

Types of columns and coding of categorical data

As we remember. part of the columns is of type object, that is, it has not a numeric value, but reflects some category. Let's look at these columns more closely.

app_train.dtypes.value_counts()

float64 65

int64 41

object 16

dtype: int64 app_train.select_dtypes(include=[object]).apply(pd.Series.nunique, axis = 0)

NAME_CONTRACT_TYPE 2

CODE_GENDER 3

FLAG_OWN_CAR 2

FLAG_OWN_REALTY 2

NAME_TYPE_SUITE 7

NAME_INCOME_TYPE 8

NAME_EDUCATION_TYPE 5

NAME_FAMILY_STATUS 6

NAME_HOUSING_TYPE 6

OCCUPATION_TYPE 18

WEEKDAY_APPR_PROCESS_START 7

ORGANIZATION_TYPE 58

FONDKAPREMONT_MODE 4

HOUSETYPE_MODE 3

WALLSMATERIAL_MODE 7

EMERGENCYSTATE_MODE 2

dtype: int64We have 16 columns, in each of which from 2 to 58 different options of values. In general, machine learning models cannot do anything with such columns (except for some, such as LightGBM or CatBoost). Since we plan to try out different models on dataset, we need to do something about it. There are basically two approaches here:

- Label Encoding - categories are assigned numbers 0, 1, 2, and so on, and are recorded in the same column.

- One-Hot-encoding - one column is decomposed into several by the number of options and in these columns it is noted which option is in this record.

Of the popular ones, the

target target encoding is also worth noting (thanks to the

roryorangepants for clarifying).

There is a small problem with Label Encoding - it assigns numeric values that have nothing to do with reality. For example, if we are dealing with a numerical value, then the borrower's income of 100,000 is definitely greater and better than the income of 20,000. But can we say that, for example, one city is better than another because one is assigned the value 100 and the other is 200 ?

One-Hot-encoding, on the other hand, is safer, but can produce “extra” columns. For example, if we encode the same gender with One-Hot, we will have two columns, “male for gender” and “female for gender”, although one would suffice, “is it a man”.

On good for this dataset, it would be necessary to encode the signs with low variability with the help of Label Encoding, and everything else - One-Hot, but to simplify, we will encode everything on One-Hot. On the speed of calculation and the result is almost no effect. The pandas encoding process itself is very simple.

app_train = pd.get_dummies(app_train) app_test = pd.get_dummies(app_test) print('Training Features shape: ', app_train.shape) print('Testing Features shape: ', app_test.shape)

Training Features shape: (307511, 246)

Testing Features shape: (48744, 242)Since the number of options in the sample columns is not equal, the number of columns now does not match. Alignment is required - it is necessary to remove columns that are not in the test one from the training sample. This makes the align method, you need to specify axis = 1 (for columns).

: (307511, 242)

: (48744, 242)Data correlation

A good way to understand the data is to calculate the Pearson correlation coefficients for the data relative to the target feature. This is not the best method to show the relevance of features, but it is simple and allows you to get an idea of the data. Interpret the coefficients as follows:

- 00-.19 “very weak”

- 20-.39 “weak”

- 40-.59 “average”

- 60-.79 “strong”

- 80-1.0 “very strong”

:

DAYS_REGISTRATION 0.041975

OCCUPATION_TYPE_Laborers 0.043019

FLAG_DOCUMENT_3 0.044346

REG_CITY_NOT_LIVE_CITY 0.044395

FLAG_EMP_PHONE 0.045982

NAME_EDUCATION_TYPE_Secondary / secondary special 0.049824

REG_CITY_NOT_WORK_CITY 0.050994

DAYS_ID_PUBLISH 0.051457

CODE_GENDER_M 0.054713

DAYS_LAST_PHONE_CHANGE 0.055218

NAME_INCOME_TYPE_Working 0.057481

REGION_RATING_CLIENT 0.058899

REGION_RATING_CLIENT_W_CITY 0.060893

DAYS_BIRTH 0.078239

TARGET 1.000000

Name: TARGET, dtype: float64

:

EXT_SOURCE_3 -0.178919

EXT_SOURCE_2 -0.160472

EXT_SOURCE_1 -0.155317

NAME_EDUCATION_TYPE_Higher education -0.056593

CODE_GENDER_F -0.054704

NAME_INCOME_TYPE_Pensioner -0.046209

ORGANIZATION_TYPE_XNA -0.045987

DAYS_EMPLOYED -0.044932

FLOORSMAX_AVG -0.044003

FLOORSMAX_MEDI -0.043768

FLOORSMAX_MODE -0.043226

EMERGENCYSTATE_MODE_No -0.042201

HOUSETYPE_MODE_block of flats -0.040594

AMT_GOODS_PRICE -0.039645

REGION_POPULATION_RELATIVE -0.037227

Name: TARGET, dtype: float64Thus, all the data are weakly correlated with the target (except for the target itself, which, of course, is equal to itself). However, age and some “external data sources” are distinguished from the data. This is probably some additional data from other credit institutions. It is funny that although the goal is declared as independence from such data in making a credit decision, in fact we will be based primarily on them.

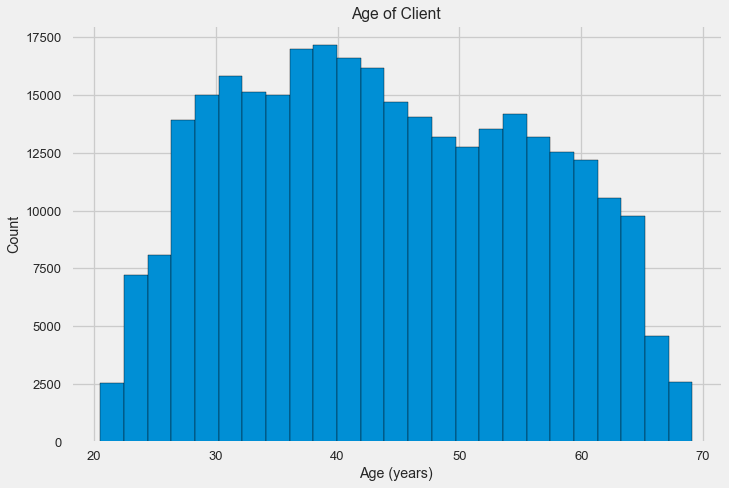

Age

It is clear that the older the client, the higher the probability of return (up to a certain limit, of course). But for some reason the age is indicated on negative days before the loan is issued, therefore, it positively correlates with non-return (which looks somewhat strange). Let's bring it to a positive value and look at the correlation.

app_train['DAYS_BIRTH'] = abs(app_train['DAYS_BIRTH']) app_train['DAYS_BIRTH'].corr(app_train['TARGET'])

-0.078239308309827088Let's look at the variable more carefully. Let's start with the histogram.

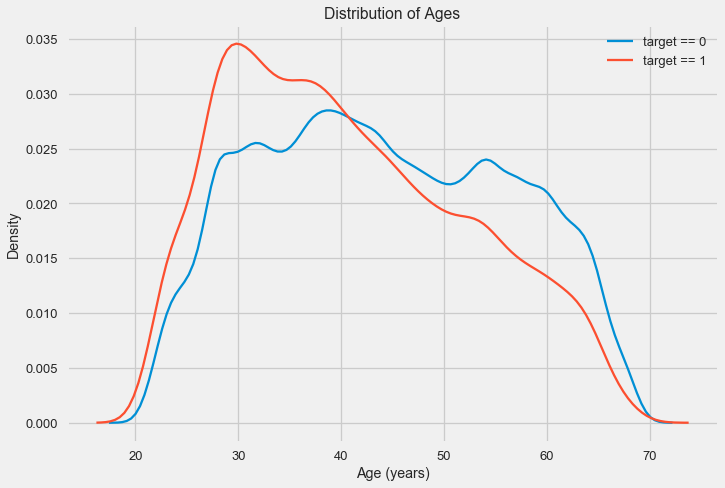

The distribution histogram itself may say something useful, except that we do not see any particular emissions and everything looks more or less plausible. To show the effect of age on the result, you can construct a graph of kernel density estimation (KDE) - the distribution of nuclear density, painted in the colors of the target feature. It shows the distribution of one variable and can be interpreted as a smoothed histogram (calculated as the Gaussian core over each point, which is then averaged to smooth).

As can be seen, the non-return rate is higher for young people and decreases with increasing age. This is not a reason to reject young people in a loan at all times, such a “recommendation” will only lead to a loss of income and a market for the bank. This is an occasion to think about a more thorough tracking of such loans, assessment, and perhaps even some kind of financial education for young borrowers.

External sources

Let's take a closer look at the “external data sources” EXT_SOURCE and their correlation.

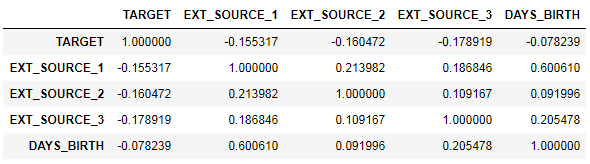

ext_data = app_train[['TARGET', 'EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH']] ext_data_corrs = ext_data.corr() ext_data_corrs

It is also convenient to display the correlation using heatmap.

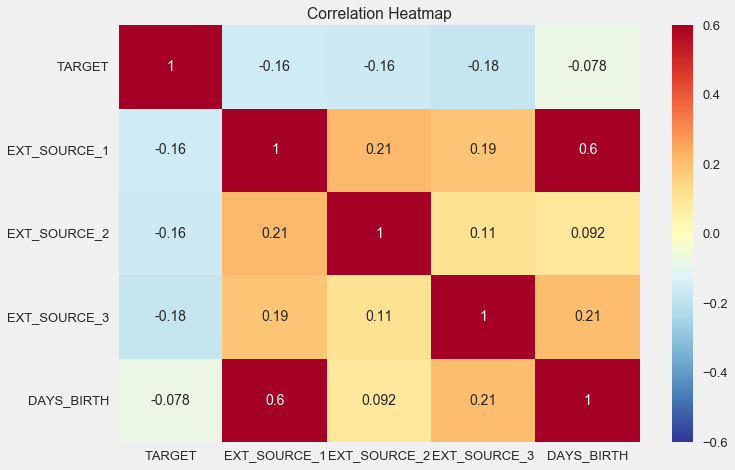

sns.heatmap(ext_data_corrs, cmap = plt.cm.RdYlBu_r, vmin = -0.25, annot = True, vmax = 0.6) plt.title('Correlation Heatmap');

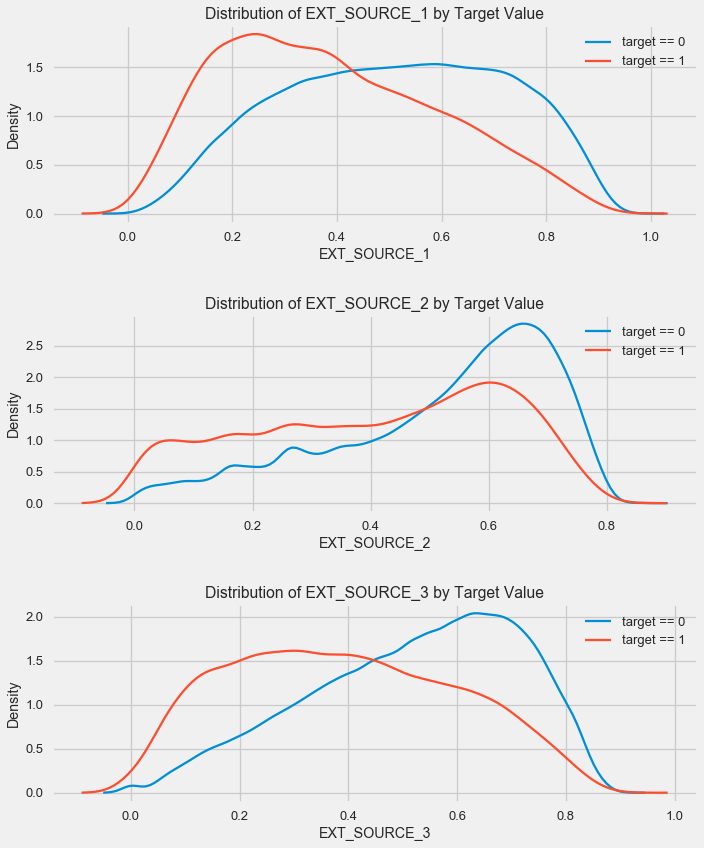

As you can see, all sources show a negative correlation with the target. Look at the KDE distribution for each source.

plt.figure(figsize = (10, 12))

The picture is similar to the distribution by age - with the growth of the indicator, the probability of repayment of a loan increases. The third source is strongest in this regard. Although in absolute terms the correlation with the target variable is still in the “very low” category, external data sources and age will have the highest value in building the model.

Pair schedule

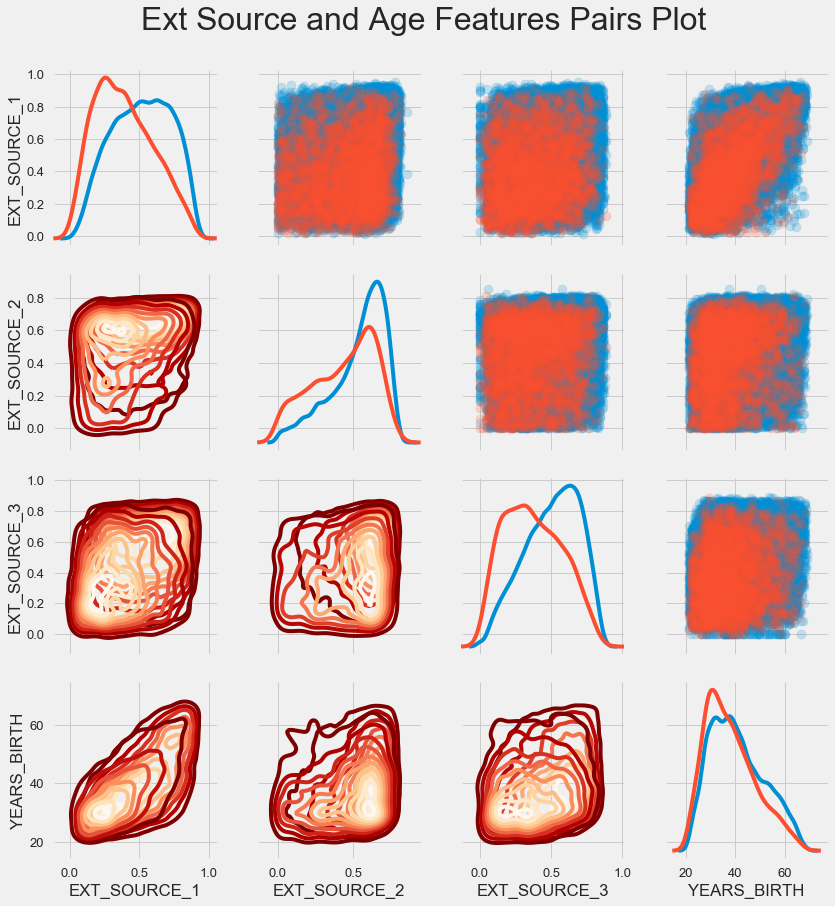

For a better understanding of the relationships between these variables, you can build a pair chart, in which we will be able to see the relationships of each pair and the histogram of the diagonal distribution. Above the diagonal you can show the scattering diagram, and below - 2d KDE.

Blue shows returnable loans, red - non-returnable. It is rather difficult to interpret this, but a good print on a T-shirt or a picture in a museum of modern art can come out of this picture.

Research other signs

Let us consider in more detail other signs and their dependence on the target variable. Since there are many categorical ones among them (and we have already managed to encode them), we will need the original data again. Let's call them a little differently to avoid confusion.

application_train = pd.read_csv(PATH+"application_train.csv") application_test = pd.read_csv(PATH+"application_test.csv")

We also need a couple of functions to beautifully display the distributions and their effect on the target variable. Many thanks

to the author of this

kernel here

. def plot_stats(feature,label_rotation=False,horizontal_layout=True): temp = application_train[feature].value_counts() df1 = pd.DataFrame({feature: temp.index,' ': temp.values})

So, we will consider the main signs of kolientov

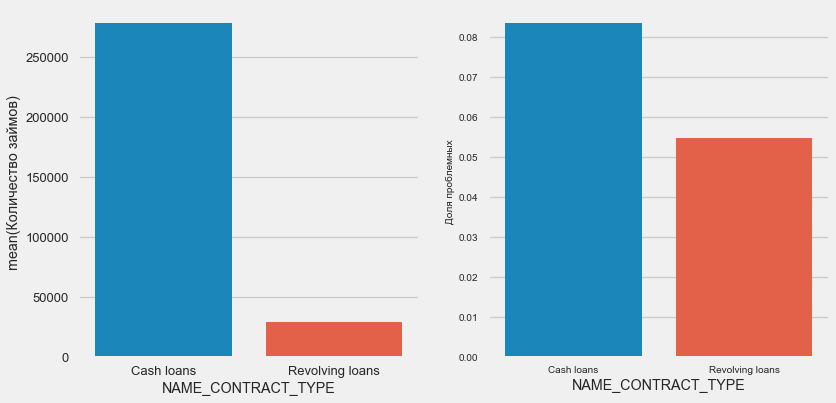

Type of loan

plot_stats('NAME_CONTRACT_TYPE')

Interestingly, revolving loans (probably overdrafts or something like that) make up less than 10% of the total number of loans. At the same time, the percentage of no return among them is much higher. It is a good reason to review the methodology of working with these loans, and maybe even abandon them altogether.

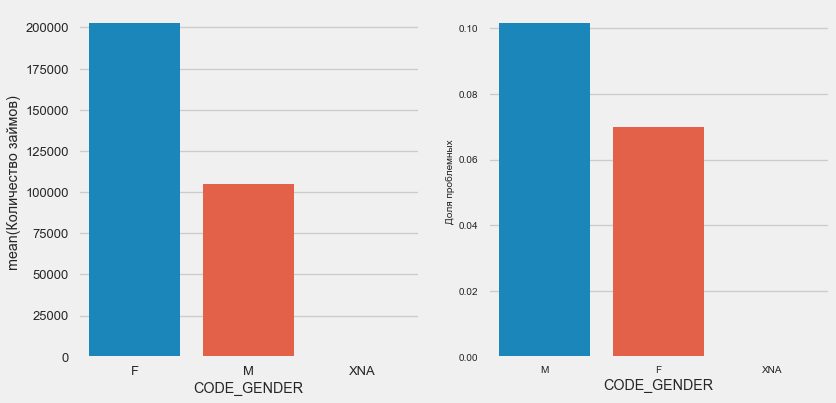

Customer gender

plot_stats('CODE_GENDER')

Women clients are almost twice as many men, while men show a much higher risk.

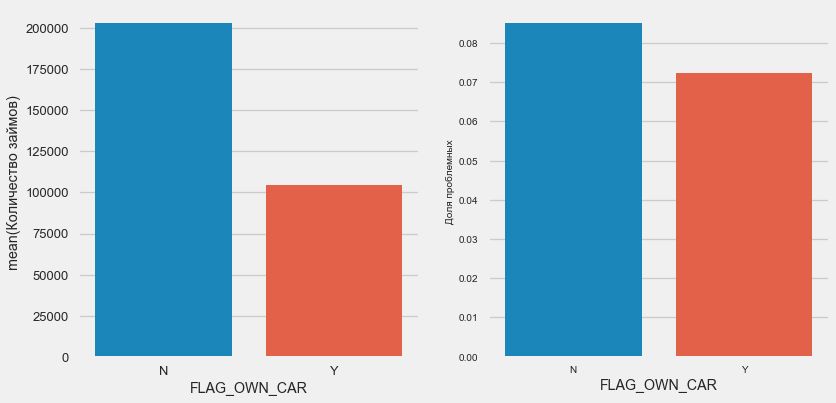

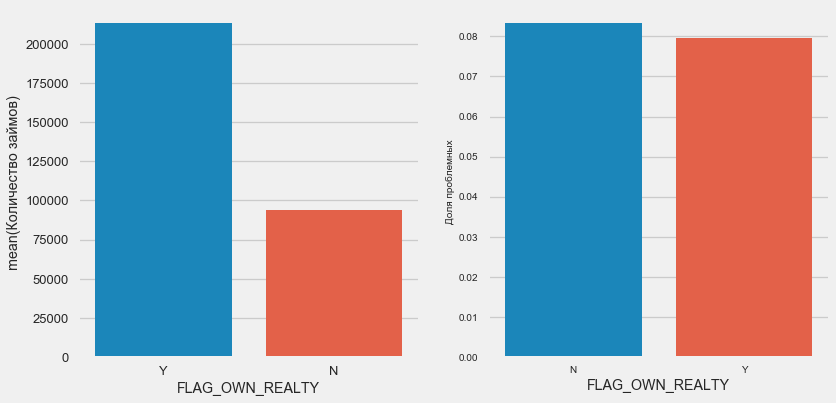

Owning a car and real estate

plot_stats('FLAG_OWN_CAR') plot_stats('FLAG_OWN_REALTY')

Clients with a machine half as "horseless". The risk on them is almost the same, customers with a machine pay a little better.

Real estate is the opposite picture - customers without it are half as much. The risk for property owners is also slightly less.

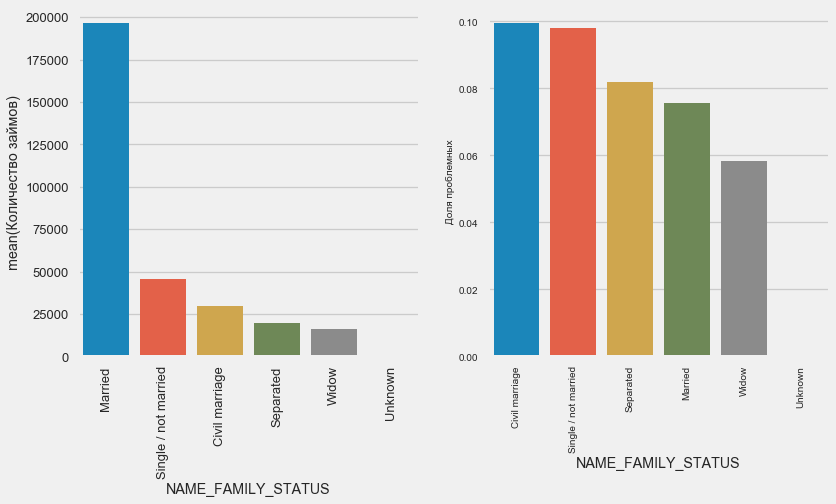

Family status

plot_stats('NAME_FAMILY_STATUS',True, True)

While the majority of clients are married, clients are unmarried and single-handed. Widowers show minimal risk.

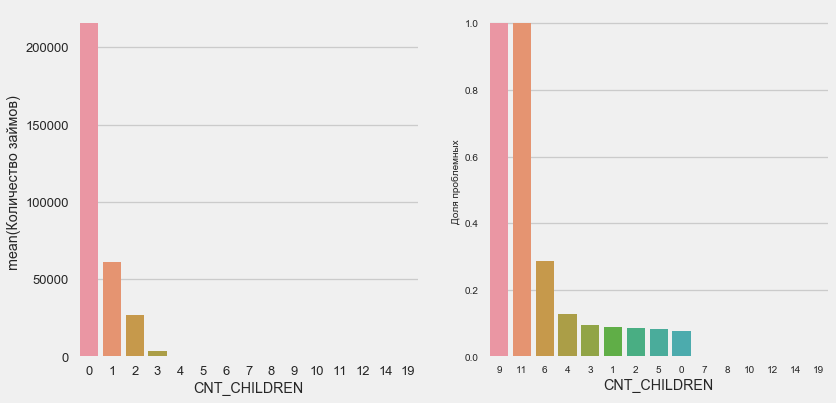

Amount of children

plot_stats('CNT_CHILDREN')

Most customers are childless. At the same time, customers with 9 and 11 children show complete non-return.

application_train.CNT_CHILDREN.value_counts()

0 215371

1 61119

2 26749

3 3717

4 429

5 84

6 21

7 7

14 3

19 2

12 2

10 2

9 2

8 2

11 1

Name: CNT_CHILDREN, dtype: int64As the calculation of values shows, these data are statistically insignificant - only 1-2 clients of both categories. However, all three were defaulted, as were half of the clients with 6 children.

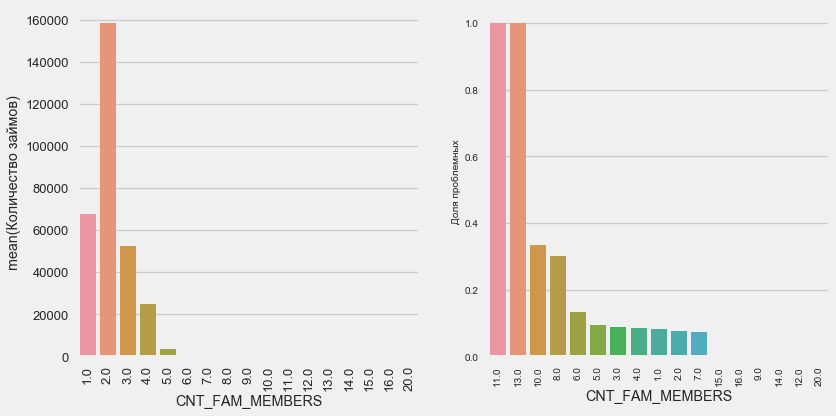

Number of family members

plot_stats('CNT_FAM_MEMBERS',True)

The situation is similar - the smaller the mouths, the higher the recurrence.

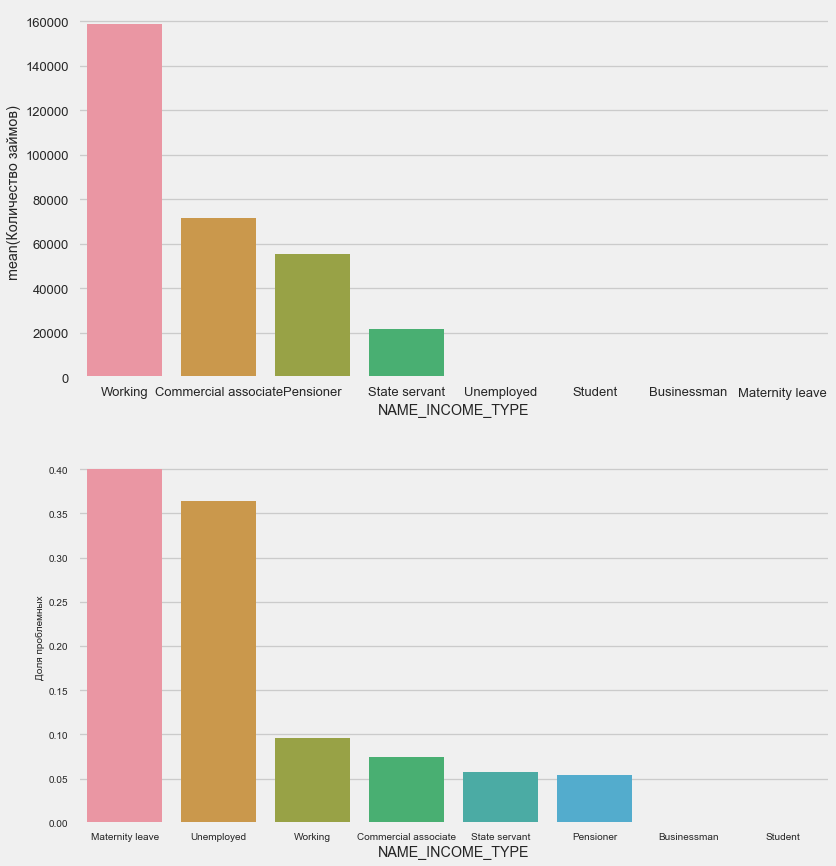

Type of income

plot_stats('NAME_INCOME_TYPE',False,False)

Single mothers and the unemployed are likely to be cut off at the application stage - there are too few of them in the sample. But consistently show problems.

Kind of activity

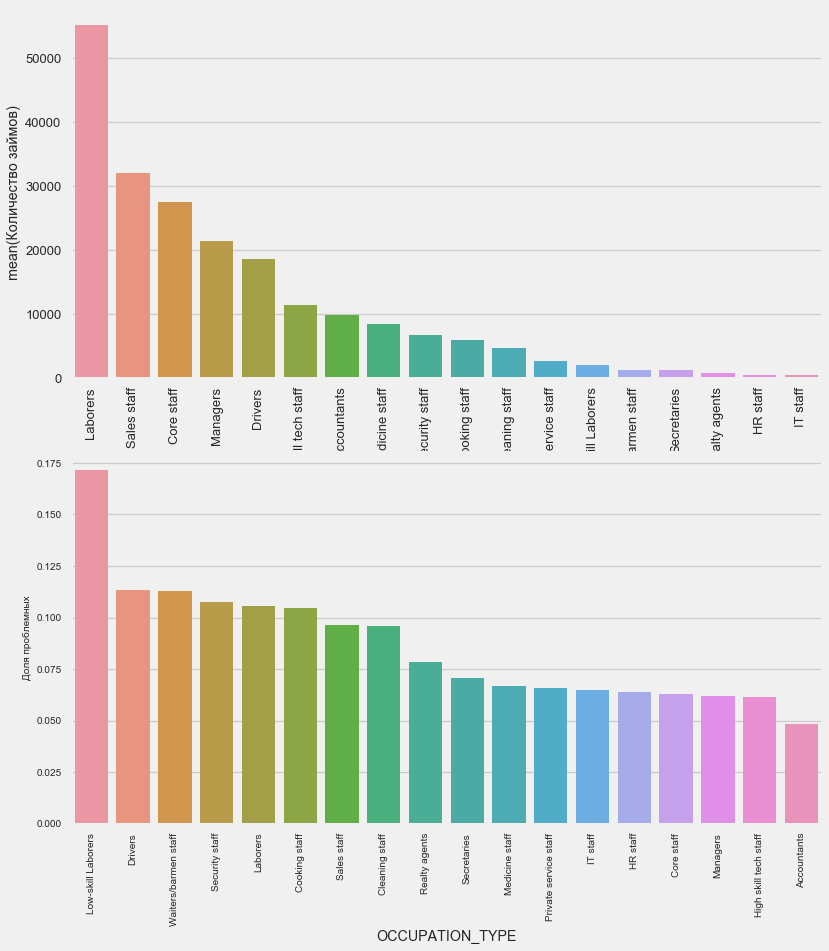

plot_stats('OCCUPATION_TYPE',True, False)

application_train.OCCUPATION_TYPE.value_counts()

Laborers 55186

Sales staff 32102

Core staff 27570

Managers 21371

Drivers 18603

High skill tech staff 11380

Accountants 9813

Medicine staff 8537

Security staff 6721

Cooking staff 5946

Cleaning staff 4653

Private service staff 2652

Low-skill Laborers 2093

Waiters/barmen staff 1348

Secretaries 1305

Realty agents 751

HR staff 563

IT staff 526

Name: OCCUPATION_TYPE, dtype: int64Here, drivers and security officers are of interest, which are quite numerous and come to the problem more often than other categories.

Education

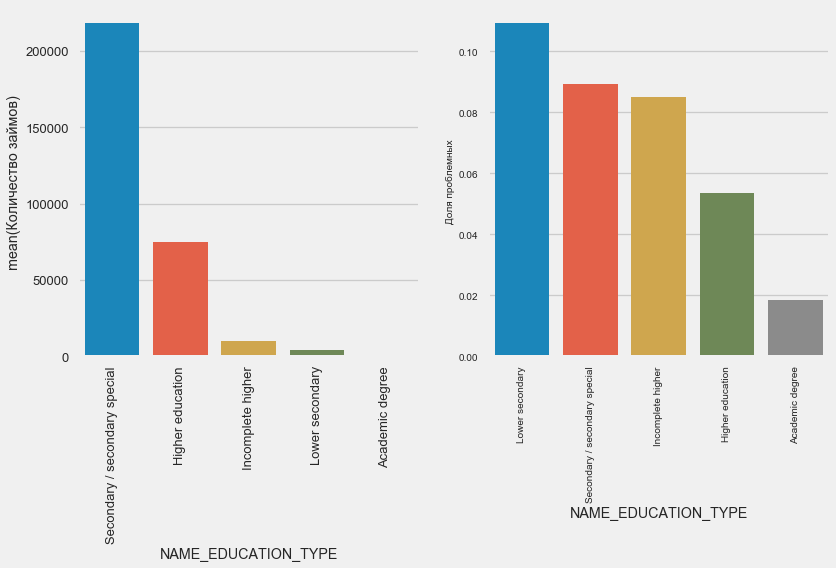

plot_stats('NAME_EDUCATION_TYPE',True)

The higher the education, the better the reflexivity is obvious.

Type of organization - employer

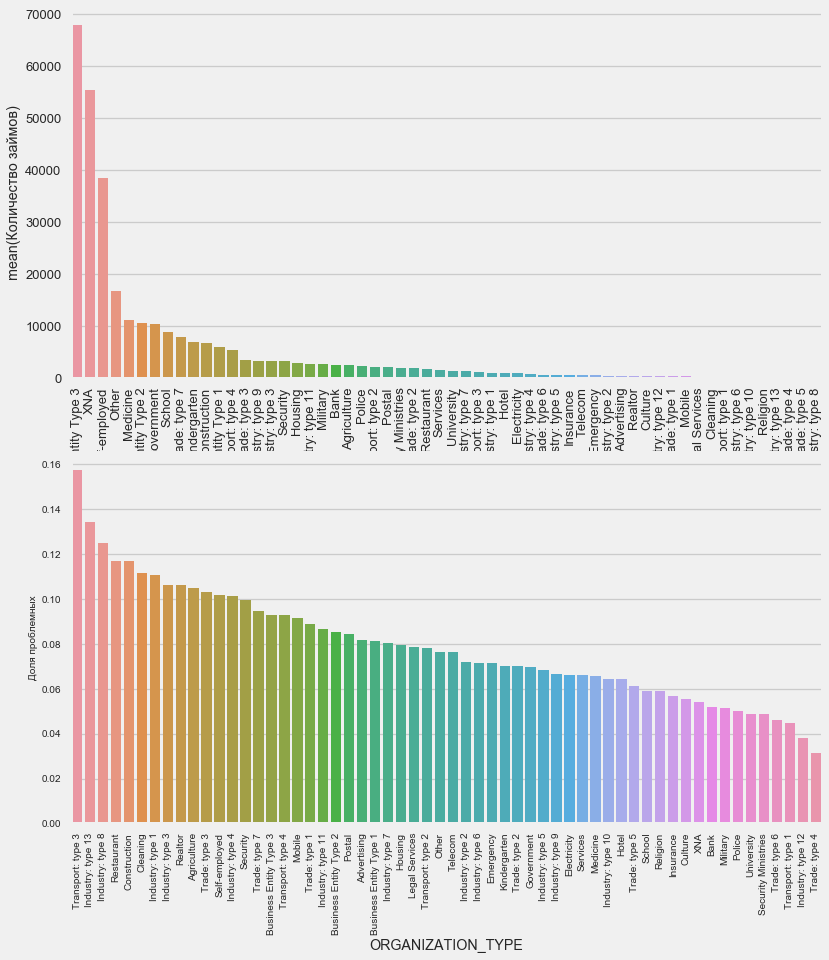

plot_stats('ORGANIZATION_TYPE',True, False)

The highest percentage of non-return is observed in Transport: type 3 (16%), Industry: type 13 (13.5%), Industry: type 8 (12.5%) and in Restaurant (up to 12%).

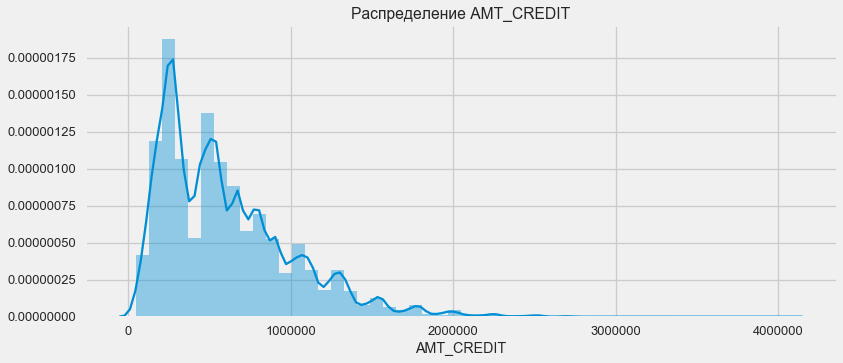

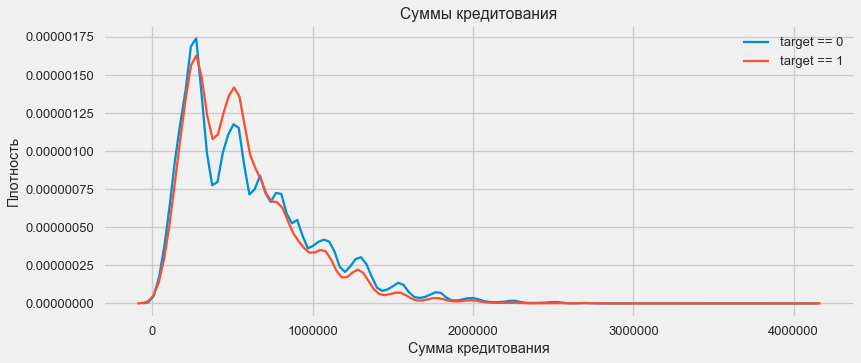

Loan Amount Distribution

Consider the distribution of loan amounts and their impact on repayment

plt.figure(figsize=(12,5)) plt.title(" AMT_CREDIT") ax = sns.distplot(app_train["AMT_CREDIT"])

plt.figure(figsize=(12,5))

As the density plot shows, strong sums come back more often.

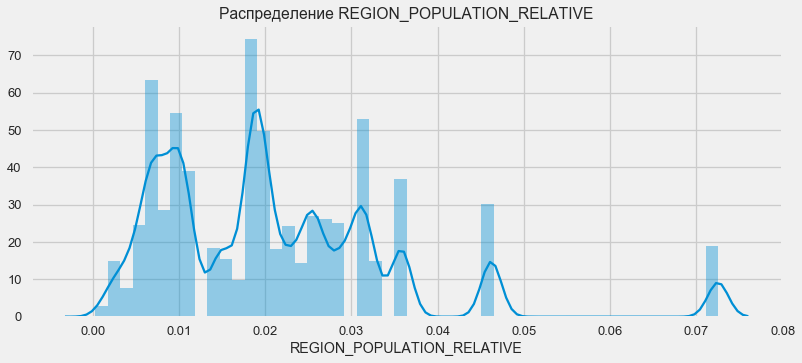

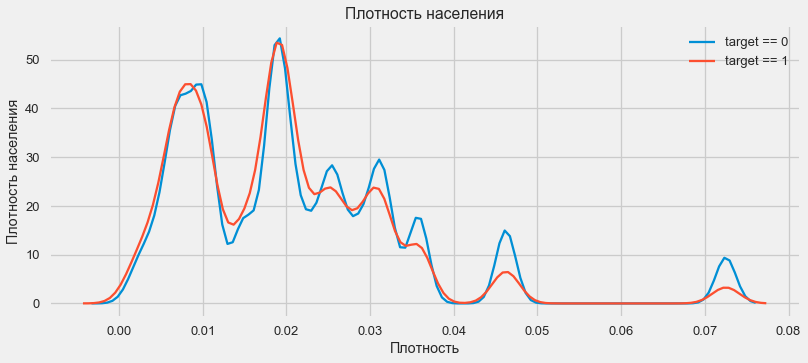

Density distribution

plt.figure(figsize=(12,5)) plt.title(" REGION_POPULATION_RELATIVE") ax = sns.distplot(app_train["REGION_POPULATION_RELATIVE"])

plt.figure(figsize=(12,5))

Customers from more populated regions tend to pay off loans better.

Thus, we got an idea about the main features of dataset and their influence on the result. Specifically, with the listed in this article, we will not do anything, but they can be very important in future work.

Feature Engineering - feature conversion

Competitions on Kaggle are won by the transformation of signs - the one who was able to create the most useful signs from the data wins. At least for structured data, winning models are now basically different versions of gradient boosting. Most often, it is more efficient to spend time converting signs than setting up hyper parameters or selecting models. The model can still be trained only by the data that is transferred to it. Ensuring that the data is relevant to the task is the primary responsibility of the date of the scientist.

The process of converting attributes can include creating new data from existing ones, choosing the most important ones from the available ones, etc. Let's try out this time polynomial signs.

Polynomial features

The polynomial method of constructing features consists in the fact that we simply create features that are the degree of the features available and their works. In some cases, such constructed features may have a stronger correlation with the target variable than their “parents”. Although such methods are often used in statistical models, they are much less common in machine learning. However. nothing prevents us from trying them, especially since Scikit-Learn has a class specifically for this purpose - PolynomialFeatures - which creates polynomial features and their works, you only need to specify the source features themselves and the maximum degree to which they need to be built. We use the most powerful effects on the result of 4 signs and degree 3 in order not to complicate the model too much and avoid overfitting (overtraining of the model - its excessive adjustment to the training set).

: (307511, 35)

get_feature_names poly_transformer.get_feature_names(input_features = ['EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH'])[:15]

['1',

'EXT_SOURCE_1',

'EXT_SOURCE_2',

'EXT_SOURCE_3',

'DAYS_BIRTH',

'EXT_SOURCE_1^2',

'EXT_SOURCE_1 EXT_SOURCE_2',

'EXT_SOURCE_1 EXT_SOURCE_3',

'EXT_SOURCE_1 DAYS_BIRTH',

'EXT_SOURCE_2^2',

'EXT_SOURCE_2 EXT_SOURCE_3',

'EXT_SOURCE_2 DAYS_BIRTH',

'EXT_SOURCE_3^2',

'EXT_SOURCE_3 DAYS_BIRTH',

'DAYS_BIRTH^2']Total 35 polynomial and derived attributes. Let us check their correlation with the target.

EXT_SOURCE_2 EXT_SOURCE_3 -0.193939

EXT_SOURCE_1 EXT_SOURCE_2 EXT_SOURCE_3 -0.189605

EXT_SOURCE_2 EXT_SOURCE_3 DAYS_BIRTH -0.181283

EXT_SOURCE_2^2 EXT_SOURCE_3 -0.176428

EXT_SOURCE_2 EXT_SOURCE_3^2 -0.172282

EXT_SOURCE_1 EXT_SOURCE_2 -0.166625

EXT_SOURCE_1 EXT_SOURCE_3 -0.164065

EXT_SOURCE_2 -0.160295

EXT_SOURCE_2 DAYS_BIRTH -0.156873

EXT_SOURCE_1 EXT_SOURCE_2^2 -0.156867

Name: TARGET, dtype: float64

DAYS_BIRTH -0.078239

DAYS_BIRTH^2 -0.076672

DAYS_BIRTH^3 -0.074273

TARGET 1.000000

1 NaN

Name: TARGET, dtype: float64So, some signs show a higher correlation than the original ones. It makes sense to try learning with and without them (like so much else in machine learning, this can be determined experimentally). To do this, create a copy of the data frames and add new features there.

: (307511, 277)

: (48744, 277)Model training

A basic level of

In the calculations, you need to make a start from some basic level of the model, below which it is no longer possible to fall. In our case, this could be 0.5 for all test clients - this shows that we absolutely can’t imagine whether the client will return the loan or not. In our case, preliminary work has already been done and more complex models can be used.Logistic regression

To calculate the logistic regression, we need to take tables with coded categorical features, fill in the missing data and normalize them (lead to values from 0 to 1). All this executes the following code: from sklearn.preprocessing import MinMaxScaler, Imputer

: (307511, 242)

: (48744, 242)We use logistic regression from Scikit-Learn as the first model. Let's take the defol model with one amendment - lower the regularization parameter C to avoid overfitting. The usual syntax is to create a model, train it and predict the probability using predict_proba (we need probability, not 0/1) from sklearn.linear_model import LogisticRegression

Now you can create a file to upload to Kaggle. Create a dataframe from customer IDs and the likelihood of non-return and unload it. submit = app_test[['SK_ID_CURR']] submit['TARGET'] = log_reg_pred submit.head()

SK_ID_CURR TARGET

0 100001 0.087954

1 100005 0.163151

2 100013 0.109923

3 100028 0.077124

4 100038 0.151694 submit.to_csv('log_reg_baseline.csv', index = False)

So, the result of our titanic work: 0.673, with the best result for today 0.802.Improved model - random forest

, — . , . 100 . , — , . .

from sklearn.ensemble import RandomForestClassifier

— 0,683, . - — . .

poly_features_names = list(app_train_poly.columns)

— 0,633. .Gradient boosting is a “serious model” for machine learning. Practically all last competitions "are dragged" precisely. Let's build a simple model and check its performance. from lightgbm import LGBMClassifier clf = LGBMClassifier() clf.fit(train, train_labels) predictions = clf.predict_proba(test)[:, 1]

The result of LightGBM is 0.735, which strongly leaves behind all other models.Interpreting the model - the importance of signs

The simplest method for interpreting a model is to look at the importance of attributes (which not all models can do). Since our classifier processed the array, it will take some work to re-set the column names in accordance with the columns of this array.

As might be expected, the most important to model all the same 4 features. The importance of attributes is not the best method for interpreting a model, but it allows you to understand the main factors that the model uses for predictions.feature importance

28 EXT_SOURCE_1 310

30 EXT_SOURCE_3 282

29 EXT_SOURCE_2 271

7 DAYS_BIRTH 192

3 AMT_CREDIT 161

4 AMT_ANNUITY 142

5 AMT_GOODS_PRICE 129

8 DAYS_EMPLOYED 127

10 DAYS_ID_PUBLISH 102

9 DAYS_REGISTRATION 69

0.01 = 158

. . , .

import gc

,

data = pd.read_csv('../input/application_train.csv') test = pd.read_csv('../input/application_test.csv') prev = pd.read_csv('../input/previous_application.csv') buro = pd.read_csv('../input/bureau.csv') buro_balance = pd.read_csv('../input/bureau_balance.csv') credit_card = pd.read_csv('../input/credit_card_balance.csv') POS_CASH = pd.read_csv('../input/POS_CASH_balance.csv') payments = pd.read_csv('../input/installments_payments.csv')

. , , . — , , , .

categorical_features = [col for col in data.columns if data[col].dtype == 'object'] one_hot_df = pd.concat([data,test]) one_hot_df = pd.get_dummies(one_hot_df, columns=categorical_features) data = one_hot_df.iloc[:data.shape[0],:] test = one_hot_df.iloc[data.shape[0]:,] print (' ', data.shape) print (' ', test.shape)

(307511, 245)

(48744, 245).

buro_balance.head()

MONTHS_BALANCE — . «»

buro_balance.STATUS.value_counts()

C 13646993

0 7499507

X 5810482

1 242347

5 62406

2 23419

3 8924

4 5847

Name: STATUS, dtype: int64:

— closed, . X — . 0 — , . 1 — 1-30 , 2 — 31-60 5 — .

: buro_grouped_size — buro_grouped_max — buro_grouped_min —

( unstack, buro, SK_ID_BUREAU .

buro_grouped_size = buro_balance.groupby('SK_ID_BUREAU')['MONTHS_BALANCE'].size() buro_grouped_max = buro_balance.groupby('SK_ID_BUREAU')['MONTHS_BALANCE'].max() buro_grouped_min = buro_balance.groupby('SK_ID_BUREAU')['MONTHS_BALANCE'].min() buro_counts = buro_balance.groupby('SK_ID_BUREAU')['STATUS'].value_counts(normalize = False) buro_counts_unstacked = buro_counts.unstack('STATUS') buro_counts_unstacked.columns = ['STATUS_0', 'STATUS_1','STATUS_2','STATUS_3','STATUS_4','STATUS_5','STATUS_C','STATUS_X',] buro_counts_unstacked['MONTHS_COUNT'] = buro_grouped_size buro_counts_unstacked['MONTHS_MIN'] = buro_grouped_min buro_counts_unstacked['MONTHS_MAX'] = buro_grouped_max buro = buro.join(buro_counts_unstacked, how='left', on='SK_ID_BUREAU') del buro_balance gc.collect()

buro.head()

( 7 )

, , -, One-Hot-Encoding', SK_ID_CURR, , ,

buro_cat_features = [bcol for bcol in buro.columns if buro[bcol].dtype == 'object'] buro = pd.get_dummies(buro, columns=buro_cat_features) avg_buro = buro.groupby('SK_ID_CURR').mean() avg_buro['buro_count'] = buro[['SK_ID_BUREAU', 'SK_ID_CURR']].groupby('SK_ID_CURR').count()['SK_ID_BUREAU'] del avg_buro['SK_ID_BUREAU'] del buro gc.collect()

prev.head()

, ID.

prev_cat_features = [pcol for pcol in prev.columns if prev[pcol].dtype == 'object'] prev = pd.get_dummies(prev, columns=prev_cat_features) avg_prev = prev.groupby('SK_ID_CURR').mean() cnt_prev = prev[['SK_ID_CURR', 'SK_ID_PREV']].groupby('SK_ID_CURR').count() avg_prev['nb_app'] = cnt_prev['SK_ID_PREV'] del avg_prev['SK_ID_PREV'] del prev gc.collect()

POS_CASH.head()

POS_CASH.NAME_CONTRACT_STATUS.value_counts()

Active 9151119

Completed 744883

Signed 87260

Demand 7065

Returned to the store 5461

Approved 4917

Amortized debt 636

Canceled 15

XNA 2

Name: NAME_CONTRACT_STATUS, dtype: int64 le = LabelEncoder() POS_CASH['NAME_CONTRACT_STATUS'] = le.fit_transform(POS_CASH['NAME_CONTRACT_STATUS'].astype(str)) nunique_status = POS_CASH[['SK_ID_CURR', 'NAME_CONTRACT_STATUS']].groupby('SK_ID_CURR').nunique() nunique_status2 = POS_CASH[['SK_ID_CURR', 'NAME_CONTRACT_STATUS']].groupby('SK_ID_CURR').max() POS_CASH['NUNIQUE_STATUS'] = nunique_status['NAME_CONTRACT_STATUS'] POS_CASH['NUNIQUE_STATUS2'] = nunique_status2['NAME_CONTRACT_STATUS'] POS_CASH.drop(['SK_ID_PREV', 'NAME_CONTRACT_STATUS'], axis=1, inplace=True)

credit_card.head()

( 7 )

credit_card['NAME_CONTRACT_STATUS'] = le.fit_transform(credit_card['NAME_CONTRACT_STATUS'].astype(str)) nunique_status = credit_card[['SK_ID_CURR', 'NAME_CONTRACT_STATUS']].groupby('SK_ID_CURR').nunique() nunique_status2 = credit_card[['SK_ID_CURR', 'NAME_CONTRACT_STATUS']].groupby('SK_ID_CURR').max() credit_card['NUNIQUE_STATUS'] = nunique_status['NAME_CONTRACT_STATUS'] credit_card['NUNIQUE_STATUS2'] = nunique_status2['NAME_CONTRACT_STATUS'] credit_card.drop(['SK_ID_PREV', 'NAME_CONTRACT_STATUS'], axis=1, inplace=True)

payments.head()

( 7 )

— , .

avg_payments = payments.groupby('SK_ID_CURR').mean() avg_payments2 = payments.groupby('SK_ID_CURR').max() avg_payments3 = payments.groupby('SK_ID_CURR').min() del avg_payments['SK_ID_PREV'] del payments gc.collect()

data = data.merge(right=avg_prev.reset_index(), how='left', on='SK_ID_CURR') test = test.merge(right=avg_prev.reset_index(), how='left', on='SK_ID_CURR') data = data.merge(right=avg_buro.reset_index(), how='left', on='SK_ID_CURR') test = test.merge(right=avg_buro.reset_index(), how='left', on='SK_ID_CURR') data = data.merge(POS_CASH.groupby('SK_ID_CURR').mean().reset_index(), how='left', on='SK_ID_CURR') test = test.merge(POS_CASH.groupby('SK_ID_CURR').mean().reset_index(), how='left', on='SK_ID_CURR') data = data.merge(credit_card.groupby('SK_ID_CURR').mean().reset_index(), how='left', on='SK_ID_CURR') test = test.merge(credit_card.groupby('SK_ID_CURR').mean().reset_index(), how='left', on='SK_ID_CURR') data = data.merge(right=avg_payments.reset_index(), how='left', on='SK_ID_CURR') test = test.merge(right=avg_payments.reset_index(), how='left', on='SK_ID_CURR') data = data.merge(right=avg_payments2.reset_index(), how='left', on='SK_ID_CURR') test = test.merge(right=avg_payments2.reset_index(), how='left', on='SK_ID_CURR') data = data.merge(right=avg_payments3.reset_index(), how='left', on='SK_ID_CURR') test = test.merge(right=avg_payments3.reset_index(), how='left', on='SK_ID_CURR') del avg_prev, avg_buro, POS_CASH, credit_card, avg_payments, avg_payments2, avg_payments3 gc.collect() print (' ', data.shape) print (' ', test.shape) print (' ', y.shape)

(307511, 504)

(48744, 504)

(307511,), , !

from lightgbm import LGBMClassifier clf2 = LGBMClassifier() clf2.fit(data, y) predictions = clf2.predict_proba(test)[:, 1]

the result is 0.770.OK, finally, we’ll try a more complicated procedure with fold separation, cross-validation and selection of the best iteration. folds = KFold(n_splits=5, shuffle=True, random_state=546789) oof_preds = np.zeros(data.shape[0]) sub_preds = np.zeros(test.shape[0]) feature_importance_df = pd.DataFrame() feats = [f for f in data.columns if f not in ['SK_ID_CURR']] for n_fold, (trn_idx, val_idx) in enumerate(folds.split(data)): trn_x, trn_y = data[feats].iloc[trn_idx], y.iloc[trn_idx] val_x, val_y = data[feats].iloc[val_idx], y.iloc[val_idx] clf = LGBMClassifier( n_estimators=10000, learning_rate=0.03, num_leaves=34, colsample_bytree=0.9, subsample=0.8, max_depth=8, reg_alpha=.1, reg_lambda=.1, min_split_gain=.01, min_child_weight=375, silent=-1, verbose=-1, ) clf.fit(trn_x, trn_y, eval_set= [(trn_x, trn_y), (val_x, val_y)], eval_metric='auc', verbose=100, early_stopping_rounds=100

Full AUC score 0.785845Final fast on kaggle 0.783Where to go next

Definitely continue to work with signs. Investigate the data, select some of the features, combine them, attach additional tables in a different way. You can experiment with hyper parameters of the mozheli - there are many directions.I hope this small compilation has shown you modern methods of data research and the preparation of predictive models. Learn datasens, participate in competitions, be cool!And once again references to the kernels that helped me prepare this article. The article is also posted in the form of a laptop on Github , you can download it, dataset and run and experiment.Will Koehrsen. Start Here: A Gentle Introductionsban. HomeCreditRisk: Extensive EDA + Baseline [0.772]Gabriel Preda. Home Credit Default Risk Extensive EDAPavan Raj. Loan repayers v/s Loan defaulters — HOME CREDITLem Lordje Ko. 15 lines: Just EXT_SOURCE_xShanth. HOME CREDIT — BUREAU DATA — FEATURE ENGINEERINGDmitriy Kisil. Good_fun_with_LigthGBM