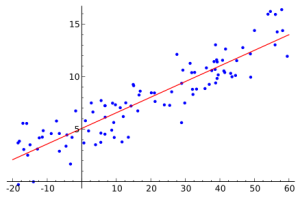

In simple terms, a regression model in mathematical statistics is built from known data in the form of pairs of numbers. The number of such pairs is fixed in advance. If we treat the first number in a pair as the coordinate $x$ and the second as $y$, the set of pairs can be drawn as a set of points on a plane in the Cartesian coordinate system. These pairs are not arbitrary: in practice, as a rule, the second number depends on the first. To build a regression is to choose a line (more precisely, a function) that approximates this set of points as closely as possible.

What is all this for? First of all, for making forecasts. Often you need to estimate $y$ knowing only $x$, where $x$ differs from the values on which the regression was built. Here is a simple example. Suppose we have statistics on how a person's height depends on age, collected from 100 different people. Thus we have 100 pairs of numbers {age; height}. Here "height" is the dependent quantity and "age" is the independent one. Having built the regression model competently, we can "predict" height, with some degree of certainty, for any age value.

In practice, depending on the situation, linear, parabolic, power, and other types of functions are used to build regression models. Courses in mathematical statistics most often consider the linear regression model, and sometimes a more complicated case, the parabolic model. Generalizing, it is easy to see that the linear and parabolic models are special cases of a more general one: the polynomial model. To build a regression model is to find the parameters of the function that appears in it. For linear regression there are two parameters: the slope coefficient and the free term (intercept).

Polynomial regression can be applied in mathematical statistics to model the trend component of a time series. A time series is essentially a sequence of numbers that depend on time, for example the daily average air temperature over the past year, or an enterprise's income by month. The order of the fitted polynomial is estimated by special methods, for example the series (runs) criterion. The goal of building a polynomial regression model for a time series is the same: forecasting.

To begin, consider the polynomial regression problem in general. The reasoning generalizes that used for the linear and parabolic regression problems. After that, I will turn to a special case: applying this model to time series.

Let two series of observations be given: $x_i$ (the independent variable) and $y_i$ (the dependent variable), $i = \overline{1,n}$. The polynomial equation has the form

$$y = \sum_{j=0}^{k} b_j x^j, \qquad (1)$$

where $b_j$ are the parameters of the polynomial, $j = \overline{0,k}$. Among them, $b_0$ is the free term. We use the method of least squares (OLS) to find the parameters $b_j$ of this regression.

By analogy with linear regression, OLS is also based on minimizing the following expression:

$$S = \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right)^2 \to \min. \qquad (2)$$

Here $\hat{y}_i$ are the theoretical values, that is, the values of polynomial (1) at the points $x_i$. Substituting (1) into (2), we obtain

$$S = \sum_{i=1}^{n} \left( \sum_{j=0}^{k} b_j x_i^j - y_i \right)^2 \to \min.$$
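The quantity $S$ is easy to evaluate directly, which helps when checking a candidate set of coefficients. The following is a minimal sketch, assuming NumPy is available; the function name `sum_squared_residuals` and the sample data are made up for illustration and are not from the original article.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def sum_squared_residuals(b, x, y):
    """Evaluate S from (2) for coefficients b = [b_0, ..., b_k]."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # polyval takes coefficients in increasing order of power: b_0, b_1, ..., b_k
    y_hat = P.polyval(x, b)
    return float(np.sum((y_hat - y) ** 2))


# Illustrative (made-up) data lying exactly on y = 1 + 2x
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]

s_exact = sum_squared_residuals([1.0, 2.0], x, y)  # the true coefficients
s_off = sum_squared_residuals([0.0, 2.0], x, y)    # free term shifted by 1
```

With the true coefficients every residual is zero, so `s_exact` is 0. With the free term shifted by 1, each of the four residuals equals -1, so `s_off` is 4.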

From the necessary condition for an extremum of the function of $(k+1)$ variables $S = S(b_0, b_1, \dots, b_k)$, we set its partial derivatives to zero, i.e.

$$S'_{b_p} = 2 \sum_{i=1}^{n} x_i^p \left( \sum_{j=0}^{k} b_j x_i^j - y_i \right) = 0, \quad p = \overline{0,k}.$$

Dividing both sides of each equality by 2, we expand the inner sum:

$$\sum_{i=1}^{n} x_i^p \left( b_0 + b_1 x_i + b_2 x_i^2 + \dots + b_k x_i^k \right) - \sum_{i=1}^{n} x_i^p y_i = 0, \quad p = \overline{0,k}.$$

Expanding the brackets, in each $p$-th equation we move the last term, the one containing $y_i$, to the right-hand side and divide both sides by $n$. As a result, we obtain $(k+1)$ equations that form a system of linear normal equations in $b_p$. It has the following form:

$$\left\{ \begin{array}{l}
b_0 + b_1 \overline{x} + b_2 \overline{x^2} + \dots + b_k \overline{x^k} = \overline{y} \\
b_0 \overline{x} + b_1 \overline{x^2} + b_2 \overline{x^3} + \dots + b_k \overline{x^{k+1}} = \overline{xy} \\
b_0 \overline{x^2} + b_1 \overline{x^3} + b_2 \overline{x^4} + \dots + b_k \overline{x^{k+2}} = \overline{x^2 y} \\
\ldots\ldots\ldots\ldots\ldots\ldots\ldots\ldots\ldots\ldots\ldots\ldots\ldots \\
b_0 \overline{x^k} + b_1 \overline{x^{k+1}} + b_2 \overline{x^{k+2}} + \dots + b_k \overline{x^{2k}} = \overline{x^k y}
\end{array} \right. \qquad (3)$$
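As a quick sanity check (a worked special case added for illustration, not part of the original derivation), setting $k = 1$ in system (3) leaves two equations in $b_0$ and $b_1$:

$$\left\{ \begin{array}{l}
b_0 + b_1 \overline{x} = \overline{y} \\
b_0 \overline{x} + b_1 \overline{x^2} = \overline{xy}
\end{array} \right.$$

Multiplying the first equation by $\overline{x}$ and subtracting it from the second eliminates $b_0$, which gives

$$b_1 = \frac{\overline{xy} - \overline{x}\,\overline{y}}{\overline{x^2} - \left(\overline{x}\right)^2}, \qquad b_0 = \overline{y} - b_1 \overline{x},$$

provided the $x_i$ are not all equal, so that the denominator is nonzero. These are the familiar formulas for the slope and free term of simple linear regression, as expected for a polynomial of order 1.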

System (3) can be rewritten in matrix form as $AB = C$, where

$$A = \left( \begin{array}{ccccc}
1 & \overline{x} & \overline{x^2} & \ldots & \overline{x^k} \\
\overline{x} & \overline{x^2} & \overline{x^3} & \ldots & \overline{x^{k+1}} \\
\overline{x^2} & \overline{x^3} & \overline{x^4} & \ldots & \overline{x^{k+2}} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
\overline{x^k} & \overline{x^{k+1}} & \overline{x^{k+2}} & \ldots & \overline{x^{2k}}
\end{array} \right), \quad
B = \left( \begin{array}{c} b_0 \\ b_1 \\ b_2 \\ \vdots \\ b_k \end{array} \right), \quad
C = \left( \begin{array}{c} \overline{y} \\ \overline{xy} \\ \overline{x^2 y} \\ \vdots \\ \overline{x^k y} \end{array} \right).$$
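The matrix form translates almost directly into code. Below is a minimal sketch, assuming NumPy; the function name `fit_polynomial_normal_equations` and the sample data are hypothetical and chosen only to illustrate filling $A$ and $C$ with sample means and solving $AB = C$.

```python
import numpy as np


def fit_polynomial_normal_equations(x, y, k):
    """Return [b_0, ..., b_k] by solving the normal equations A B = C."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # mean_pow[m] is the sample mean of x^m for m = 0..2k
    mean_pow = np.array([np.mean(x ** m) for m in range(2 * k + 1)])
    # With 0-based row p and column j, the entry of A is the mean of x^(p+j)
    A = np.array([[mean_pow[p + j] for j in range(k + 1)]
                  for p in range(k + 1)])
    # Entry p of C is the mean of x^p * y
    C = np.array([np.mean(x ** p * y) for p in range(k + 1)])
    return np.linalg.solve(A, C)


# Illustrative (made-up) data lying exactly on y = 2 - x + 0.5 x^2
x = np.arange(6, dtype=float)
y = 2.0 - x + 0.5 * x ** 2

b = fit_polynomial_normal_equations(x, y, k=2)
```

Because the sample points lie exactly on a parabola, the recovered coefficients `b` should match `[2, -1, 0.5]` up to rounding error.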

We now apply the above to time series. Let a time series $x_t$ be given, where $t = \overline{1,n}$. We need to build a polynomial trend of order $k$ that approximates the given time series as closely as possible. By the definition of a time series, we take $t$ as the independent variable $x$. These values are the natural numbers that index the time periods. The time series values $x_t$ are taken as $y$. In this case, clearly, the elements $a_{ij}$ of the system matrix $A$ do not depend on $x_t$. Since in the general case, obviously,

$$a_{ij} = \overline{x^{i+j-2}} = \frac{1}{n} \sum_{r=1}^{n} x_r^{i+j-2},$$

then in the case of time series

$$a_{ij} = \frac{1}{n} \sum_{r=1}^{n} r^{i+j-2},$$

where $i, j = \overline{1,(k+1)}$. The elements $c_j$ of the free-term column $C$ are obtained in general as

$$c_j = \overline{x^{j-1} y} = \frac{1}{n} \sum_{r=1}^{n} x_r^{j-1} y_r,$$

and in the case of time series

$$c_j = \frac{1}{n} \sum_{r=1}^{n} r^{j-1} x_r,$$

where $j = \overline{1,(k+1)}$. Thus, having solved system (3), we find the desired parameters $b_0, \dots, b_k$ of the polynomial trend.
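The time series formulas can be sketched in code as follows. This is an illustration under stated assumptions, not the author's implementation: it uses NumPy, and the helper names `trend_matrix` and `trend_rhs` as well as the sample series are made up. Note that `trend_matrix` takes only $n$ and $k$, which reflects the fact that $A$ does not depend on the series values.

```python
import numpy as np


def trend_matrix(n, k):
    """Matrix A for a series of length n: a_ij = mean of r^(i+j-2), r = 1..n."""
    r = np.arange(1, n + 1, dtype=float)
    # With 0-based i and j, the exponent i + j - 2 becomes i + j
    return np.array([[np.mean(r ** (i + j)) for j in range(k + 1)]
                     for i in range(k + 1)])


def trend_rhs(series, k):
    """Column C for the values x_1..x_n: c_j = mean of r^(j-1) * x_r."""
    series = np.asarray(series, dtype=float)
    r = np.arange(1, len(series) + 1, dtype=float)
    # With 0-based j, the exponent j - 1 becomes j
    return np.array([np.mean(r ** j * series) for j in range(k + 1)])


# Illustrative (made-up) series following x_t = 3 + 2t for t = 1..6
series = [5.0, 7.0, 9.0, 11.0, 13.0, 15.0]

A = trend_matrix(len(series), k=1)
C = trend_rhs(series, k=1)
b = np.linalg.solve(A, C)  # [b_0, b_1]
```

For this series the first row of `A` is `[1, 3.5]`, since 3.5 is the mean of the numbers 1 to 6, and the solution recovers the free term 3 and the slope 2 up to rounding error.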

To model the trend on a computer, you can fill in the matrices of the system and solve it with one of the standard numerical methods. The result of the calculation will then be fairly accurate.
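One possible numerical route, shown here as an assumption rather than as the method the article has in mind, is to skip forming $A$ explicitly and hand the same least-squares problem to a library solver. The matrix of the normal equations tends to become ill-conditioned as $k$ and $n$ grow, and working with the matrix of powers of $t$ directly is usually more robust. The sketch below uses NumPy's `np.vander` and `np.linalg.lstsq`; the function name `fit_trend_lstsq` and the data are hypothetical.

```python
import numpy as np


def fit_trend_lstsq(series, k):
    """Least-squares trend coefficients [b_0, ..., b_k] for t = 1..n."""
    series = np.asarray(series, dtype=float)
    t = np.arange(1, len(series) + 1, dtype=float)
    # Columns of the design matrix are t^0, t^1, ..., t^k
    design = np.vander(t, k + 1, increasing=True)
    # lstsq minimizes the same sum of squares S as the normal equations
    b, *_ = np.linalg.lstsq(design, series, rcond=None)
    return b


# Illustrative (made-up) series following x_t = 1 + 0.5 t^2 for t = 1..5
series = [1.5, 3.0, 5.5, 9.0, 13.5]

b = fit_trend_lstsq(series, k=2)
```

For small orders such as $k = 1$ or $k = 2$ both approaches should give the same coefficients up to rounding error; here `b` should be close to `[1, 0, 0.5]`.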

As a result, the trend component takes the form

$$T_t = \sum_{i=0}^{k} b_i t^i, \quad t = 0, 1, 2, \dots$$

It is also worth noting that the modeled trend component $T_t$ is defined not only for the current periods $[1; n]$ but also for future periods $t > n$.
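Forecasting then amounts to evaluating the same polynomial at $t > n$. Here is a minimal sketch, assuming NumPy and a set of already fitted coefficients; the coefficient values, the series length `n`, and the function name `trend_values` are made up for illustration.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def trend_values(b, t):
    """Evaluate T_t = sum of b_i * t^i for each t, with b = [b_0, ..., b_k]."""
    return P.polyval(np.asarray(t, dtype=float), b)


# Hypothetical fitted coefficients of a second-order trend
b = [3.0, 2.0, 0.5]
n = 5  # assumed length of the observed series

in_sample = trend_values(b, np.arange(1, n + 1))  # current periods 1..n
forecast = trend_values(b, [n + 1, n + 2])        # future periods t > n
```

With these coefficients the forecast for $t = 6$ is $3 + 2 \cdot 6 + 0.5 \cdot 36 = 33$, and for $t = 7$ it is $3 + 14 + 24.5 = 41.5$.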

I should note right away that polynomial regression models only the trend component of a time series. A full time series model also includes other components, which are beyond the scope of this article.

In practice, I have personally never encountered a time series with a polynomial trend of order higher than 2. This explains the prevalence of the linear and parabolic regression models as particular cases of the polynomial one.