Tomorrow there will be official press releases about the merger of MIPS, a Silicon Valley old-timer, with the young AI company Wave Computing. News of the deal leaked to the media yesterday, and CNet, Forbes, EE Times and a bunch of high-tech websites soon published articles about it. So today Derek Meyer, the president of the merged company (in the photo below on the right), said “okay, spread the info among friends”, and I decided to write a few words about the technologies and people associated with this event.

The main investor in MIPS and Wave is the billionaire Dado Banatao (pictured below, center left), who in the 1980s founded Chips & Technologies, which made chipsets for early personal computers. There are other celebrities at Wave + MIPS, such as Stephen Johnson (pictured above right), the author of the most popular C compiler of the early 1980s. MIPS is well known in Russia. The designer Smriti (in the photo on the left) is holding a board from Zelenograd, home to the MIPS licensees ELVEES-NeoTek and Baikal Electronics.

Wave has already released a chip that consists of thousands of computing units, essentially simplified processors. This design is optimized for very fast neural network computation. Wave has a compiler that turns a dataflow graph into a configuration file for this structure.

The combined company will create a chip that mixes such computing units with multithreaded MIPS cores. Today Wave sells its technology as a box for data centers, for running neural networks in the cloud. Future chips will go into embedded devices.

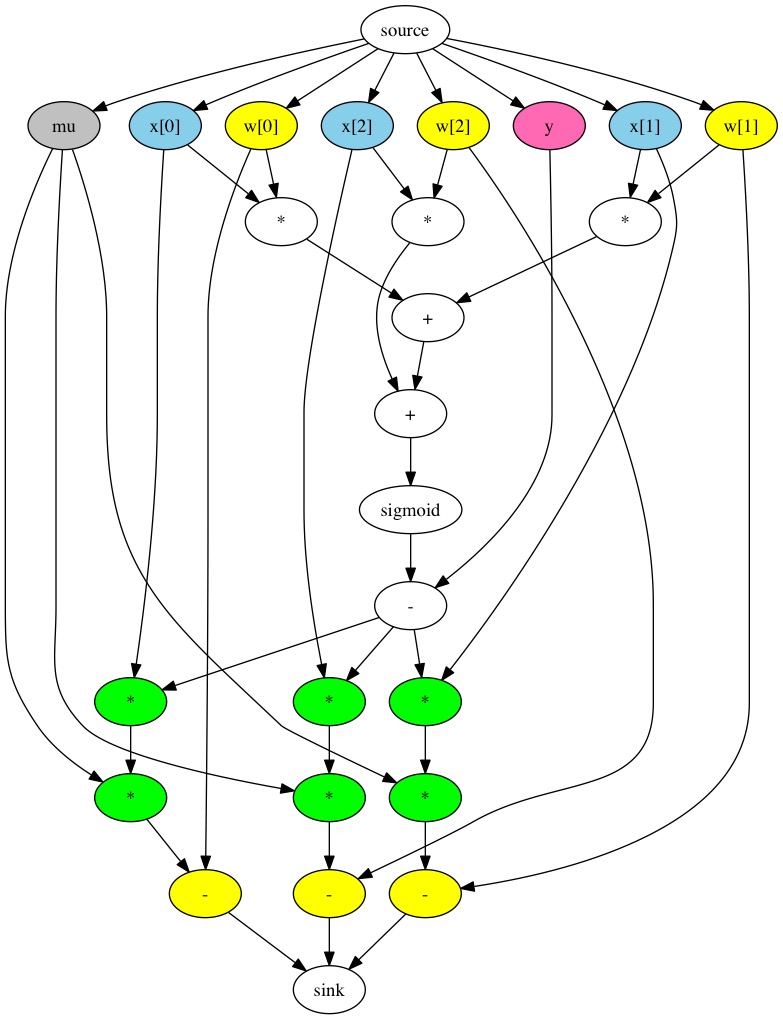

Neural networks are traditionally represented as a dataflow graph. This is a graph whose nodes contain constants, variables, and arithmetic operations on scalars, vectors, and matrices:

Google created the TensorFlow library, an API for building such graphs and running computations on the network, both inference and training with backpropagation. This API is most often used from Python, where the code looks like this:

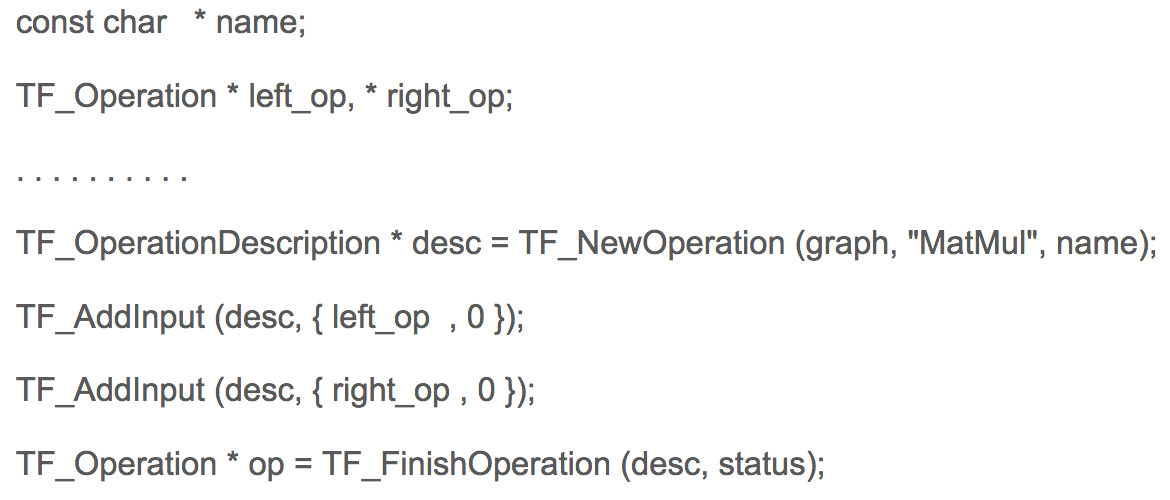

Here the Python code in the example above relies on overloaded arithmetic operators, which do not actually compute anything but build a graph in memory. In C, the code for building a graph in TensorFlow looks like this:

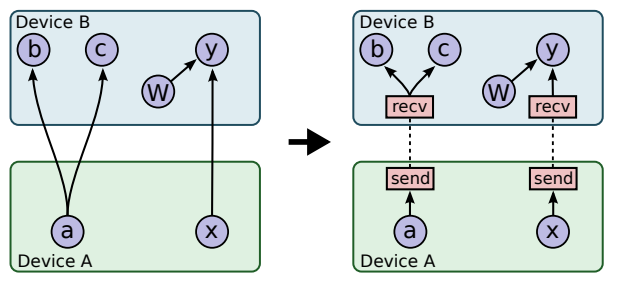
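To make the "operators build a graph instead of computing" idea concrete, here is a minimal sketch in plain Python. It is not TensorFlow code and does not use the TensorFlow API; the names `Node`, `as_node`, `variable` and `evaluate` are hypothetical and exist only for this illustration, and only scalar addition and multiplication are supported.

```python
class Node:
    """One vertex of a dataflow graph: a constant, a variable or an operation."""

    def __init__(self, op, inputs=(), value=None, name=None):
        self.op = op                  # "const", "var", "add" or "mul"
        self.inputs = tuple(inputs)   # upstream nodes feeding this one
        self.value = value            # payload for constants
        self.name = name              # lookup key for variables

    # Overloaded operators do no arithmetic; they only record a new node.
    def __add__(self, other):
        return Node("add", (self, as_node(other)))

    def __mul__(self, other):
        return Node("mul", (self, as_node(other)))


def as_node(x):
    """Wrap a plain Python number into a constant node."""
    return x if isinstance(x, Node) else Node("const", value=x)


def variable(name):
    return Node("var", name=name)


def evaluate(node, feed):
    """Walk the graph recursively and compute its value for the given inputs."""
    if node.op == "const":
        return node.value
    if node.op == "var":
        return feed[node.name]
    args = [evaluate(i, feed) for i in node.inputs]
    if node.op == "add":
        return args[0] + args[1]
    if node.op == "mul":
        return args[0] * args[1]
    raise ValueError(f"unknown op {node.op!r}")


x = variable("x")
y = x * 3 + 2                     # nothing is computed here, y is a graph
print(y.op, [n.op for n in y.inputs])
print(evaluate(y, {"x": 5}))      # 17
```

The expression `x * 3 + 2` only allocates nodes; the arithmetic happens later, when the graph is handed to an evaluator. That separation is what lets a compiler map the same graph onto very different hardware.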

At Google I have a friend, the Ukrainian programmer Mikhail Simbirsky, who uses TensorFlow from Python. Google uses neural networks, for example, to analyze user behavior in order to target advertising. Some neural network training runs at Google take days or weeks, even though Google uses NVIDIA GPUs and its own accelerators. This is not an easy task, since data transfer between processors and the GPU takes a lot of time:

One problem with designs that pair processors with GPUs is that the GPU sits idle for long stretches:

Another problem is the insufficient bandwidth of memory interfaces. Wave combined with MIPS intends to solve both problems. In the new products the processor will not use the accelerator as a coprocessor; instead, the two will work together.

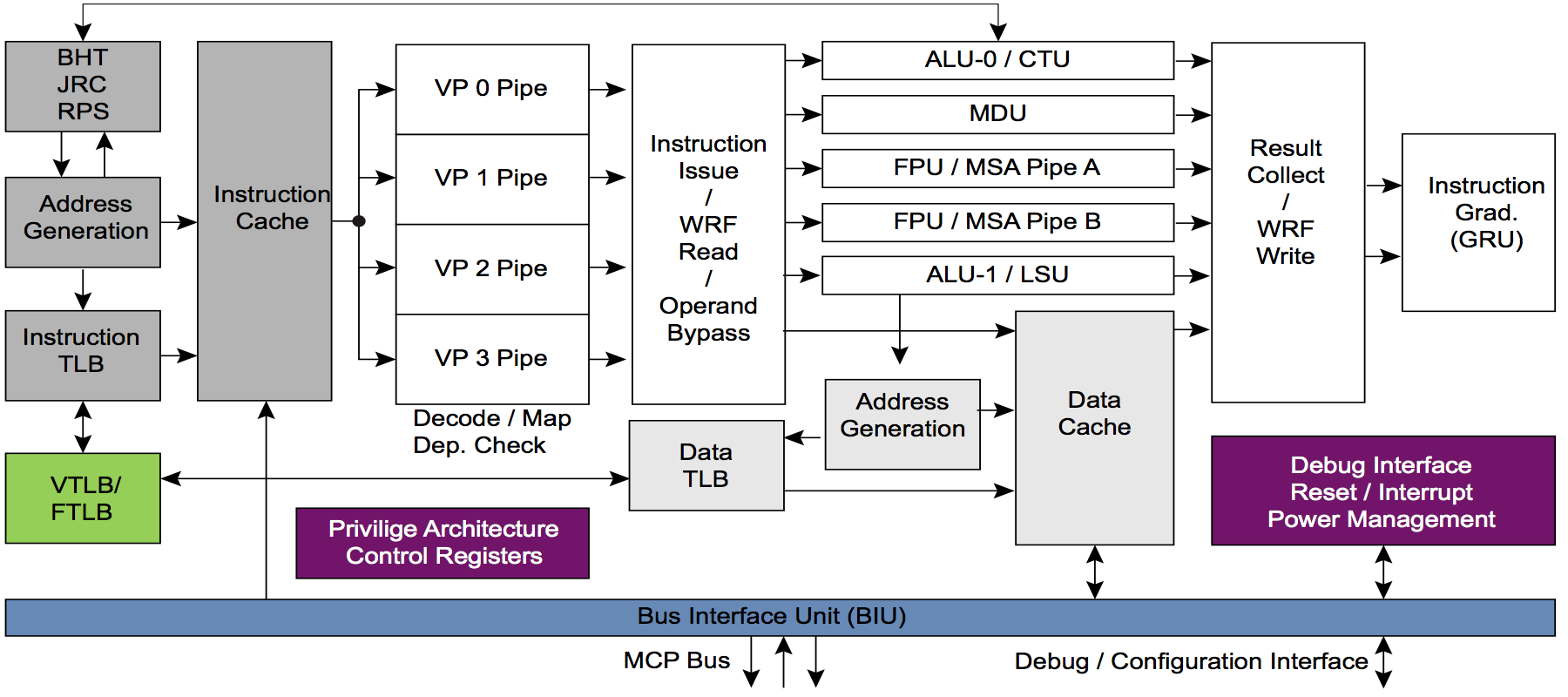

For this, MIPS cores will be modified to ultimately create a standard AI hardware platform. The advantage of the MIPS I6400 / I6500 (Samurai / Daimyo) and the MIPS I7200 (which was licensed by MediaTek) is multithreading. ARM cores do not have hardware multithreading. Here is what a multithreaded pipeline looks like on the MIPS I6400 core:

And now a question for the most savvy commenters: what do you think is the advantage of multithreading for a combination of a CPU and a hardware accelerator? In particular, for the accelerator from Wave, which is a variant of the so-called CGRA (Coarse-Grained Reconfigurable Array).

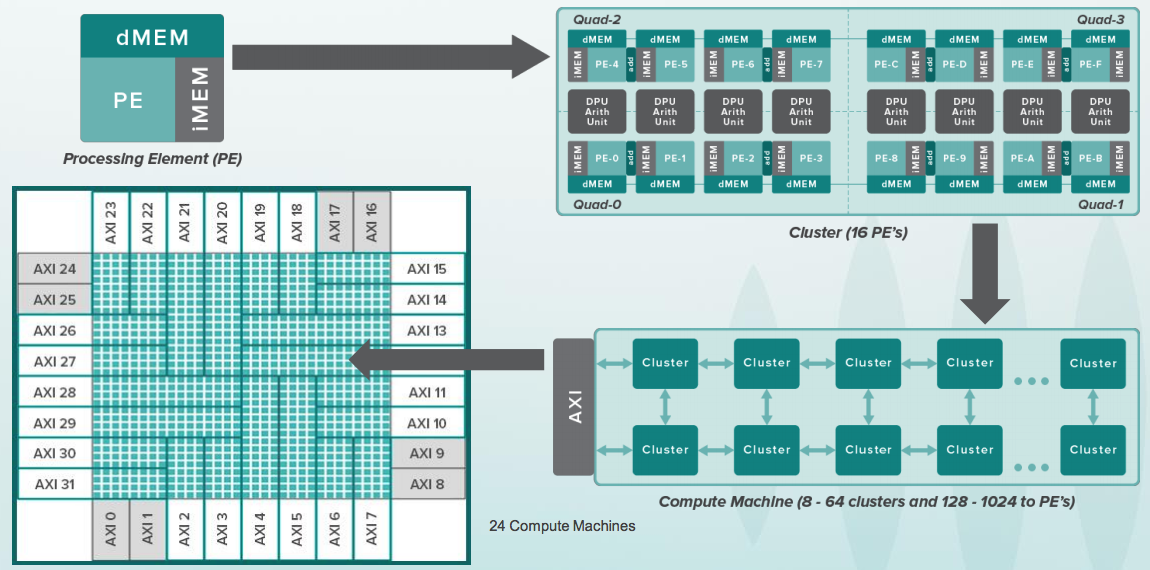

If you are familiar with FPGAs (Field Programmable Gate Arrays), the idea of a CGRA is somewhat similar, but a CGRA works not with individual bits but with whole buses of 8 to 64 bits. Each cell contains an ALU, and several cells share an arithmetic coprocessor. This is what the whole hierarchy looks like:

This is what one reconfigurable cell looks like. It has a small buffer with instructions that resemble the simple commands of accumulator-based 8-bit processors, for example the 6502, which was used in the first Apple computers. The processors in those ancient Apples ran at a couple of megahertz, while the cells in the CGRA run at several gigahertz. In addition, an Apple had one processor, and here there are 16,000 such cells:

The Wave die is clearly huge, so it has to use locally synchronous circuits with a separate clock signal for each group of cells. But the biggest problem is not hardware but software. The graph for computing the network has to be spread across this heap of units with precise knowledge of what will be computed in which cycle. This is called static scheduling. Therefore, Wave hired a bunch of compiler engineers, including the most famous veteran of them all, Stephen Johnson, who was there at the beginning with Kernighan and Ritchie. Here is what Dennis Ritchie wrote about Stephen Johnson:

In the 1980s, C quickly gained popularity, and compilers became available on virtually every machine and operating system; in particular, it became popular as a programming language for personal computers, both among developers of commercial software for these machines and among ordinary users fond of programming. At the beginning of the decade, virtually every compiler was based on Johnson's pcc; by 1985 there were already many compilers created by independent developers.
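As an aside on the static scheduling mentioned above, here is a toy sketch of the idea: every operation of a dataflow graph gets a fixed cycle and a fixed cell at compile time. This is not Wave's compiler or algorithm, just a simple greedy list scheduler written under loud assumptions: every operation takes exactly one cycle, any cell can run any operation, and moving data between cells is free. The function name `static_schedule` and the operation names are hypothetical.

```python
def static_schedule(deps, num_cells):
    """Assign each operation a (cycle, cell) slot before anything runs.

    deps maps an operation name to the list of operations it depends on.
    Assumptions of this sketch: one cycle per operation, identical cells,
    zero cost for routing data between cells.
    """
    schedule = {}
    remaining = dict(deps)
    cycle = 0
    while remaining:
        # Ready operations: all inputs were placed in an earlier cycle.
        # The list is built before this cycle's placements are made.
        ready = sorted(op for op, inputs in remaining.items()
                       if all(p in schedule for p in inputs))
        if not ready:
            raise ValueError("dependency cycle or unknown operation")
        # Fill the available cells; leftover operations wait for the next cycle.
        for cell, op in enumerate(ready[:num_cells]):
            schedule[op] = (cycle, cell)
            del remaining[op]
        cycle += 1
    return schedule


# Graph for (a * b) + c on a machine with only two cells.
deps = {
    "load_a": [],
    "load_b": [],
    "load_c": [],
    "mul": ["load_a", "load_b"],
    "add": ["mul", "load_c"],
}
schedule = static_schedule(deps, num_cells=2)
for op, (cycle, cell) in sorted(schedule.items(), key=lambda kv: kv[1]):
    print(f"cycle {cycle}, cell {cell}: {op}")
```

A real compiler for such a chip also has to account for routing delays between cells, different operation latencies and limited instruction buffers, which is what makes the problem hard.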

When I was 18 (in 1988) and a student at MIPT, Stephen Johnson was my god. I participated in the development of two compilers based on his Portable C Compiler. One compiler was for the Elektronika SS BIS, the "Red Cray", the Soviet analogue of the Cray-1 vector supercomputer. The second compiler was for the Orbit 20-700, an embedded computer in Soviet MiG-29 fighters and others in the early 1980s.

So I just had to take a picture with Stephen Johnson. He told me about other tools he built, both for Unix and for design automation, automatic profiling, and so on.

And, of course, I also had my picture taken with the investor behind the whole thing, Dado Banatao. Long ago, Dado Banatao created the chipset for the first PCs. He debugged the drivers together with Ballmer. “Sometimes Bill Gates came into the room and got in our way,” says Dado Banatao. Now, according to the Internet, he is worth five billion dollars. He is the most famous high-tech Filipino; he is setting up an AI center and leads other educational programs in his homeland.

Dado Banatao made the most money at Marvell. Here is its office in Santa Clara in the rays of the evening sun:

Many people at Wave used to work at MIPS. And some of the MIPS people were at Silicon Graphics, since MIPS was part of Silicon Graphics in the 1990s. At that time, MIPS processors powered the graphics workstations used in Hollywood to make the first films with realistic computer graphics, such as “Jurassic Park”. Here are those graphics workstations, along with the Siberian girl Irina, at the Computer History Museum in Mountain View, California:

Today's party in honor of tomorrow's official announcement and yesterday's press coverage ended with cake and champagne:

Tomorrow there will be a lot of work, from Verilog RTL (my direct responsibility) to discussions of architecture and applications, and even conversations with data scientists (they feel like they come from a different universe, and the feeling is mutual among electronic engineers and compiler writers).