A year ago, I built a data center out of a stack of Intel NUCs. It ran a software-defined storage system that even the cleaning lady could not break, although she knocked the cluster over a couple of times.

This time we decided to put vSAN through its paces on several servers in a solid configuration to fully evaluate the performance and fault tolerance of the solution. We have, of course, had a number of successful production deployments at our customers, where vSAN handles the tasks it was given, but we had never been able to run comprehensive testing. Today I want to share the test results.

We will put the storage under heavy load, take it down, and test its fault tolerance in every way we can. Details below.

What is VMware vSAN, and why did we get into it?

Take an ordinary server cluster for virtual machines. It consists of many independent components: the hypervisor runs directly on the hardware, and the storage is configured separately on the basis of DAS, NAS or SAN. Cold data sits on HDDs, hot data on SSDs. Everything is familiar. But this raises the problem of deploying and administering the whole zoo. It gets especially fun when individual elements of the system come from different vendors: when something breaks, juggling support tickets between different manufacturers has a special atmosphere of its own.

In this model the storage is a separate piece of hardware that the servers simply see as disks to write to.

And then there are hyperconverged systems. They give you a universal building block that takes on all the headaches of coordinating the network, disks, processors, memory and the virtual machines that run on them. Everything is managed from a single console, and when needed you can simply add a couple more blocks to absorb a growing load. Administration becomes much simpler and more standardized.

VMware vSAN is exactly such a solution for building hyperconverged infrastructure. Its key feature is tight integration with VMware vSphere, the leading virtualization platform, which lets you deploy software-defined storage for virtual machines on the virtualization servers themselves in minutes. vSAN takes direct control of I/O at a low level, distributes the load optimally, caches reads and writes, and does a lot more while putting minimal load on memory and CPU. The system becomes somewhat less transparent, but in the end everything works, so to speak, automagically. vSAN can be configured either as hybrid storage or as an all-flash version. It scales both horizontally, by adding new nodes to the cluster, and vertically, by adding disks to individual nodes. Managing it through the vSphere Web Client is very convenient thanks to the tight integration with other VMware products.

We settled on a pure all-flash configuration, which by our calculations should be optimal in terms of price and performance. Total capacity is, of course, somewhat lower than in a hybrid configuration with magnetic disks, but we wanted to check how far this can be offset with erasure coding and with inline deduplication and compression. As a result, storage efficiency approaches that of hybrid solutions while performance is significantly higher and the overhead minimal.
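To make the capacity trade-off concrete, here is a minimal Python sketch that estimates usable capacity for the four-node bench described in the UPD (6 x 1.6 TB capacity SSDs per node) under RAID-1 mirroring and erasure coding. The overhead factors and minimum host counts (3 for RAID-1 with FTT=1, 4 for RAID-5, 6 for RAID-6) are the standard vSAN ones as I understand them; the deduplication ratio is a purely hypothetical parameter, since no measured ratio is reported here. Free-space reserve and metadata overhead are ignored.

```python
# Rough usable-capacity estimate for the test cluster. A sketch, not VMware's sizing tool:
# it ignores the recommended free-space reserve and on-disk format/metadata overhead.

NODES = 4
CAPACITY_DISKS_PER_NODE = 6  # Toshiba HK4E per node (from the bench description)
DISK_TB = 1.6

# Raw space consumed per byte of protected data for common vSAN policies.
POLICY_OVERHEAD = {
    "RAID-1 (FTT=1)": 2.0,    # two full mirror copies
    "RAID-5 (FTT=1)": 4 / 3,  # 3 data + 1 parity
    "RAID-6 (FTT=2)": 6 / 4,  # 4 data + 2 parity
}
# Minimum number of hosts vSAN needs for each layout.
MIN_HOSTS = {"RAID-1 (FTT=1)": 3, "RAID-5 (FTT=1)": 4, "RAID-6 (FTT=2)": 6}


def usable_tb(raw_tb: float, overhead: float, dedup_ratio: float = 1.0) -> float:
    """Raw space divided by policy overhead, scaled by a HYPOTHETICAL dedup/compression ratio."""
    return raw_tb / overhead * dedup_ratio


def capacity_report(nodes: int = NODES, dedup_ratio: float = 1.0) -> dict:
    """Usable TB per policy, skipping layouts the cluster is too small to support."""
    raw_tb = nodes * CAPACITY_DISKS_PER_NODE * DISK_TB
    return {
        policy: round(usable_tb(raw_tb, overhead, dedup_ratio), 1)
        for policy, overhead in POLICY_OVERHEAD.items()
        if nodes >= MIN_HOSTS[policy]
    }


print(capacity_report())                 # no data reduction
print(capacity_report(dedup_ratio=1.5))  # assumed 1.5:1 reduction, purely illustrative
```

On a four-host cluster the sketch drops RAID-6, because vSAN needs at least six hosts for it.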

How we tested

To test performance we used HCIBench v1.6.6, which automates creating a set of virtual machines and then collecting the results. The performance testing itself is done by Vdbench, one of the most popular synthetic load-testing tools. The hardware was tested in the following configurations:

- All-flash, 2 disk groups, each with 1x NVMe SSD Samsung PM1725 800 GB + 3x SATA SSD Toshiba HK4E 1.6 TB.

- All-flash, 1 disk group: 1x NVMe SSD Samsung PM1725 800 GB + 6x SATA SSD Toshiba HK4E 1.6 TB.

- All-flash, 1 disk group: 1x NVMe SSD Samsung PM1725 800 GB + 6x SATA SSD Toshiba HK4E 1.6 TB + Space Efficiency (deduplication and compression).

- All-flash, 1 disk group: 1x NVMe SSD Samsung PM1725 800 GB + 6x SATA SSD Toshiba HK4E 1.6 TB + Erasure Coding (RAID 5/6).

- All-flash, 1 disk group: 1x NVMe SSD Samsung PM1725 800 GB + 6x SATA SSD Toshiba HK4E 1.6 TB + Erasure Coding (RAID 5/6) + Space Efficiency (deduplication and compression).

During testing we emulated three different volumes of active data used by applications: 1 TB (250 GB per server), 2 TB (500 GB per server) and 4 TB (1 TB per server).
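Whether a working set "fits into the cache" can be estimated with simple arithmetic. The sketch below assumes that in all-flash vSAN 6.x the cache device acts purely as a write buffer and that at most 600 GB of it is used per disk group; it compares that against the per-server working sets listed above for one and two disk groups. It ignores the fact that with mirroring each node also stores replica components of other nodes' data, so the real per-node footprint can be larger, and it is not a reconstruction of the results table.

```python
# Does each emulated working set fit into the cache tier of one node?
# ASSUMPTION: in all-flash vSAN 6.x the cache device acts as a write buffer and
# at most 600 GB of it is used per disk group.

CACHE_DEVICE_GB = 800        # Samsung PM1725, one per disk group
WRITE_BUFFER_LIMIT_GB = 600  # assumed per-disk-group cap

# Active data per server for the 1 TB / 2 TB / 4 TB cluster-wide working sets.
WORKING_SET_PER_NODE_GB = {"1 TB": 250, "2 TB": 500, "4 TB": 1000}


def write_buffer_per_node(disk_groups: int) -> int:
    """One cache device per disk group, each capped at the write-buffer limit."""
    return disk_groups * min(CACHE_DEVICE_GB, WRITE_BUFFER_LIMIT_GB)


def fits_in_cache(disk_groups: int) -> dict:
    """Map each working set to True if its per-node share fits in the write buffer."""
    buffer_gb = write_buffer_per_node(disk_groups)
    return {name: gb <= buffer_gb for name, gb in WORKING_SET_PER_NODE_GB.items()}


for groups in (1, 2):
    print(f"{groups} disk group(s):", fits_in_cache(groups))
```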

For each configuration, the same set of tests was performed with the following load profiles:

- 0% read / 100% write, 50% random, 4K block size.

- 30% read / 70% write, 50% random, 4K block size.

- 70% read / 30% write, 50% random, 4K block size.

- 100% read / 0% write, 50% random, 4K block size.
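For readers who want to reproduce the profiles outside HCIBench, here is a hedged Python sketch that renders equivalent Vdbench parameter text using the standard Vdbench keywords `xfersize`, `rdpct` and `seekpct` (where `seekpct=50` means half the I/Os are random). The device paths, the 30-second reporting interval and the way warm-up is folded into `elapsed` are assumptions; the files HCIBench actually generated are not shown here. Defaults follow the load parameters in the UPD: 10 VMDKs per VM, 1 thread per VMDK, 1800 s warm-up and 3600 s test.

```python
# Sketch: render Vdbench parameter text for the four load profiles.
# Device paths are HYPOTHETICAL; HCIBench generates its own files.

PROFILES = [(0, 100), (30, 70), (70, 30), (100, 0)]  # (read %, write %)


def vdbench_params(read_pct: int, vmdks: int = 10, threads: int = 1,
                   warmup_s: int = 1800, test_s: int = 3600) -> str:
    """One parameter file for one test VM: 4 KiB blocks, 50% random, max I/O rate."""
    lines = []
    for i in range(vmdks):
        dev = "/dev/sd" + chr(ord("b") + i)  # assumes sda is the guest OS disk
        lines.append(f"sd=sd{i + 1},lun={dev},openflags=o_direct,threads={threads}")
    # rdpct = share of reads; seekpct=50 makes half of the I/Os random.
    lines.append(f"wd=wd1,sd=sd*,xfersize=4k,rdpct={read_pct},seekpct=50")
    # ASSUMPTION: warm-up seconds are counted inside elapsed, so elapsed = warmup + test.
    lines.append(f"rd=run1,wd=wd1,iorate=max,warmup={warmup_s},"
                 f"elapsed={warmup_s + test_s},interval=30")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    for read_pct, write_pct in PROFILES:
        print(f"* {read_pct} read / {write_pct} write")  # '*' starts a Vdbench comment
        print(vdbench_params(read_pct))
```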

The first and fourth profiles show how the system behaves under maximum and minimum load (pure writes and pure reads). The second and third are as close as possible to typical real-world use: for example, 30 read / 70 write is typical of VDI, and the loads I have encountered in production were very close to these. Along the way we also tested how effective vSAN's data management mechanisms are.

Overall, the system performed very well. Based on the results, we can count on roughly 20 thousand IOPS per node. For ordinary and heavily loaded workloads that is a good figure, especially with latencies of about 5 ms. The charts with the results:

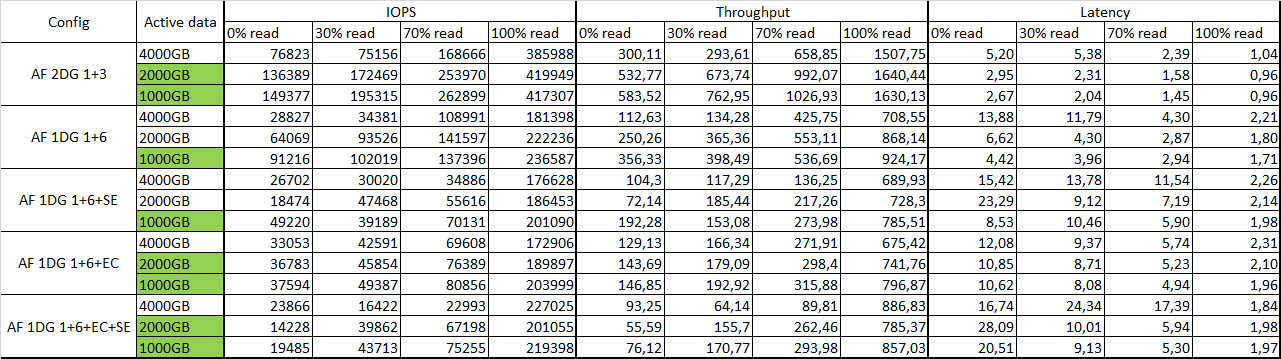

Summary table with test results:

Green marks the cases where the active data fits entirely into the cache.
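The figure of roughly 20 thousand IOPS per node at about 5 ms latency can be sanity-checked with Little's law: outstanding I/Os = IOPS x latency. Assuming each Vdbench thread keeps exactly one I/O in flight, the load parameters in the UPD (10 VMs per node, 10 VMDKs per VM, 1 thread per VMDK) give 100 outstanding I/Os per node, which is consistent with the published numbers. This is a back-of-the-envelope check, not part of the original measurements.

```python
# Consistency check of the published figures with Little's law (L = lambda * W).
# ASSUMPTION: each Vdbench thread keeps exactly one I/O in flight at all times.

VMS_PER_NODE = 10
VMDKS_PER_VM = 10
THREADS_PER_VMDK = 1
BLOCK_BYTES = 4 * 1024  # 4K blocks


def outstanding_ios_per_node() -> int:
    return VMS_PER_NODE * VMDKS_PER_VM * THREADS_PER_VMDK


def latency_ms(iops: float, outstanding: int) -> float:
    """W = L / lambda, converted to milliseconds."""
    return outstanding * 1000 / iops


def throughput_mib_s(iops: float) -> float:
    return iops * BLOCK_BYTES / 2**20


q = outstanding_ios_per_node()
print(q, latency_ms(20_000, q), throughput_mib_s(20_000))  # prints: 100 5.0 78.125
```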

Fault tolerance

I took nodes down one after another, ignoring the machine's protests, and watched how the system as a whole reacted. After the first node went down, nothing happened at all apart from a small performance drop of about 10-15%. When I took down the second node, some virtual machines went offline, but the rest kept running with slight performance degradation. Overall, the resilience was pleasing. I then restarted all the nodes: the system thought for a while, resynchronized without any problems, and all virtual machines started normally, just as it once did on the NUCs. Data integrity was not affected either, which was very pleasing.
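The behaviour after the second node failure matches what a simple quorum model predicts for the default vSAN policy (RAID-1 mirroring, FTT=1: two replicas plus a witness on three different hosts). The sketch below enumerates all placements on four hosts and counts which objects stay accessible. It assumes uniform placement, one vote per component and no rebuild between failures; real vSAN places components itself, a VM consists of several objects, and absent components are rebuilt after a repair delay, so the exact share will differ. Host names are hypothetical.

```python
# Sketch: which RAID-1 FTT=1 objects survive when hosts fail (simplified placement model).
from itertools import combinations

HOSTS = ["esx1", "esx2", "esx3", "esx4"]  # hypothetical host names


def placements(hosts):
    """Every way to put replica A, replica B and a witness on three distinct hosts.

    Roles do not matter for the quorum check below, so host triples are enough."""
    return list(combinations(hosts, 3))


def accessible(placement, failed) -> bool:
    # One vote per component; the object needs a majority (2 of 3).
    # Any 2 of {replica A, replica B, witness} include at least one full data replica.
    alive = sum(1 for h in placement if h not in failed)
    return alive >= 2


def surviving_share(failed) -> float:
    failed = set(failed)
    objs = placements(HOSTS)
    return sum(accessible(p, failed) for p in objs) / len(objs)


print(surviving_share([]), surviving_share(["esx1"]), surviving_share(["esx1", "esx2"]))
# prints: 1.0 1.0 0.5
```

In this model a single host failure is always survivable, while losing two of four hosts leaves half of the objects without a majority of their components, which is consistent with some VMs going offline while others keep running.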

General impressions

Software-defined storage (SDS) is already quite a mature technology.

Today, one of the key obstacles to adopting vSAN is the relatively high license cost in rubles. If you build the infrastructure from scratch, traditional storage in a similar configuration may turn out to cost about the same. At the same time, it will be less flexible in terms of both administration and scaling. So today, when choosing storage for virtual machines on the vSphere platform, it is well worth weighing all the pros and cons of traditional solutions against software-defined storage.

You can build a similar solution on Ceph or GlusterFS, but in a VMware infrastructure vSAN wins you over with its tight integration with the other components, simple administration and deployment, and noticeably high performance, especially on a small number of nodes. So if you already run VMware infrastructure, deployment will be much easier: you literally make a dozen clicks and get working SDS out of the box.

Another reason to deploy vSAN is branch offices: you can mirror two nodes at a remote site and keep a witness host in the central data center. This configuration gives you fault-tolerant storage for virtual machines, with all of vSAN's technologies and performance, on just two nodes. By the way, for such deployments vSAN has a separate licensing scheme based on the number of virtual machines, which can reduce costs compared with the traditional per-CPU vSAN licensing.

Architecturally, an all-flash solution requires 10 Gb Ethernet with two links per node for adequate traffic distribution. Compared with traditional systems, you save rack space and save on the SAN network by replacing Fibre Channel with the more versatile Ethernet. Fault tolerance requires at least three nodes: two of them store replicas of the data objects, and the third stores witness components for those objects, which solves the split-brain problem.
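The role of the witness can be illustrated with a majority rule, simplified here to one vote per component (real vSAN may assign votes differently). The hypothetical component names below stand for two data replicas and a witness; the same logic applies to the two-node branch setup with a witness host in the data center.

```python
# Simplified vote-based quorum: a partition may use an object only if it
# sees more than half of the object's votes. One vote per component is an assumption.

WITH_WITNESS = {"replica_a": 1, "replica_b": 1, "witness": 1}
NO_WITNESS = {"replica_a": 1, "replica_b": 1}


def has_quorum(side: set, votes: dict) -> bool:
    """True if the components reachable from this side hold a strict majority of votes."""
    return 2 * sum(votes[c] for c in side if c in votes) > sum(votes.values())


# Network partition: node A is cut off, node B can still reach the witness host.
print(has_quorum({"replica_a"}, WITH_WITNESS),
      has_quorum({"replica_b", "witness"}, WITH_WITNESS))  # prints: False True

# Without a witness neither half has a majority, so the object goes offline on both sides.
print(has_quorum({"replica_a"}, NO_WITNESS),
      has_quorum({"replica_b"}, NO_WITNESS))  # prints: False False
```

Exactly one side keeps write access, so the two replicas can never diverge.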

Now a few questions for you:

- When you choose a storage system, which criteria matter most to you?

- What obstacles do you see to adopting software-defined storage?

- Which software-defined storage systems are you seriously considering for deployment?

UPD: I completely forgot to describe the test bench configuration and load parameters:

1. Hardware description:

Servers: 4x Dell R630, each with:

• 2xE5-2680v4

• 128GB RAM

• 2x10GbE

• 2x1GbE for management / VM Network

• Dell HBA330

Storage config #1:

2x PM1725 800 GB

6x Toshiba HK4E 1.6 TB

Storage config #2:

1x PM1725 800 GB

6x Toshiba HK4E 1.6 TB

2. Software versions:

vSphere 6.5 U1 (build 7967591, vSAN 6.6.1), i.e. with the post-Meltdown/Spectre patches

vCenter 6.5U1g

Drivers for vSAN and ESXi for all components

LACP for vSAN and vMotion traffic (with NIOC shares / limits / reservation enabled)

All other settings are default

3. Load parameters:

• HCIBench 1.6.6

• Oracle Vdbench - 04/04/06

• 40 VMs per cluster (10 per node)

• 10 VMDKs per VM

• VMDK size 10 GB; workload set percentage 100/50/25%

• Warm-up time 1800 s (0.5 hour), test time 3600 s (1 hour)

• 1 thread per VMDK
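These load parameters also explain where the three working-set sizes come from. The sketch assumes HCIBench's workload set percentage is the share of each VMDK that Vdbench touches; under that assumption 40 VMs x 10 VMDKs x 10 GB gives 4000 GB, and 100/50/25% reproduce the 4 TB, 2 TB and 1 TB working sets (1000, 500 and 250 GB per node). The second function is a rough, hypothetical wall-clock estimate that assumes every combination of the five configurations, four profiles and three working sets ran with its own warm-up; how the runs were actually scheduled is not stated.

```python
# Cross-check of the HCIBench parameters against the working sets quoted earlier.
# ASSUMPTION: "workload set percentage" is the share of each VMDK that Vdbench touches.

VMS, VMDKS_PER_VM, VMDK_GB, NODES = 40, 10, 10, 4
WORKLOAD_SET_PCT = (100, 50, 25)


def working_sets() -> dict:
    """Percentage -> (active GB in the cluster, active GB per node)."""
    total_gb = VMS * VMDKS_PER_VM * VMDK_GB
    return {pct: (total_gb * pct // 100, total_gb * pct // 100 // NODES)
            for pct in WORKLOAD_SET_PCT}


def bench_hours(configs: int = 5, profiles: int = 4,
                warmup_s: int = 1800, test_s: int = 3600) -> float:
    """Wall-clock estimate IF every configuration x profile x working set got its own warm-up."""
    runs = configs * profiles * len(WORKLOAD_SET_PCT)
    return runs * (warmup_s + test_s) / 3600


print(working_sets())  # prints: {100: (4000, 1000), 50: (2000, 500), 25: (1000, 250)}
print(bench_hours())   # prints: 90.0
```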