(Thanks to Sergey G. Brester, sebres, for the idea for the headline.)

Colleagues, the purpose of this article is to share our experience from a year of trial operation of a new class of IDS solutions based on deception technologies.

To keep the presentation logically coherent, I think it is necessary to start with the premises. So, the problem statement:

- Targeted attacks are the most dangerous type of attack, even though their share in the total number of threats is small.

- No guaranteed effective means of protecting the perimeter (or combination of such means) has been invented yet.

- As a rule, targeted attacks take place in several stages. Breaching the perimeter is only one of the initial stages, which (you can throw stones at me) does not do much damage to the “victim”, unless of course it is a DeOS (Destruction of Service) attack (ransomware encryptors and the like). The real “pain” begins later, when the captured assets start being used for pivoting and for developing the attack “in depth”, and we fail to notice it.

- Since we begin to incur real losses only when the attackers reach the targets of the attack (application servers, DBMS, data storage, repositories, elements of critical infrastructure), it is logical that one of the tasks of the information security service is to interrupt attacks before this sad event. But in order to interrupt something, you first need to know about it. And the sooner, the better.

- Accordingly, for successful risk management (that is, reducing the damage from targeted attacks), it is critical to have tools that ensure a minimal TTD (time to detect: the time from the moment of intrusion to the moment the attack is detected). Depending on the industry and region, this period averages 99 days in the United States, 106 days in the EMEA region and 172 days in the APAC region (M-Trends 2017, A View From the Front Lines, Mandiant).

- What does the market offer?

- "Sandboxes". Another preventive control that is far from ideal. There are plenty of effective techniques for detecting and circumventing sandboxes or whitelisting solutions. The guys from the "dark side" here are still one step ahead.

- UEBA (User and Entity Behavior Analytics). In my opinion, this is something for the distant future. In practice, it is still very expensive, unreliable, and requires a very mature and stable IT and information security infrastructure that already has all the tools that will generate data for behavioral analysis.

- SIEM is a good tool for investigations, but it is not able to show anything in time, because correlation rules are essentially signatures too.

- As a result, there is a need for a tool that would:

- work successfully when the perimeter is already compromised,

- detect successful attacks in near real time, regardless of the tools and vulnerabilities used,

- not depend on signatures / rules / scripts / policies / profiles and other static things,

- not require large volumes of data and data sources for analysis,

- make it possible to identify attacks not as some kind of risk score produced by “the world's best, patented and therefore closed mathematics”, which requires additional investigation, but practically as a binary event: “Yes, we are being attacked” or “No, everything is OK”,

- be universal, scale efficiently and be feasible to implement in any heterogeneous environment, regardless of the physical and logical network topology used.

So-called deception solutions now claim the role of such a tool: solutions based on the good old concept of honeypots, but with a completely different level of implementation. This topic is definitely on the rise right now.

According to the results of the Gartner Security & Risk Management Summit 2017, deception solutions are among the top 3 strategies and tools recommended for use.

According to the TAG Cybersecurity Annual 2017 report, deception is one of the main directions of development for IDS (Intrusion Detection System) solutions.

An entire section on SCADA in the latest Cisco report on the state of IT security is based on data from one of the leaders in this market, TrapX Security (Israel), whose solution has been running in our test environment for a year now.

TrapX Deception Grid lets you deploy and operate a massive distributed IDS centrally, without increasing the licensing load or the hardware requirements. In fact, TrapX is a construction kit that lets you build, from elements of the existing IT infrastructure, one large mechanism for detecting attacks across the enterprise, a kind of distributed network "alarm system".

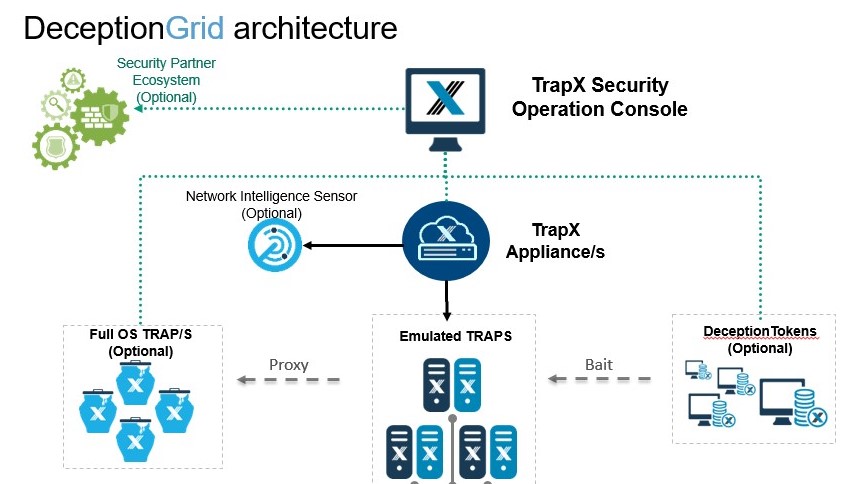

Solution Structure

In our laboratory, we constantly study and test various innovations in the field of IT security. About 50 different virtual servers are deployed here, including the components of the TrapX Deception Grid.

So, from top to bottom:

- TSOC (TrapX Security Operation Console) is the brain of the system. This is the central management console used to configure and deploy the solution and to do all the day-to-day work. Since it is a web service, it can be deployed anywhere: inside the perimeter, in the cloud or at an MSSP provider.

- TrapX Appliance (TSA) is a virtual server to which we connect, via a trunk port, the subnets we want to monitor. All our network sensors actually "live" here as well.

One TSA (mwsapp1) is deployed in our laboratory, but in practice there can be many of them. This may be necessary in large networks where there is no L2 connectivity between segments (a typical example is “holding company and subsidiaries” or “bank head office and branches”) or where the network contains isolated segments, for example an industrial process control system. In each such branch or segment you can deploy its own TSA and connect it to a single TSOC, where all information is processed centrally. This architecture lets you build distributed monitoring systems without radically restructuring the network or breaking the existing segmentation.

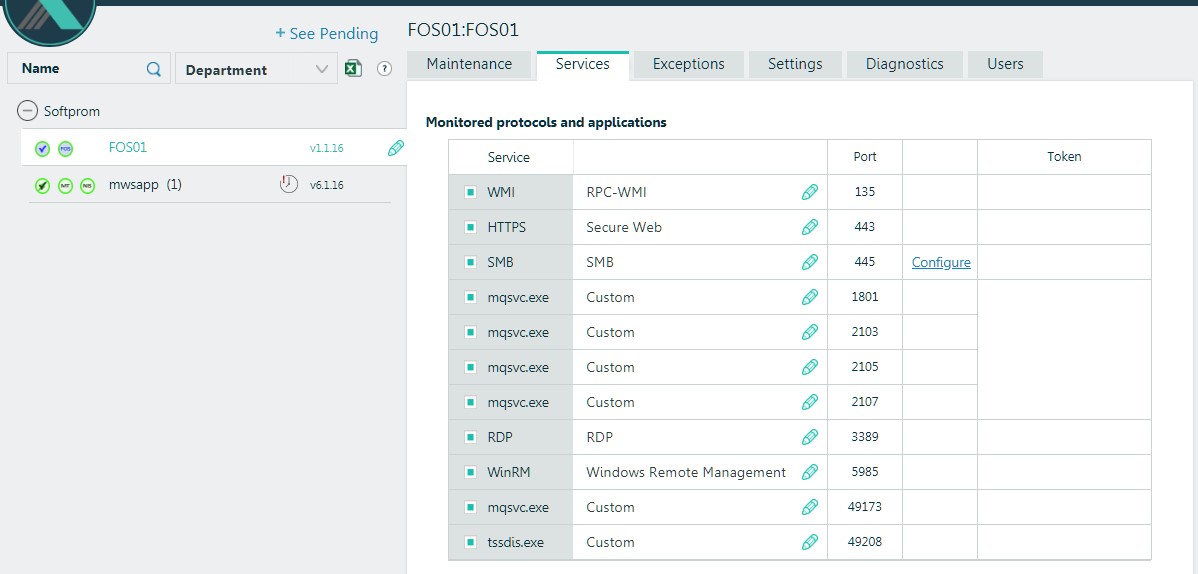

A copy of outgoing traffic can also be fed to the TSA through TAP / SPAN. If connections to known botnets, command-and-control servers or TOR sessions are detected, we will also see the result in the console. The Network Intelligence Sensor (NIS) is responsible for this. In our environment this functionality is implemented on the firewall, so we did not use it here.

- Application Traps (Full OS) are traditional honeypots based on Windows servers. You do not need many of them, since the main task of these servers is to provide IT services to the next layer of sensors or to detect attacks on business applications that can be deployed in a Windows environment. We have one such server installed in the laboratory (FOS01).

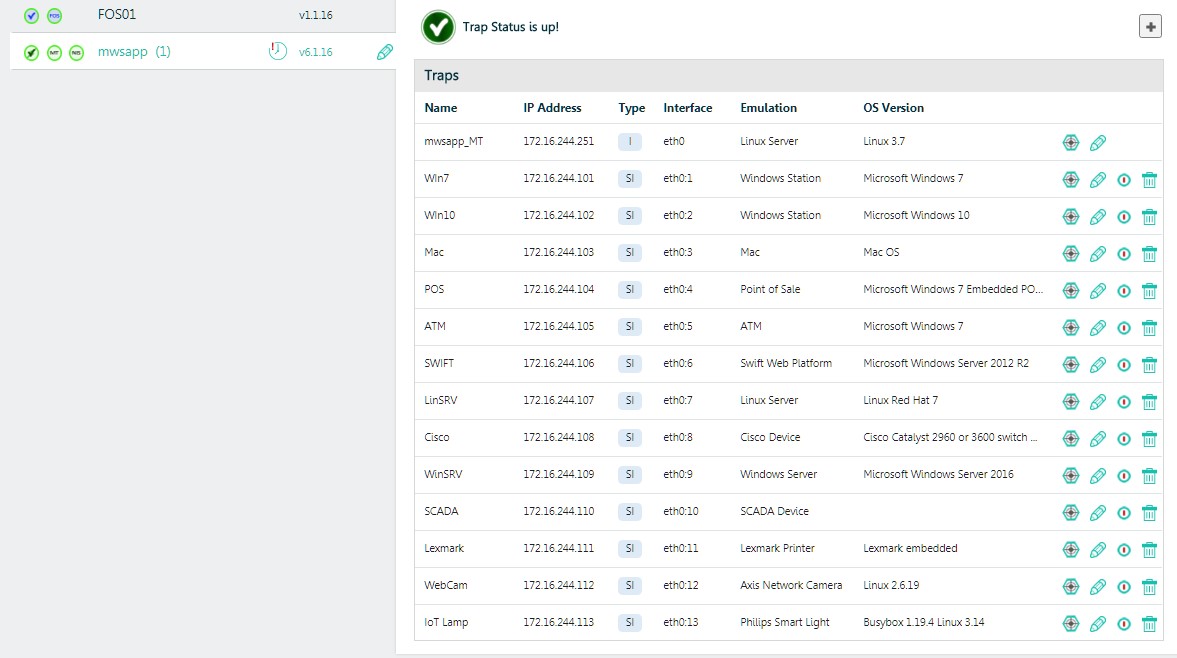

- Emulated traps are the main component of the solution, which lets us use a single virtual machine to lay a very dense "minefield" for attackers and saturate the enterprise network, all of its VLANs, with our sensors. The attacker sees such a sensor, or phantom host, as a real Windows PC or server, a Linux server or any other device we decide to show him.

For the benefit of the business and out of curiosity, we deployed “a pair of every creature”: Windows PCs and servers of different versions, Linux servers, an ATM with Windows Embedded, SWIFT Web Access, a network printer, a Cisco switch, an Axis IP camera, a MacBook, a PLC device and even a smart light bulb. 13 hosts in total. In general, the vendor recommends deploying such sensors in a quantity of at least 10% of the number of real hosts. The upper limit is the available address space.

A very important point is that each such host is not a full-fledged virtual machine that requires resources and licenses. It is a decoy, an emulation, a single process on the TSA that has a set of parameters and an IP address. Therefore, even with a single TSA we can fill the network with hundreds of such phantom hosts that will act as sensors in the alarm system. It is this technology that makes it possible to scale the honeypot concept cost-effectively across any large distributed enterprise.

From the attacker's point of view, these hosts are attractive because they contain vulnerabilities and look like relatively easy targets. The attacker sees the services on these hosts and can interact with them and attack them using standard tools and protocols (smb / wmi / ssh / telnet / web / dnp / bonjour / Modbus, etc.). But it is impossible to use these hosts to develop the attack or to run your own code.

- The combination of these two technologies (Full OS and emulated traps) gives a high statistical probability that the attacker will sooner or later run into some element of our alarm network. But how do we bring this probability close to 100%?
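The sizing rule of thumb mentioned above (decoys numbering at least 10% of the real hosts, capped by the free address space) can be sketched as follows. This is only an illustration: the function name `plan_decoys`, the subnet and the host addresses are made up for the example, and nothing here reflects how TrapX itself allocates addresses.

```python
import ipaddress


def plan_decoys(subnet: str, real_hosts: list[str], min_percent: int = 10) -> list[str]:
    """Pick free addresses in `subnet` for emulated traps.

    `min_percent` is the minimum number of decoys as a percentage of
    real hosts (the 10% rule of thumb quoted in the article).
    """
    network = ipaddress.ip_network(subnet)
    used = {ipaddress.ip_address(h) for h in real_hosts}
    free = [ip for ip in network.hosts() if ip not in used]

    # Ceiling division in integers, so 30 hosts at 10% gives 3, not 4.
    wanted = (len(used) * min_percent + 99) // 100
    # The upper limit is whatever address space is still free.
    count = min(wanted, len(free))

    # Spread the decoys across the free range instead of clustering them.
    step = len(free) / count if count else 0
    return [str(free[int(i * step)]) for i in range(count)]


# 40 hypothetical real hosts in one VLAN.
real = [f"10.0.5.{n}" for n in range(10, 50)]
decoys = plan_decoys("10.0.5.0/24", real)
print(decoys)
```

Spreading the decoys evenly is a design choice of this sketch, made so that a sequential scan meets a trap early wherever it starts.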
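Because no legitimate user or service has a reason to talk to a phantom host, detection reduces to the binary event described earlier. A minimal sketch of that idea, assuming we already have connection records from somewhere; the `Connection` type and the addresses are hypothetical and are not part of the product:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    src_ip: str
    dst_ip: str
    dst_port: int


# Hypothetical addresses of emulated traps.
DECOY_IPS = frozenset({"10.0.5.94", "10.0.5.148", "10.0.5.201"})


def is_attack(conn: Connection, decoys: frozenset = DECOY_IPS) -> bool:
    """Binary verdict: any contact with a decoy is an incident."""
    return conn.dst_ip in decoys


observed = [
    Connection("10.0.5.23", "10.0.5.10", 445),   # real host to real host
    Connection("10.0.5.23", "10.0.5.148", 445),  # real host to decoy
]
alerts = [c for c in observed if is_attack(c)]
print(alerts)
```

Note that the check needs no signatures, rules or baseline: the only "knowledge" is the list of decoy addresses.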

This is where so-called deception tokens enter the battle. Thanks to them, we can include all of the enterprise's existing PCs and servers in our distributed IDS. Tokens are placed on users' real PCs. It is important to understand that a token is not an agent that consumes resources and can cause conflicts. Tokens are passive information elements, a kind of breadcrumb trail for the attacker that leads him into a trap. Examples include mapped network drives, browser bookmarks for fake web admin panels with saved passwords, saved ssh / rdp / winscp sessions, entries for our traps (with comments) in hosts files, passwords stored in memory, credentials of non-existent users, office files that trigger the system when opened, and much more. In this way we place the attacker in a distorted environment, saturated with attack vectors that pose no real threat to us, quite the opposite. And he has no way of telling which information is true and which is false. As a result, we not only detect the attack quickly but also significantly slow its progress.
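To make one of the token types concrete, here is a small sketch that renders hosts-file entries pointing at trap addresses. The host names, addresses and comments are invented for the example, and the article does not describe how TrapX actually generates or distributes its tokens.

```python
def hosts_file_breadcrumbs(traps: dict[str, tuple[str, str]]) -> str:
    """Render hosts-file lines that point at trap addresses.

    `traps` maps a tempting host name to (trap IP, comment).
    """
    lines = [f"{ip}\t{name}\t# {comment}" for name, (ip, comment) in traps.items()]
    return "\n".join(lines)


# Hypothetical traps; the names are chosen to look valuable to an attacker.
TRAPS = {
    "swift-web.corp.example": ("10.0.5.94", "SWIFT Web Access, admin console"),
    "backup-db01.corp.example": ("10.0.5.148", "nightly DB backups"),
}
rendered = hosts_file_breadcrumbs(TRAPS)
print(rendered)
```

The entries are plain text, so nothing runs on the workstation; the token does its job only when an attacker reads the file and follows the address.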

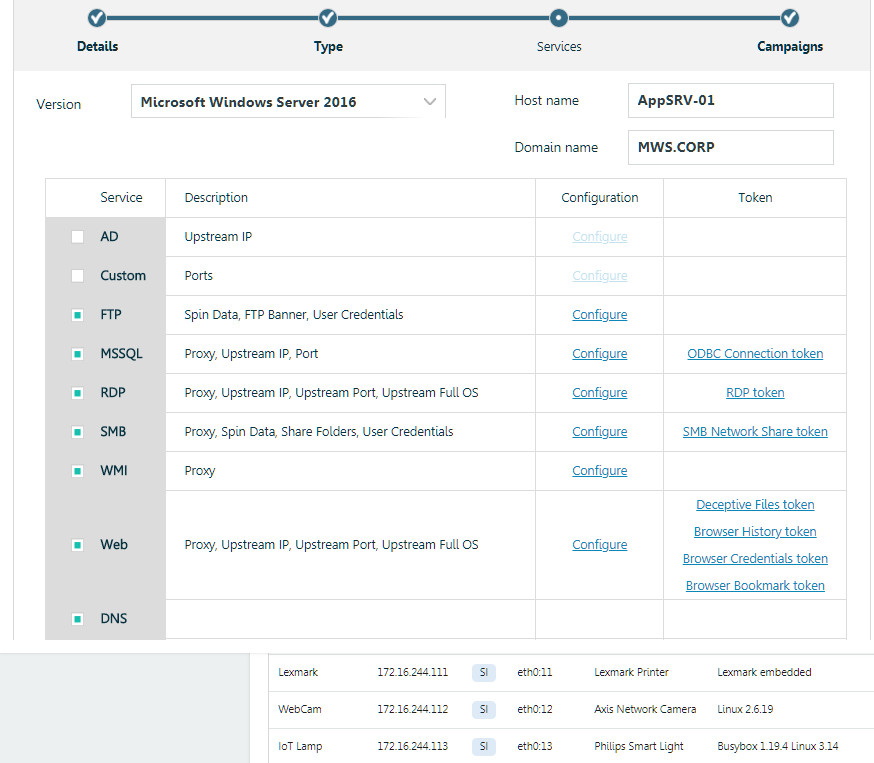

An example of creating a network trap and setting tokens. Friendly interface and manual editing of configs, scripts, etc.

In our environment, we configured and placed a number of such tokens on FOS01 running Windows Server 2012 R2 and on a test PC running Windows 7. RDP is enabled on these machines, and we periodically expose them in the DMZ, where a number of our sensors (emulated traps) are also visible. This gives us a steady stream of incidents in a natural way, so to speak.

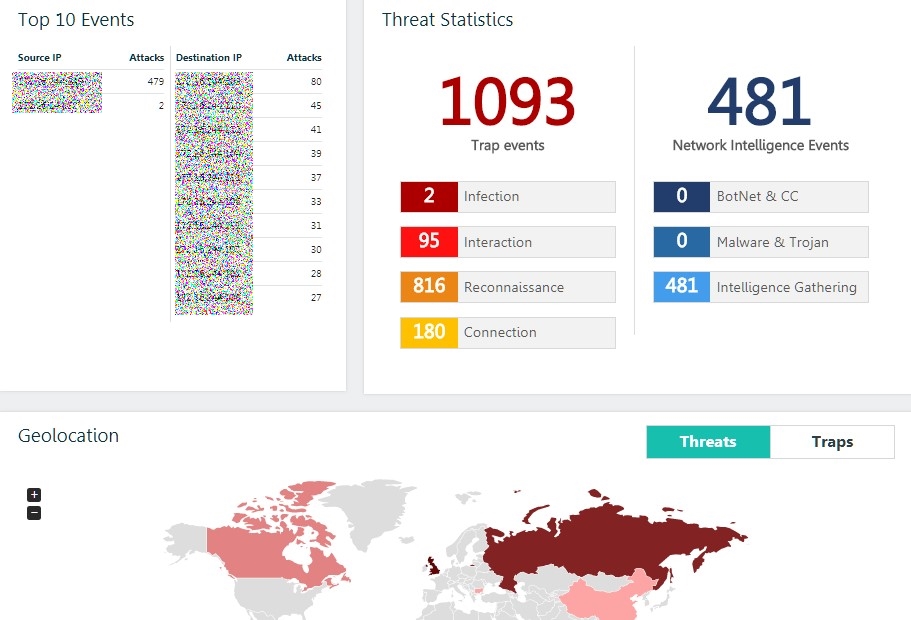

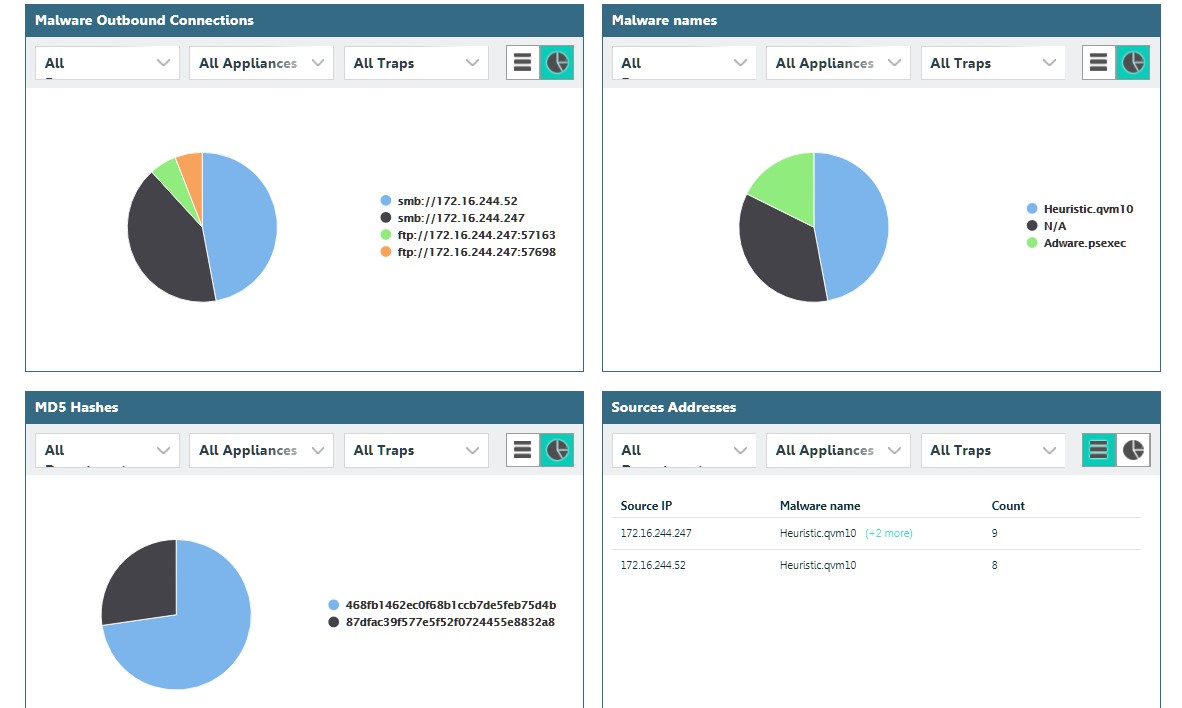

So, brief statistics for the year:

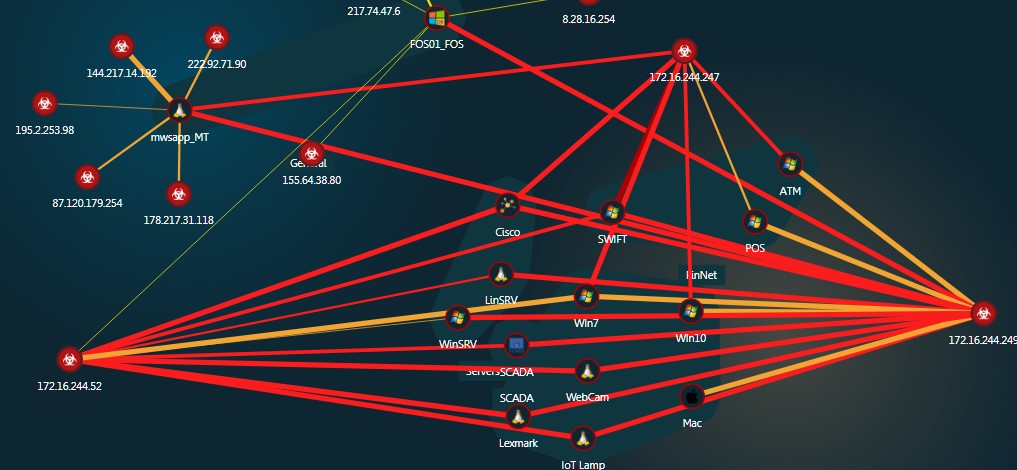

56,208 incidents recorded,

2,912 attacking source hosts detected.

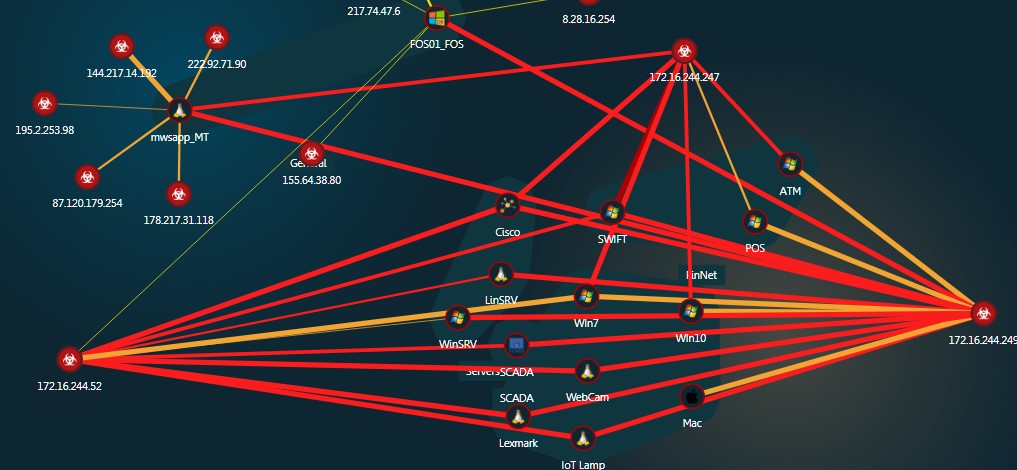

Interactive, clickable attack map

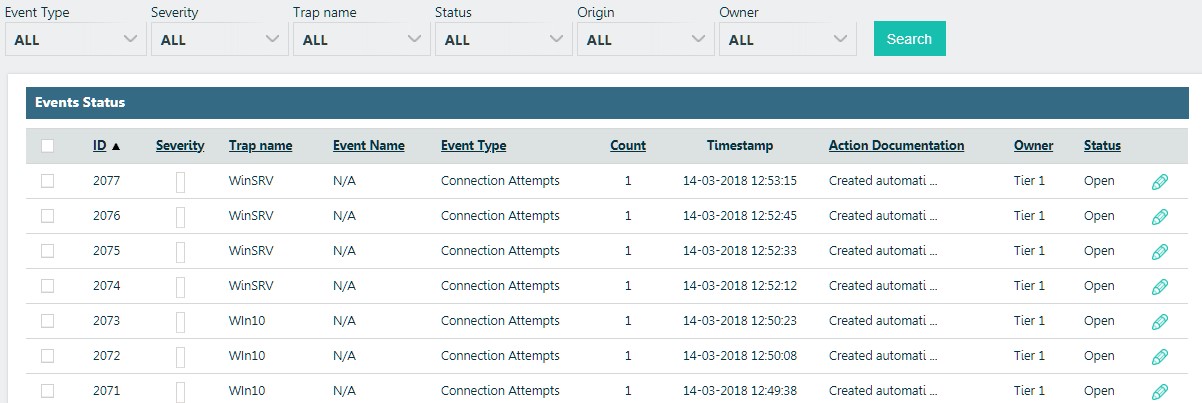

At the same time, the solution does not produce some kind of mega-log or event feed that takes a long time to make sense of. Instead, it classifies events by type itself and lets the information security team focus first on the most dangerous ones: when the attacker tries to establish control sessions (interaction) or when binary payloads appear in the traffic.

All information about events is presented in a readable form that, in my opinion, is easy to understand even for a user with basic knowledge of information security.
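The idea of sorting events by type rather than reading a flat log can be sketched like this. The four levels and their names are assumptions based on the kinds of events mentioned in this article (scans, single connections, control sessions, binary payloads); they are not taken from the product's actual data model.

```python
from enum import IntEnum
from typing import NamedTuple


class Severity(IntEnum):
    SCAN = 1
    CONNECTION = 2
    INTERACTION = 3   # attacker opens a control session with a trap
    PAYLOAD = 4       # binary payload seen in the traffic


class Event(NamedTuple):
    source_ip: str
    severity: Severity


def triage(events: list[Event]) -> list[Event]:
    """Most dangerous events first; ties keep their arrival order."""
    return sorted(events, key=lambda e: e.severity, reverse=True)


incoming = [
    Event("203.0.113.7", Severity.SCAN),
    Event("10.0.5.23", Severity.INTERACTION),
    Event("203.0.113.9", Severity.CONNECTION),
    Event("10.0.5.23", Severity.PAYLOAD),
]
queue = triage(incoming)
print([e.severity.name for e in queue])
```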

Most of the recorded incidents are attempts to scan our hosts or single connections.

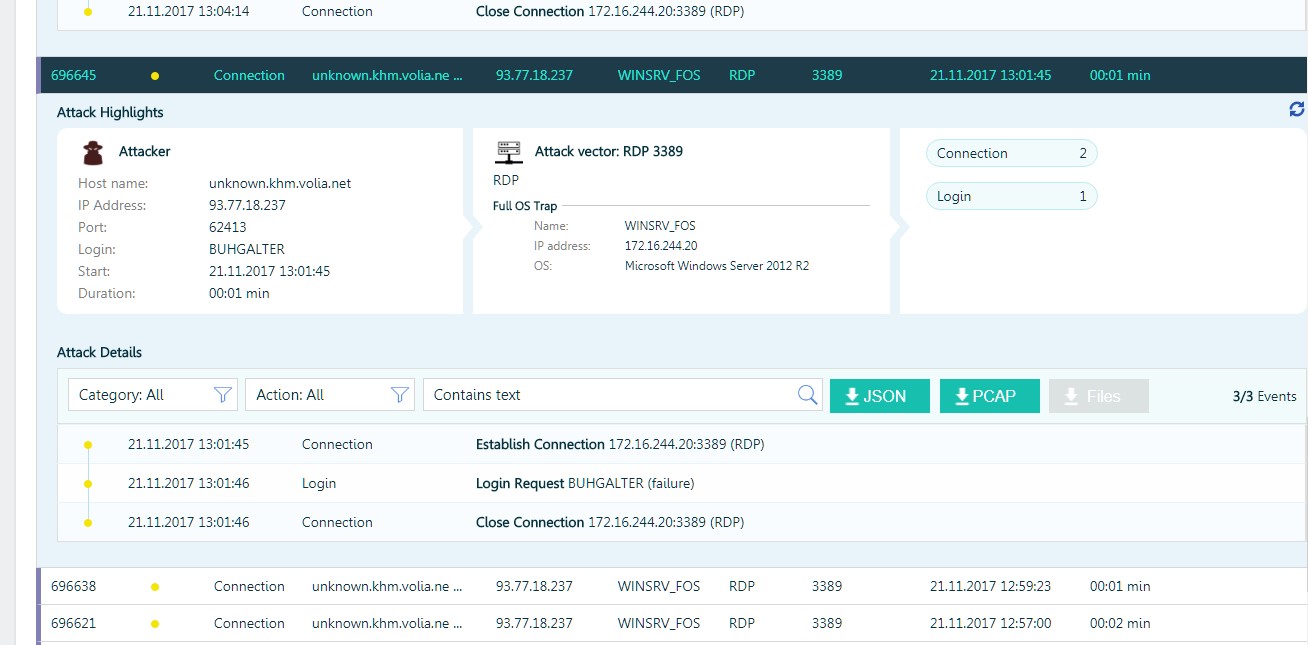

Or attempts to passwords for RDP

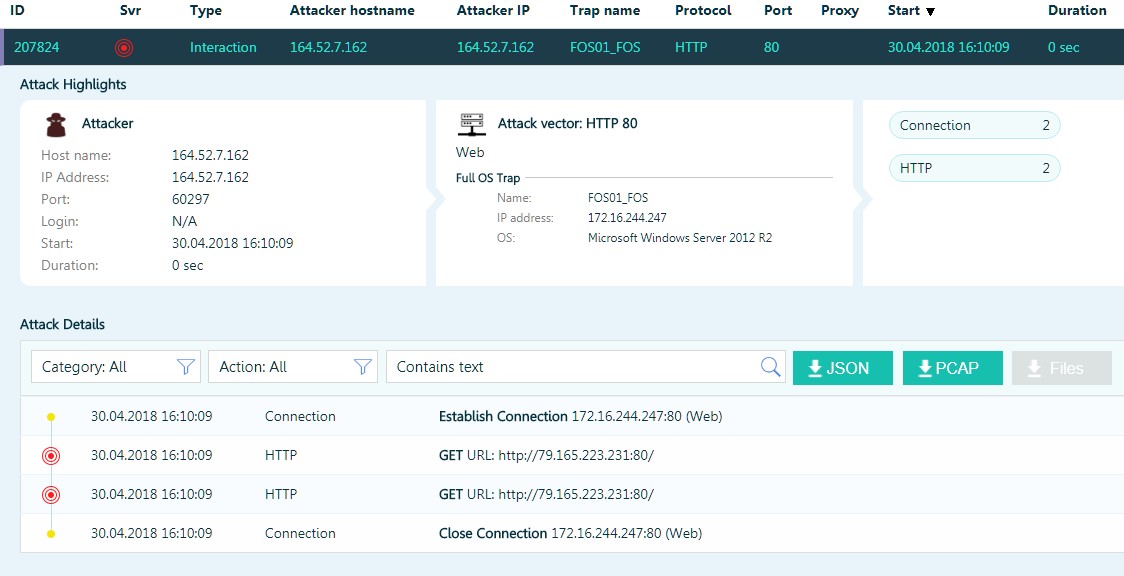

But there were also more interesting cases, especially when the attackers "managed" to pick up a password for the RDP and get access to the local network.

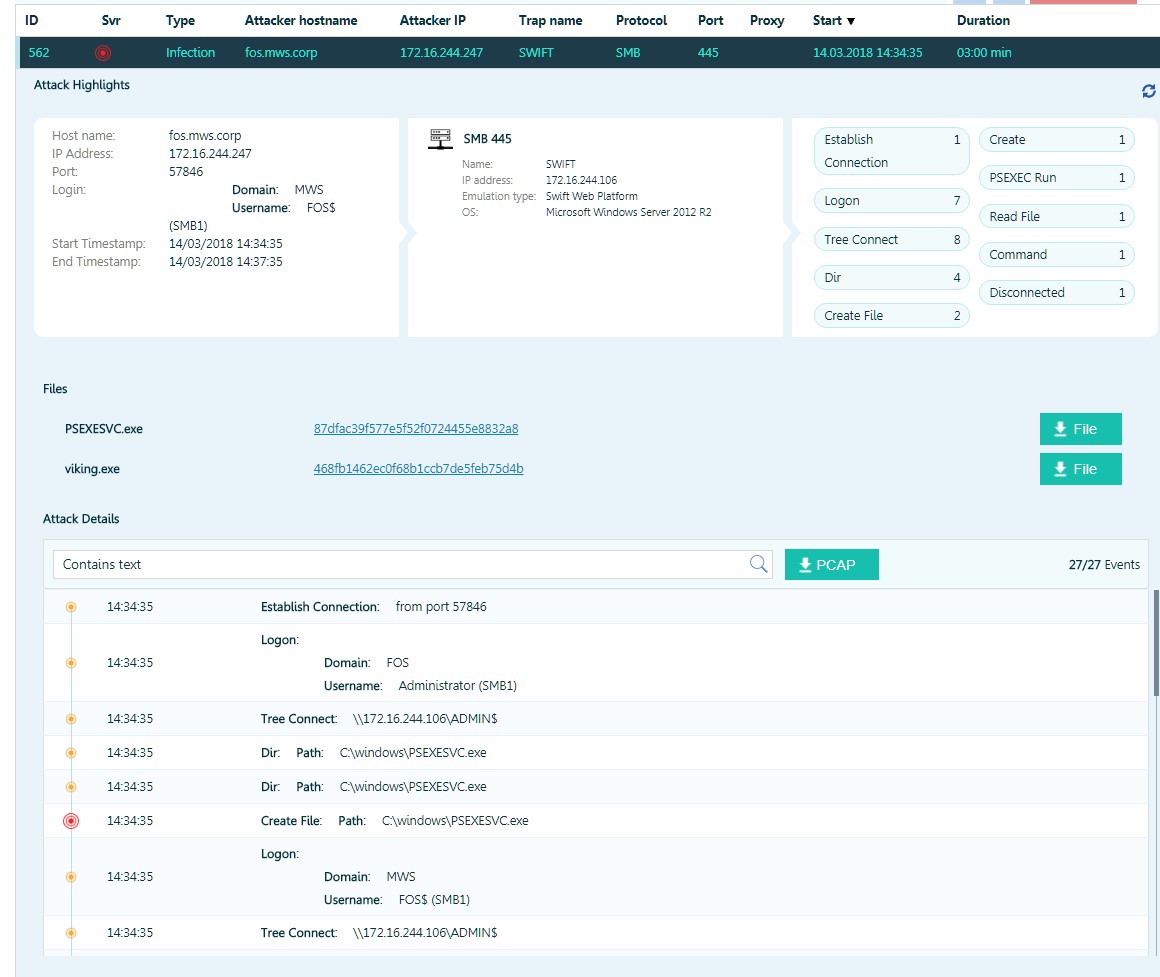

An attacker attempts to execute code with psexec.

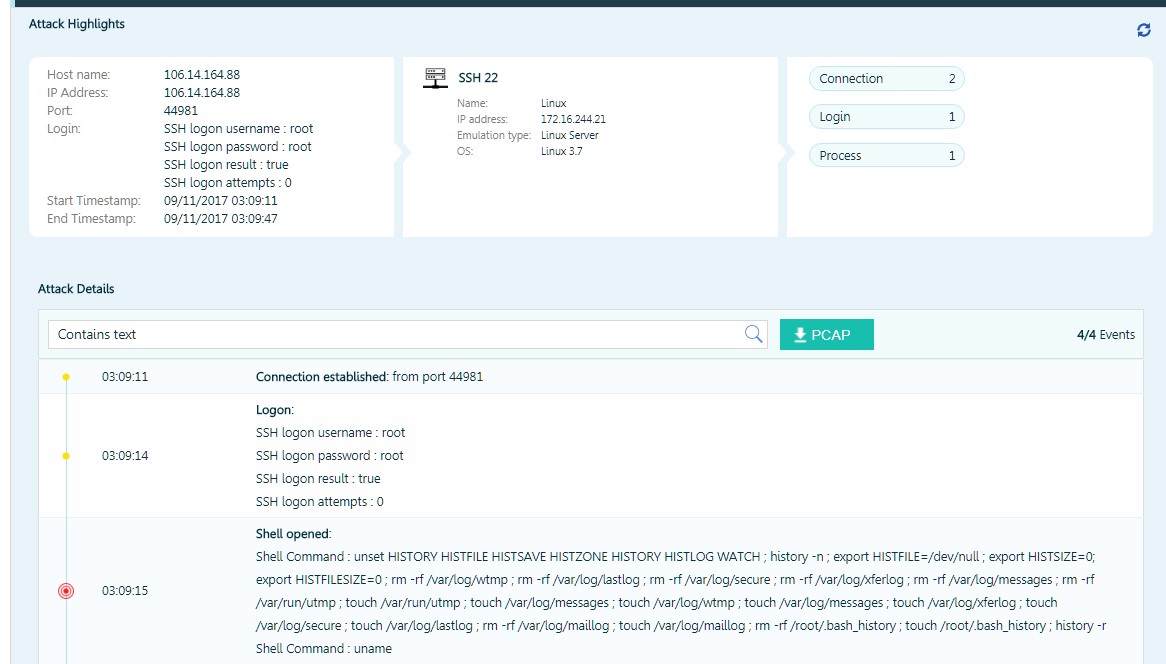

The attacker found a saved session that led him into a trap in the form of a Linux server. Immediately after connecting, with a single set of commands prepared in advance, he tried to destroy all the log files and the corresponding system variables.

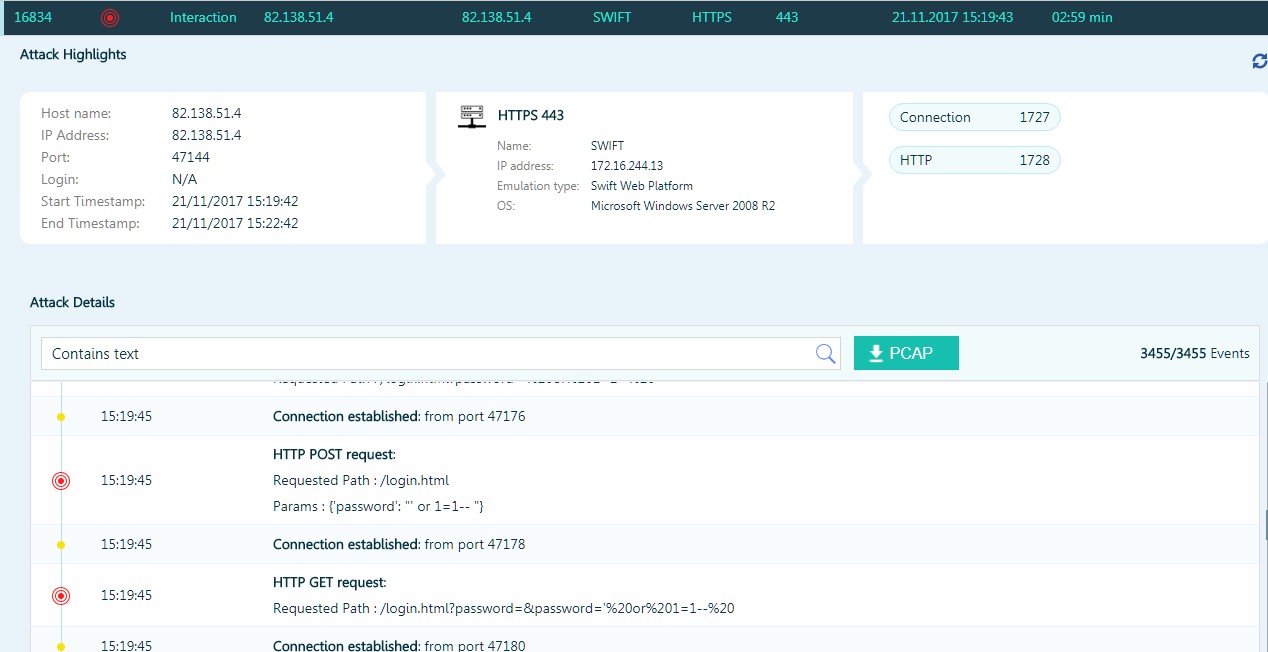

The attacker is trying to conduct a SQL injection on a trap that simulates SWIFT Web Access.

In addition to such "natural" attacks, we ran a number of our own tests. One of the most illustrative is measuring how quickly a network worm is detected on the network. For this we used a tool from GuardiCore called Infection Monkey. It is a network worm that can take over Windows and Linux hosts, but carries no actual payload.

We deployed a local command-and-control server, launched the first instance of the worm on one of the machines, and received the first alert in the TrapX console in less than a minute and a half. A TTD of 90 seconds versus 106 days on average...
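To put the two numbers side by side, here is the arithmetic as a short sketch. The timestamps are hypothetical and simply encode a 90-second gap; the 106-day figure is the average quoted earlier from M-Trends 2017. A lab test with a known worm and an industry-wide average are of course measured under very different conditions, so the ratio is an illustration, not a benchmark.

```python
from datetime import datetime, timedelta


def time_to_detect(intrusion: datetime, detection: datetime) -> timedelta:
    """TTD: time from the moment of intrusion to the moment of detection."""
    return detection - intrusion


# Hypothetical timestamps: first alert 90 seconds after the worm was launched.
launched = datetime(2018, 1, 15, 12, 0, 0)
first_alert = datetime(2018, 1, 15, 12, 1, 30)

lab_ttd = time_to_detect(launched, first_alert)
reference_ttd = timedelta(days=106)   # average quoted in the article

print(lab_ttd.total_seconds())        # 90.0
print(reference_ttd / lab_ttd)        # 101760.0
```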

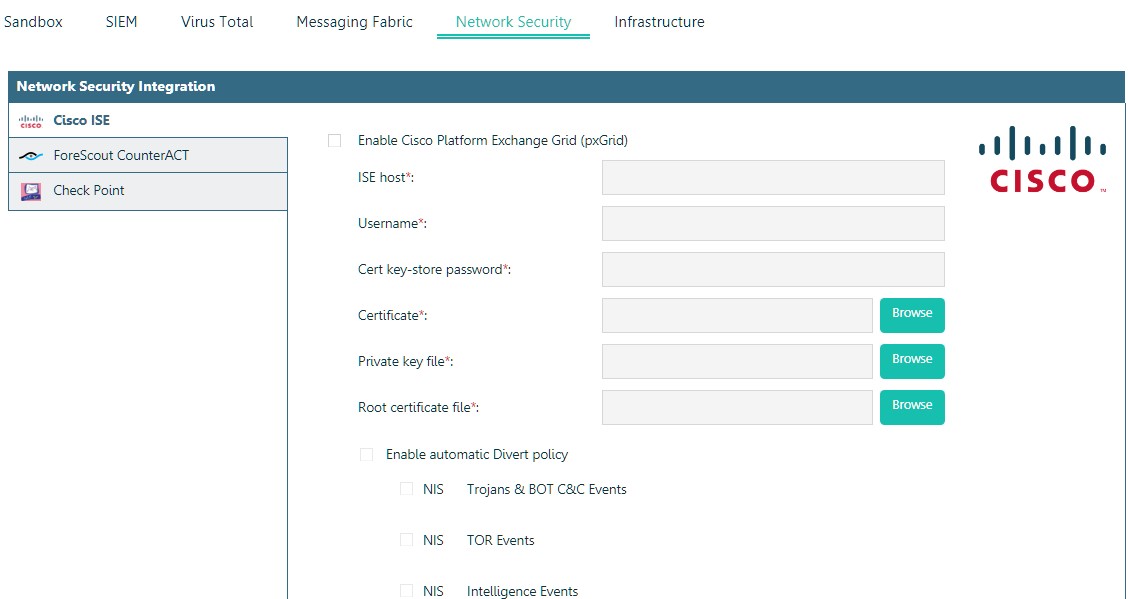

Thanks to the ability to integrate with other classes of solutions, we can move from merely detecting threats quickly to responding to them automatically.

For example, integration with NAC (Network Access Control) systems or with CarbonBlack makes it possible to automatically disconnect compromised PCs from the network.

Integration with sandboxes allows you to automatically transfer for analysis files involved in the attack.
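The flow from detection to automatic response described above can be sketched as a small dispatcher. Everything here is an assumption made for illustration: the `Alert` fields, the rule that only interaction and payload alerts trigger isolation, and the two callbacks, which stand in for whatever NAC and sandbox integrations are actually configured. No real vendor API is shown.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Alert:
    source_ip: str
    kind: str                       # e.g. "scan", "interaction", "payload"
    payload: Optional[bytes] = None


def respond(alert: Alert,
            quarantine: Callable[[str], None],
            submit_to_sandbox: Callable[[bytes], None]) -> None:
    """Route an alert to the integrations; both callbacks are injected stand-ins."""
    if alert.kind in {"interaction", "payload"}:
        quarantine(alert.source_ip)         # e.g. ask the NAC to isolate the host
    if alert.payload is not None:
        submit_to_sandbox(alert.payload)    # hand the captured file over for analysis


# Lists stand in for the real integrations so the sketch can run on its own.
isolated: list[str] = []
submitted: list[bytes] = []
respond(Alert("10.0.5.23", "payload", b"MZ..."), isolated.append, submitted.append)
respond(Alert("10.0.5.7", "scan"), isolated.append, submitted.append)
print(isolated, submitted)
```

Passing the integrations in as callables keeps the response policy separate from any particular product, which also makes the policy easy to test.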

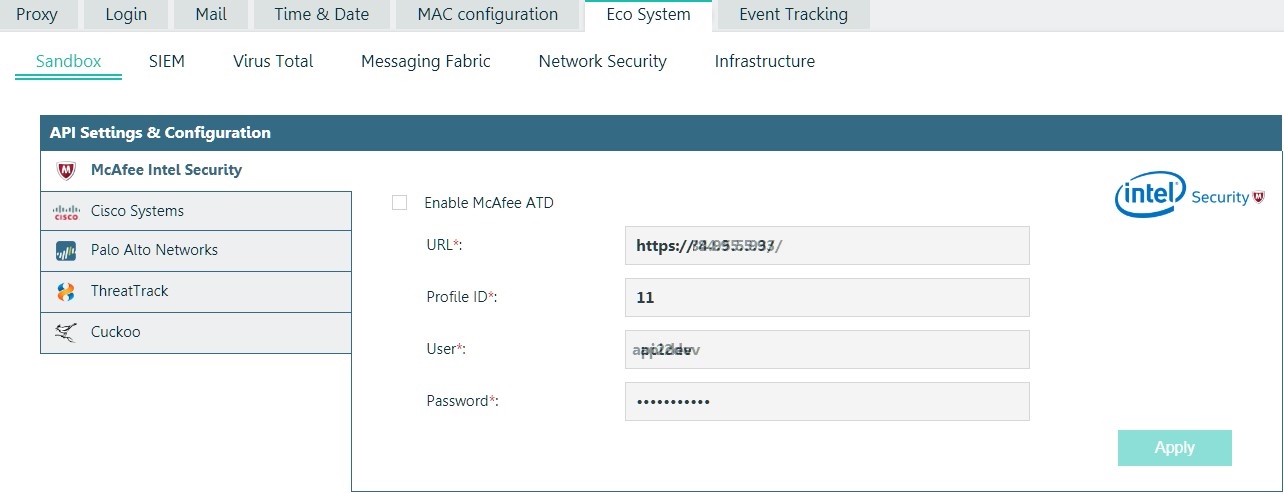

McAfee Integration

Also, the solution has its own built-in event correlation system.

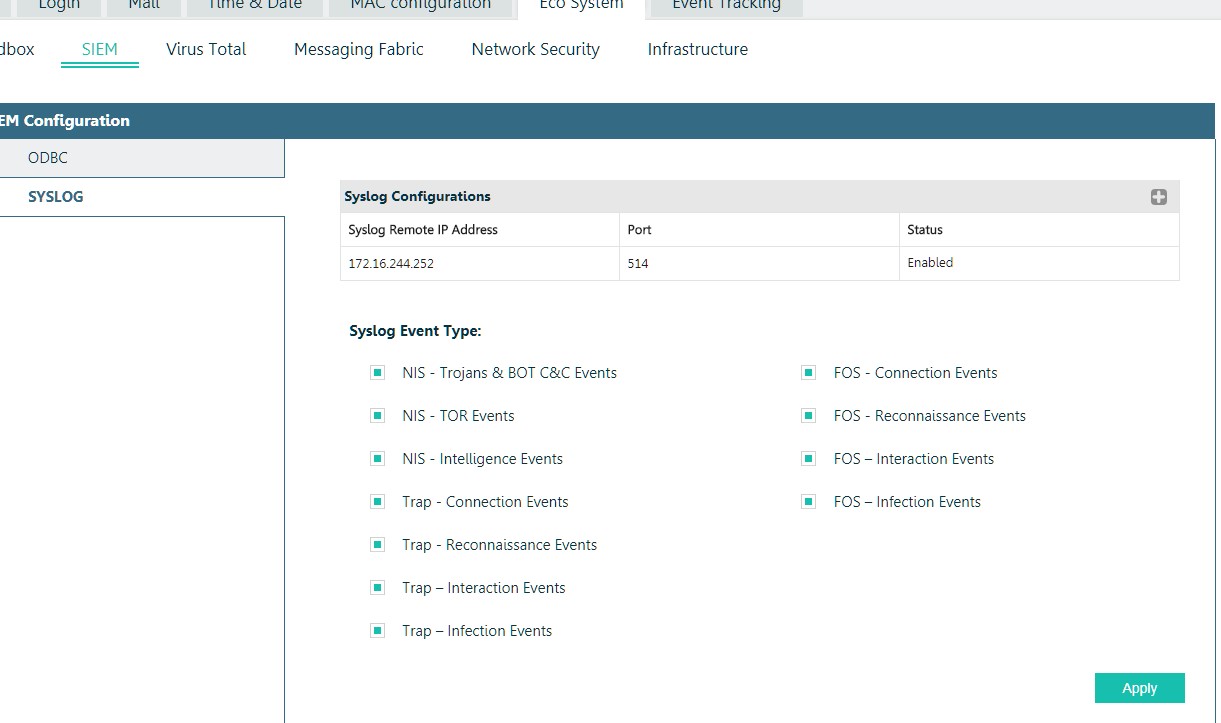

But we were not satisfied with its capabilities, so we integrated it with HP ArcSight.

The built-in ticketing system helps the whole team work on detected threats together.

Since the solution was designed from the start for the needs of government agencies and large enterprises, it naturally implements a role-based access model, integration with AD, a well-developed system of reports and triggers (event alerts), and orchestration for large holding structures or MSSP providers.

In place of a conclusion

With a monitoring system like this, which figuratively speaking covers our backs, a compromised perimeter is only the beginning of the story. Most importantly, there is a real opportunity to deal with information security incidents themselves rather than with the elimination of their consequences.