This article describes the methods we used to collect image data for a music project involving a slideshow. Constraints forced us to use existing image databases rather than images collected from Flickr. Even so, the article covers both approaches so that readers can learn how to extract data with the Flickr API.

Because a large share of the images collected from Flickr turned out to be of low quality, we decided to use images from existing image databases, specifically three databases developed for psychological research.

Recall that initially the following data sets were selected for this project:

- A training dataset of 7,000 emotionally charged images from Flickr for the emotion extraction algorithm.

- A training dataset of works by Bach for the melody completion algorithm.

- A set of tunes that serve as templates for modulating emotions.

Next, the data sets need to be collected. As this article shows, the amount of work involved varies significantly from one data set to another.

Collecting image data

This project required a set of images that evoke seven different emotions: happiness, sadness, fear, anxiety, awe, determination, and anger. We chose Flickr, a popular photo-sharing site, as the image source because of its size and its Creative Commons licensing.

Manually searching Flickr for 7,000 images is a daunting task. Fortunately, Flickr has an API that provides a set of methods for exchanging data with Flickr programmatically. However, before using the API to collect images, it is important to know what to search for in order to find images that evoke the intended emotions. To determine the list of search terms, we ran a task on the Amazon Mechanical Turk platform.

Flickr API

To use the methods offered by the Flickr API, you need a Flickr account and an API key. Make sure you have a Flickr or Yahoo! account, then request a key at www.flickr.com/services/apps/create/apply.

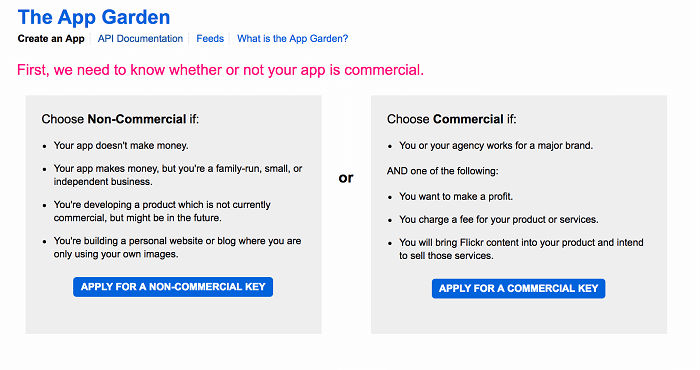

Screenshot of www.flickr.com/services/apps/create/apply

Requesting a non-commercial key is straightforward: you describe the intended use and accept the terms of use. The API key is a security measure that helps prevent misuse of the API, and it is a required parameter of every method the API provides.
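To make the role of the key concrete, here is a minimal sketch that builds a request URL for Flickr's REST endpoint by hand, without any toolkit. `build_search_url` is a hypothetical helper and `YOUR_API_KEY` is a placeholder; `flickr.photos.search`, `api_key`, `tags`, `sort`, `per_page`, `format`, and `nojsoncallback` are parameters of the Flickr API itself.

```python
from urllib.parse import urlencode

# Flickr's REST endpoint; every call must carry the api_key parameter.
REST_ENDPOINT = "https://api.flickr.com/services/rest/"


def build_search_url(api_key, tags, per_page=30):
    """Build a flickr.photos.search request URL (hypothetical helper)."""
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,      # required on every call
        "tags": tags,
        "sort": "relevance",
        "per_page": per_page,
        "format": "json",
        "nojsoncallback": 1,     # return plain JSON instead of a JSONP wrapper
    }
    return REST_ENDPOINT + "?" + urlencode(params)


print(build_search_url("YOUR_API_KEY", "ocean"))
```

A toolkit such as the one described next adds the key to each call for you, so you rarely need to build these URLs yourself.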

After receiving the API key, you can download and install an API kit for your programming language from The App Garden. This project uses Beej's Python Flickr API, which works with Python 3. Follow the Flickr API installation guide to set it up.

The code used to download the images is shown below. It relies mainly on the toolkit's walk function, which searches for images by tag. Tags are stored in .txt files, one per line. For each image found, the URL is built from the template farm{farm-id}.staticflickr.com/{server-id}/{id}_{secret}.jpg, where the placeholders in curly braces are replaced with the image's attributes. The top 30 images for each tag (sorted by relevance) are then downloaded and organized into folders by emotion and search term.

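```python
import flickrapi
import urllib.request
import os

project_path = '/path/to/your/project'  # root folder for the downloaded images
photos_per_tag = 30                      # top-N results kept for each tag
# One text file of search tags (one tag per line) for each emotion
filenames = ['Awe.txt', 'Happiness.txt', 'Fear.txt', 'Determination.txt',
             'Anxiety.txt', 'Tranquility.txt', 'Sadness.txt']

def download_files(flickr, t, category, num_photos):
    # The body of this function is not preserved in this copy of the article;
    # the full script is in the project's GitHub repository.
    ...
```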

To use this code, clone the repository from GitHub and follow the instructions in its README file. Replace the api_key and api_secret parameters with the keys you obtained from Flickr. As mentioned above, the script only works with Python 3.
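The download steps described above can be sketched as follows. This is a minimal illustration, not the repository's script: the helper names (`read_tags`, `photo_url`, `download_top_photos`), the `<root>/<emotion>/<tag>/` folder layout, the `https://` prefix on the URL template, and the injectable `fetch` parameter are all our own assumptions. Each photo is assumed to be an object whose `get` method returns its `farm`, `server`, `id`, and `secret` attributes, which is how the ElementTree elements yielded by flickrapi's `walk` behave.

```python
import itertools
import os
import urllib.request

# URL template from the text, with an https:// scheme added (assumption).
URL_TEMPLATE = "https://farm{farm}.staticflickr.com/{server}/{id}_{secret}.jpg"


def read_tags(tag_file):
    """Return the search tags listed one per line, skipping blank lines."""
    with open(tag_file, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def photo_url(photo):
    """Fill the URL template from a photo's farm, server, id and secret attributes."""
    return URL_TEMPLATE.format(farm=photo.get("farm"), server=photo.get("server"),
                               id=photo.get("id"), secret=photo.get("secret"))


def download_top_photos(photos, out_root, emotion, tag, num_photos=30,
                        fetch=urllib.request.urlretrieve):
    """Save the first num_photos results into out_root/emotion/tag/ (hypothetical layout)."""
    folder = os.path.join(out_root, emotion, tag)
    os.makedirs(folder, exist_ok=True)
    saved = []
    # Results are requested sorted by relevance, so the first N are the top N.
    for photo in itertools.islice(photos, num_photos):
        path = os.path.join(folder, photo.get("id") + ".jpg")
        fetch(photo_url(photo), path)  # urlretrieve(url, filename) by default
        saved.append(path)
    return saved
```

With flickrapi installed, a call might look like `download_top_photos(flickr.walk(tags=tag, sort='relevance'), project_path, 'Awe', tag)` for each tag returned by `read_tags('Awe.txt')`, where `flickr` is a `flickrapi.FlickrAPI(api_key, api_secret)` instance. Passing `fetch` in as a parameter keeps the loop testable without network access.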

After the program runs, the folder looks like this:

Data set of Flickr search results.

In total, about 8,800 images were collected. We downloaded more images than we needed because we planned to discard low-quality images that could not be used. The next step was to review these images.
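To confirm how many images a run produced, a small sketch can count the .jpg files under each emotion folder. It assumes, hypothetically, that the download root contains one subfolder per emotion (possibly with tag subfolders inside); `count_images` is our own helper name, and the extension should be adjusted if your files differ.

```python
import os
from collections import Counter


def count_images(root, extension=".jpg"):
    """Count files ending in extension under each top-level folder of root."""
    counts = Counter()
    for emotion in sorted(os.listdir(root)):
        emotion_dir = os.path.join(root, emotion)
        if not os.path.isdir(emotion_dir):
            continue
        # Walk nested tag subfolders too, so deeper layouts are counted.
        for _dirpath, _dirnames, files in os.walk(emotion_dir):
            counts[emotion] += sum(1 for name in files
                                   if name.lower().endswith(extension))
    return counts
```

For example, `sum(count_images(project_path).values())` gives the overall total, which is useful for checking that a run collected more images than the target.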

Image selection

The quality of the collected images varied. Some search terms, such as flowers (shown in the figure), produced usable, high-quality images. However, less specific search terms often produced completely unsuitable images. For example, the wonder tag (used for the emotion of awe) returned an image of a Wonder Woman cake, and the ambitious tag (used for the emotion of determination) returned an image of cabbage from Ambitious Farms.

Unsuitable images.

Anyone planning to use the Flickr API to search for images should use concrete nouns as search terms; they return much better images than adjectives or abstract nouns. For example, when searching for images that evoke awe, use search terms such as ocean or Grand Canyon rather than awe or wonder.

After reviewing the images, the team concluded that more than 40 percent of them were unusable. As a result, we revised our approach to selecting the data set. After discussing several options, such as restricting the collection to images of people expressing the relevant emotions, we decided to use images from existing databases commonly used in psychological studies: the Geneva Affective PicturE Database (GAPED), the Open Affective Standardized Image Set (OASIS), and Image Stimuli for Emotion Elicitation (ISEE).

Although images in existing databases are less diverse than a newly collected data set could be, we chose the existing databases because of their higher image quality and because they come with ratings. Having these ratings is a major advantage: it eliminates the need for annotation via Amazon Mechanical Turk, which significantly reduces cost.

Data source

The data collection process for the new data set was much simpler. In particular, no further work with Amazon Mechanical Turk or the Flickr API was required. The GAPED and OASIS data sets (including their ratings) can be downloaded online. We obtained the ISEE data set by emailing its author to request access. If a data set's download instructions are unclear, a Google search will usually turn up the authors' contact details so that you can request access directly.

Two data sets were created for this project. The first used the Flickr API to download images by emotion tag; the second was a compilation of existing databases used in psychological research. Each has its pros and cons, but the second was chosen for the project because of its image quality, its existing ratings, and its lower cost.

The method used to collect data directly depends on what data is required. However, the processes and methods described in this article are likely to be useful for many projects.

Now that the datasets have been created, the project is ready for the next steps — exploration and preprocessing.

Exploring the image data

Because a large share of the images collected from Flickr was of low quality, we used images from three existing databases developed for psychological research. Each image comes with ratings of pleasantness (or unpleasantness) and intensity collected from multiple raters. The 1,986 images from these databases were sorted into four content categories, which covered 87% of the images: animals (34%), people (28%), scenes (13%), and objects (12%). The remaining 13% were classified as "miscellaneous."

Animals

Sample images from the Animals category.

About one-third of the images contain animals, either alone or together with other animals, as shown above. In these examples, the pleasantness rating increases from left to right. Unpleasant images, such as hyenas eating their prey or cockroaches, can evoke emotions such as fear, sadness, and disgust.

In contrast, the images on the right, a sleeping cat and a smiling dog, can evoke affection and happiness.

People

Sample images from the People category.

The “People” category includes images of individuals and of groups, and images of groups often contain more contextual information. For example, the image of a marching band appears to have been taken in a stadium full of fans, suggesting that it captures a performance at a sporting event. The image of an angry woman, by contrast, has no context: the viewer cannot find out or guess the reason for her anger. However, not all images of groups of people provide additional context.

For example, the image of men lying in a row on the floor, with visible wounds and bloodied clothes, gives no indication of what is happening. Even so, despite this lack of information, images of people evoke a variety of emotional reactions.

Scenes

The “Scenes” category covers a wide variety of scenes, from man-made buildings and objects to nature and even outer space.

Sample images from the Scenes category

Objects

Sample images from the Objects category

The “Objects” category contains images focused on a single object, as shown in the examples above. These images lack situational context, especially compared with the other categories in the image set.

Miscellaneous

Sample images from the Miscellaneous category

Finally, a subset of images could not be assigned to any of the four categories. As the examples show, these were often scenes containing several objects but lacking the context typical of the Scenes category. Such images generally had neutral ratings: they were neither pleasant nor unpleasant.

Emotion categories for image database

To define the emotion categories for the image database, we relied on the normative subjective valence (pleasantness) ratings that accompany each image in the Geneva Affective PicturE Database (GAPED) and the Open Affective Standardized Image Set (OASIS). Because GAPED uses a 0–100 rating scale and OASIS uses a 1–7 Likert scale, we applied a linear transformation that placed all ratings on a continuous 0–100 scale. We then investigated two candidate rules for assigning emotion categories.
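The article does not state the exact transformation. A natural choice, assumed here, is min-max rescaling, y = 100 × (x − min) / (max − min), which leaves GAPED's 0–100 ratings unchanged and maps OASIS's 1–7 ratings via y = 100 × (x − 1) / 6. The function name `to_0_100` is hypothetical.

```python
def to_0_100(rating, scale_min, scale_max):
    """Linearly rescale a rating from [scale_min, scale_max] to [0, 100]."""
    return 100.0 * (rating - scale_min) / (scale_max - scale_min)


# GAPED already uses 0-100, so rescaling leaves its ratings unchanged.
gaped_rating = to_0_100(72.5, 0, 100)   # 72.5
# OASIS uses a 1-7 scale: 1 -> 0, 4 -> 50, 7 -> 100.
oasis_rating = to_0_100(4, 1, 7)        # 50.0
```

Because the mapping is linear, it preserves the ordering and relative spacing of ratings within each database, so thresholds applied afterwards mean the same thing for both sources.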

The first, intuitive approach was to sort the images by pleasantness and split the rating scale into three equal parts: ratings of 0–33.33 form the negative category, 33.33–66.67 the neutral category, and 66.67–100 the positive category. The following Python code was used to implement this rule:

```python
import os
import shutil
import csv

def organizeFolderGAPED(original, pos, neg, neut):
    # The function body is not preserved in this copy of the article.
    ...
```
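The original function body is not shown in the article, so the following is a hedged sketch of how the equal-thirds rule could be applied to a folder of images. It assumes, hypothetically, a CSV file with `filename` and `valence` columns where valence has already been rescaled to 0–100; the actual GAPED and OASIS rating files use their own layouts. The helper names and the handling of ratings exactly at 33.33 or 66.67 are also our own choices.

```python
import csv
import os
import shutil


def category_thirds(rating):
    """Equal-thirds rule on 0-100: <33.33 negative, <66.67 neutral, else positive."""
    if rating < 33.33:
        return "negative"
    if rating < 66.67:
        return "neutral"
    return "positive"


def organize_by_valence(ratings_csv, original, pos, neg, neut, categorize=category_thirds):
    """Copy each image listed in ratings_csv into the folder for its category.

    Assumes hypothetical 'filename' and 'valence' columns, valence already on 0-100.
    """
    targets = {"positive": pos, "negative": neg, "neutral": neut}
    counts = {label: 0 for label in targets}
    with open(ratings_csv, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            label = categorize(float(row["valence"]))
            os.makedirs(targets[label], exist_ok=True)
            shutil.copy(os.path.join(original, row["filename"]), targets[label])
            counts[label] += 1
    return counts
```

The returned counts make it easy to compare how many images each rule places in each category.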

This approach divided the database into 417 negative, 774 neutral, and 442 positive images. However, with this equal-thirds split, unpleasant images whose ratings did not fall below the threshold were classified as neutral; for example, images of a dead body, a crying child, and cemeteries ended up in the neutral category. Although these images were less unpleasant than others in the negative category, it was doubtful that they were neutral.

We therefore applied an adjusted categorization rule based on the normal distribution of the data, which also aimed to improve how images are distributed across the emotional categories. Ratings of 0–39 were classified as negative, 40–60 as neutral, and 61–100 as positive. The following Python code was used to implement this rule:

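```python
import os
import shutil
import csv

def organizeFolderGAPED(original, pos, neg, neut):
    # Applies the 0-39 / 40-60 / 61-100 rule; the function body is not
    # preserved in this copy of the article.
    ...
```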
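The adjusted rule can be sketched as a small classification function. The article gives integer ranges (0–39, 40–60, 61–100); for the fractional ratings produced by rescaling, this sketch assumes that anything below 40 is negative and anything above 60 is positive. The function names are hypothetical.

```python
from collections import Counter


def category_40_60(rating):
    """Adjusted rule on 0-100: 0-39 negative, 40-60 neutral, 61-100 positive.

    For fractional ratings, values below 40 count as negative and values
    above 60 as positive (an assumption; the article gives integer ranges).
    """
    if rating < 40:
        return "negative"
    if rating <= 60:
        return "neutral"
    return "positive"


def category_counts(ratings, categorize=category_40_60):
    """Tally how many ratings fall into each emotional category."""
    return Counter(categorize(r) for r in ratings)


counts = category_counts([12.0, 45.5, 60.0, 60.5, 88.0])
```

Because the rule is passed in as a parameter, `category_counts` can also tally the results of a different rule for side-by-side comparison.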

With this rule, the 567 positive images were rated as more pleasant than the 502 neutral images, and the 564 negative images were rated as less pleasant than the neutral ones. The rule thus preserved the intended meaning of the emotional categories while distributing the images across them more evenly. The figure below illustrates the pleasantness ratings associated with each category. The differing whisker lengths in the chart show which emotional category (positive or negative) has a greater spread of ratings than the neutral category.

Average pleasantness ratings for each of the emotional categories
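Summary statistics of the kind shown in this chart can be computed with a short sketch. `summarize_by_category` is a hypothetical helper that reports the mean, standard deviation, minimum, and maximum of the ratings in each category; the categorization rule is passed in as a function, so either rule described above can be used. This is one way to compare spreads, not necessarily how the chart was produced.

```python
import statistics


def summarize_by_category(ratings, categorize):
    """Return {category: (mean, stdev, min, max)} for ratings on a 0-100 scale.

    categorize maps a single rating to a category label.
    """
    groups = {}
    for r in ratings:
        groups.setdefault(categorize(r), []).append(r)
    summary = {}
    for label, values in groups.items():
        # stdev needs at least two values; report 0.0 for singleton groups.
        spread = statistics.stdev(values) if len(values) > 1 else 0.0
        summary[label] = (statistics.mean(values), spread, min(values), max(values))
    return summary
```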

We concluded that this categorization rule is sufficient for classifying images by emotion. The types of images in each emotional category are shown below. Note that every content category (animals, people, scenes, objects, miscellaneous) is represented in each emotional category.

Emotional Category 1: Negative

Emotional Category 2: Neutral

Emotional Category 3: Positive

In summary, we divided the image database into negative, neutral, and positive emotional categories using normative valence ratings on a 0–100 scale: 0–39 negative, 40–60 neutral, and 61–100 positive. The images are appropriately distributed across these emotional categories, and each category includes images of animals, people, scenes, objects, and miscellaneous content.