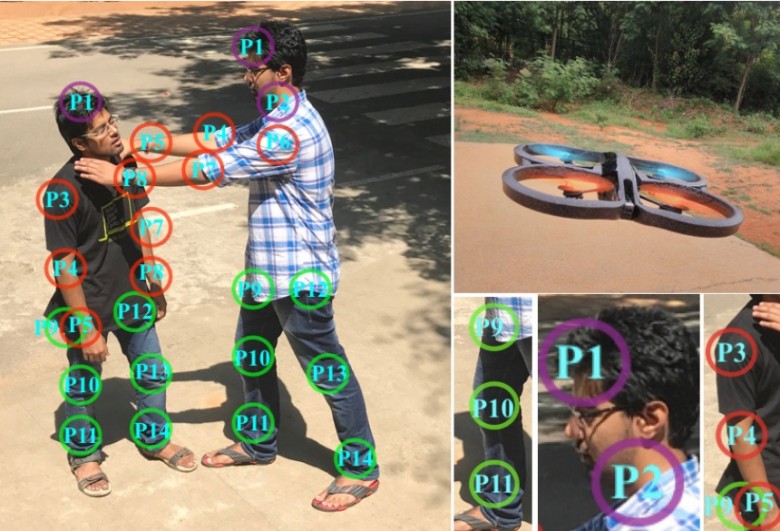

The illustration on the left shows the 14 key points on the human body recognized by the machine vision system: head, neck, shoulders, elbows, wrists, hips, knees, and ankles. Top right: a Parrot AR drone running the violence detection system. Bottom right: individual images from the training dataset, annotated with key points.

Nowadays, UAVs are increasingly used by law enforcement and intelligence agencies, usually for espionage, reconnaissance, border control, and similar tasks. Police rarely use drones to patrol city streets, but the potential is huge: patrol drones can substantially cut staff costs, cover large areas, and see well in the dark.

With rising crime and the threat of terrorism in many countries, authorities are interested in tightening control over the civilian population.

UAVs with automatic violence recognition are a new generation of systems that open the door to even more autonomous and intelligent responses to street riots and hooliganism.

Previously, UAVs were operated mainly in "manual" mode, under the control of an operator who simultaneously watches the video feed. This mode severely limits mass deployment of UAVs, since each UAV needs its own operator.

Machine vision systems remove this restriction. They make it possible to send hundreds or thousands of drones along predefined routes, while the operator attends only to alarms raised when specific signs are recognized. Such automatic patrol systems have already been developed for detecting fires, pipeline damage, and the like. In 2010, a system was developed for law enforcement agencies to identify "abandoned objects", that is, bags and packages left in public places. Automatic violence detection is the next logical step, allowing UAVs to patrol crowds and public places.
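The alarm-driven workflow described above can be sketched as a shared queue. Each drone's on-board detector reports a label and a confidence score, and only reports above a threshold reach the operator, most confident first. This is a minimal sketch under stated assumptions: the class names, field names, and the 0.8 threshold are hypothetical, and the article does not describe how real systems route alarms.

```python
import heapq
from dataclasses import dataclass, field


# Hypothetical alert record; names are illustrative assumptions.
@dataclass(order=True)
class Alert:
    priority: float  # negated score, so heapq (a min-heap) pops the highest score first
    drone_id: str = field(compare=False)
    label: str = field(compare=False)


class OperatorQueue:
    """Collects detections from many drones and surfaces only those above a threshold."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self._heap: list[Alert] = []

    def report(self, drone_id: str, label: str, score: float) -> bool:
        """Called for each detection; returns True if an alarm was raised."""
        if score < self.threshold:
            return False  # below threshold: no operator attention needed
        heapq.heappush(self._heap, Alert(-score, drone_id, label))
        return True

    def next_alarm(self):
        """Return (drone_id, label, score) for the most confident pending alarm, or None."""
        if not self._heap:
            return None
        alert = heapq.heappop(self._heap)
        return alert.drone_id, alert.label, -alert.priority
```

For example, a report scored 0.65 is dropped silently. Reports scored 0.93 and 0.81 are queued, and the 0.93 one is handed to the operator first. A single operator can therefore oversee many drones, because routine frames never reach them.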

In 2009, a paper was published describing a computer vision system that automatically detects crimes in public places using motion analysis. With an accuracy of about 85%, it recognizes actions such as snatching a purse from a passer-by or kidnapping a child.

Such systems are quite successful at identifying specific criminal acts. Despite their impressive accuracy (above 90% in some cases), their scope is very narrow.

In 2014, researchers proposed the first system for automatic violence recognition from UAVs in public places. It was the first of its kind to use a deformable parts model to estimate a person's pose and then flag suspicious individuals by their poses. This is an extremely difficult machine vision task, because drone photos and videos can suffer from changes in lighting, shadows, low detail, and blur. In addition, people appear at different places in the frame and in different positions. The system detected violence with an accuracy of about 76%, well below that of the narrowly specialized systems described above.
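Deformable part models score a candidate pose as the sum of per-part appearance scores minus a deformation cost for each part that strays from its expected offset relative to its parent part. The sketch below shows that scoring, assuming a simple chain of parts (for example head, neck, torso), discrete candidate locations, and a quadratic deformation penalty, maximized by dynamic programming. All scores, offsets, and the weight are made up. This is not the 2014 system's implementation: practical DPMs use learned appearance filters, tree-structured part models, and distance transforms to make the search fast.

```python
from typing import Sequence

Point = tuple[int, int]


def deformation_cost(parent: Point, child: Point, anchor: Point, weight: float) -> float:
    """Quadratic penalty for the child deviating from its expected offset (anchor) from the parent."""
    dx = (child[0] - parent[0]) - anchor[0]
    dy = (child[1] - parent[1]) - anchor[1]
    return weight * (dx * dx + dy * dy)


def best_chain_pose(
    candidates: Sequence[Sequence[Point]],   # candidate locations for each part
    appearance: Sequence[Sequence[float]],   # appearance score for each candidate
    anchors: Sequence[Point],                # expected offset of part i from part i-1 (anchors[0] unused)
    weight: float = 0.1,
) -> tuple[list[Point], float]:
    """Maximize sum(appearance) - sum(deformation) over a chain of parts by dynamic programming."""
    n = len(candidates)
    score = [list(appearance[0])]
    back: list[list[int]] = [[-1] * len(candidates[0])]
    for i in range(1, n):
        row, ptr = [], []
        for j, loc in enumerate(candidates[i]):
            # Best predecessor location for part i placed at `loc`.
            options = [
                score[i - 1][k] - deformation_cost(prev, loc, anchors[i], weight)
                for k, prev in enumerate(candidates[i - 1])
            ]
            k_best = max(range(len(options)), key=options.__getitem__)
            row.append(appearance[i][j] + options[k_best])
            ptr.append(k_best)
        score.append(row)
        back.append(ptr)
    # Backtrack from the best location of the last part.
    j = max(range(len(score[-1])), key=score[-1].__getitem__)
    total = score[-1][j]
    path = [j]
    for i in range(n - 1, 0, -1):
        j = back[i][j]
        path.append(j)
    path.reverse()
    return [candidates[i][path[i]] for i in range(n)], total
```

Take a head candidate at (40, 0) with a higher appearance score than the one at (10, 0). It still loses if no neck candidate sits near its expected offset, because the deformation term outweighs the small appearance advantage. That trade-off is what makes part-based models tolerant of cluttered, blurry frames.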

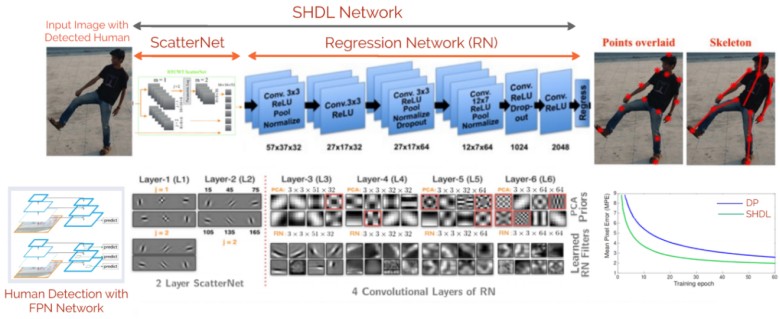

A new system developed by scientists from the University of Cambridge (UK), the National Institute of Technology (India), and the Indian Institute of Science in Bangalore performs improved autonomous, real-time violence recognition. It combines a Feature Pyramid Network (FPN), a ScatterNet Hybrid Deep Learning (SHDL) network, and a support vector machine (SVM) that classifies the orientations between the limbs of the estimated pose. The recognition pipeline is shown in detail in the illustration.
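The article does not spell out how the "orientation between the limbs" is computed. One plausible reading, assumed here, is the angle at each joint between the two limb segments that meet there, computed from the 14 estimated key points. The key point order, the names, and the eight joint triples below are hypothetical choices for illustration, not the authors' exact feature set.

```python
import math

# The 14 key points from the first illustration; names and order are assumptions.
KEYPOINTS = [
    "head", "neck",
    "r_shoulder", "r_elbow", "r_wrist",
    "l_shoulder", "l_elbow", "l_wrist",
    "r_hip", "r_knee", "r_ankle",
    "l_hip", "l_knee", "l_ankle",
]

# Hypothetical joint angles: (end point A, vertex joint, end point B).
JOINT_TRIPLES = [
    ("r_shoulder", "r_elbow", "r_wrist"),  # right elbow bend
    ("l_shoulder", "l_elbow", "l_wrist"),  # left elbow bend
    ("neck", "r_shoulder", "r_elbow"),     # right arm raise
    ("neck", "l_shoulder", "l_elbow"),     # left arm raise
    ("r_hip", "r_knee", "r_ankle"),        # right knee bend
    ("l_hip", "l_knee", "l_ankle"),        # left knee bend
    ("neck", "r_hip", "r_knee"),           # right leg raise
    ("neck", "l_hip", "l_knee"),           # left leg raise
]


def joint_angle(a, vertex, b) -> float:
    """Angle in degrees at `vertex` between the segments vertex->a and vertex->b."""
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate case: coincident key points
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp against rounding


def limb_features(points):
    """points: 14 (x, y) tuples in KEYPOINTS order -> list of joint angles in degrees."""
    if len(points) != len(KEYPOINTS):
        raise ValueError(f"expected {len(KEYPOINTS)} key points, got {len(points)}")
    named = dict(zip(KEYPOINTS, points))
    return [joint_angle(named[a], named[v], named[b]) for a, v, b in JOINT_TRIPLES]
```

Angles are invariant to where the person appears in the frame and to their apparent size. That matters for drone footage, where people show up at varying positions and scales.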

The human pose estimation pipeline can be used to predict violence in crowds and public places. The framework first detects people in frames captured by the drone camera. Image patches containing people are fed to the SHDL network, where a ScatterNet front end extracts hand-crafted features from the input images. The features extracted from three layers are concatenated and fed into the four convolutional layers of the regression network at the back end.
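The ScatterNet front end is, in essence, a cascade of wavelet filtering followed by a modulus, with coefficients from several layers concatenated into one feature vector. Below is a toy 1D sketch of that cascade. It assumes complex Gabor filters at three dyadic scales and uses a global average instead of the usual local low-pass filter. The real front end works on 2D image patches with its own filter bank, so every function name and parameter here is illustrative only.

```python
import cmath
import math


def gabor(xi: float, sigma: float) -> list[complex]:
    """Complex band-pass (Gabor) filter centred at angular frequency `xi`, width `sigma` samples."""
    half = int(3 * sigma)
    return [cmath.exp(1j * xi * t) * math.exp(-t * t / (2 * sigma * sigma))
            for t in range(-half, half + 1)]


def conv_modulus(x: list[float], filt: list[complex]) -> list[float]:
    """Modulus of the circular convolution |x * filt|."""
    n, half = len(x), len(filt) // 2
    out = []
    for i in range(n):
        acc = 0j
        for k, h in enumerate(filt):
            acc += h * x[(i - (k - half)) % n]  # filter tap at offset t = k - half
        out.append(abs(acc))
    return out


def mean(v: list[float]) -> float:
    return sum(v) / len(v)


def scattering_features(x: list[float], num_scales: int = 3) -> list[float]:
    """Concatenate order-0, order-1 and order-2 coefficients (three layers), globally averaged."""
    # Filter bank: scale j has centre frequency pi / 2**j and width 2**j samples.
    bank = [gabor(math.pi / 2 ** j, 2.0 ** j) for j in range(1, num_scales + 1)]
    layer0 = [mean(x)]
    first = [conv_modulus(x, f) for f in bank]          # U1[j] = |x * psi_j|
    layer1 = [mean(u) for u in first]
    layer2 = [mean(conv_modulus(first[j1], bank[j2]))   # |U1[j1] * psi_j2| for coarser j2 only
              for j1 in range(num_scales) for j2 in range(j1 + 1, num_scales)]
    return layer0 + layer1 + layer2
```

With three scales this yields 1 + 3 + 3 = 7 numbers. Because of the modulus and averaging, a circularly shifted input gives the same features up to rounding. This stability is the property that makes scattering features attractive as a fixed, untrained front end.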

The average accuracy of violence detection in the new system is 88.8%. By activity, it reaches 89% for punching, 94% for kicking, 82% for shooting, 85% for strangling, and 92% for stabbing. This is well above the roughly 76% achieved by the 2014 system.
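The final step assigns each pose to one of the five activities with an SVM. The article does not say which SVM variant or kernel the authors used. As an assumption, the sketch below trains linear SVMs one-vs-rest with a Pegasos-style subgradient method and predicts the activity with the largest decision value. The training vectors in the usage are toy numbers, not the paper's limb-orientation features or dataset.

```python
# Label set matching the five activities reported in the text.
ACTIVITIES = ["punching", "kicking", "shooting", "strangling", "stabbing"]


def dot(w: list[float], x: list[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_svm(xs: list[list[float]], ys: list[int],
                     lam: float = 0.01, epochs: int = 20) -> list[float]:
    """Pegasos-style subgradient descent on the L2-regularized hinge loss; ys are +1/-1.

    A constant 1.0 is appended to every x so the last weight acts as a bias
    (as a simplification, it is regularized like the other weights).
    """
    w = [0.0] * (len(xs[0]) + 1)
    t = 0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            t += 1
            eta = 1.0 / (lam * t)                     # decreasing step size
            xb = list(x) + [1.0]
            margin = y * dot(w, xb)                   # evaluated at the current w
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink: gradient of the L2 term
            if margin < 1.0:                          # hinge loss active: step toward y * x
                w = [wi + eta * y * xi for wi, xi in zip(w, xb)]
    return w


def train_one_vs_rest(xs, labels, lam=0.01, epochs=20):
    """One binary SVM per activity: that activity (+1) against all others (-1)."""
    return {c: train_linear_svm(xs, [1 if lab == c else -1 for lab in labels], lam, epochs)
            for c in sorted(set(labels))}


def predict(models: dict[str, list[float]], x: list[float]) -> str:
    """Pick the activity whose classifier gives the largest decision value."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: dot(models[c], xb))
```

For example, you can build toy 5-dimensional vectors where activity `c` has a large value in position `c` and zeros elsewhere, then train with `train_one_vs_rest`. `predict` then recovers the activity of each training vector and of a new vector such as `[0, 0, 10.5, 0, 0]` ("shooting"). A linear model keeps classification cheap enough for real-time use. A kernel SVM would be the natural swap if the angle features are not linearly separable.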

The paper was published on the arXiv.org preprint server on June 3, 2018, and will be presented at the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops.