In this very late article, I will explain why, in my opinion, in most cases when developing an application data model, it is necessary to adhere to the "database first" approach. Instead of the "Java [any other language] first" approach, which will lead you to a long path full of pain and suffering as soon as the project starts to grow.

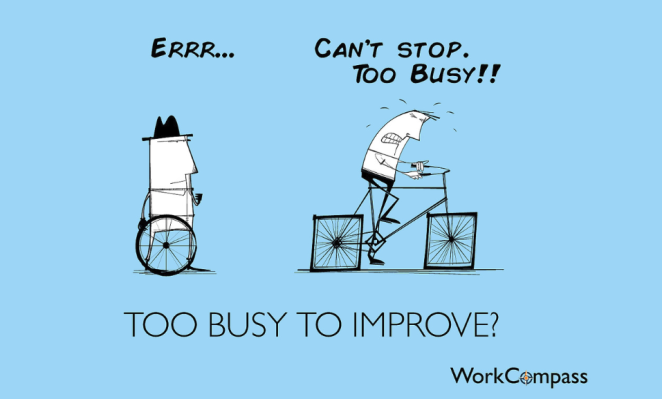

"Too busy to get better" Licensed CC by Alan O'Rourke / Audience Stack . Original image

This article is inspired by a recent question on StackOverflow .

Interesting reddit-discussions / r / java and / r / programming .

Code Generation

To my surprise, one small group of users seemed shocked by the fact that jOOQ was heavily tied to generating the source code.

While you can use jOOQ exactly as you like, the preferred way (according to the documentation) is to start with the already existing database schema, then generate the necessary client classes (corresponding to your tables) using jOOQ, and after that, write type-safe queries for these tables:

for (Record2<String, String> record : DSL.using(configuration)

Code can be generated either manually outside the assembly, or automatically with each assembly. For example, such generation can occur immediately after the installation of Flyway migrations , which can also be launched both manually and automatically.

Source Code Generation

There are different philosophies, advantages and disadvantages with regard to these approaches to code generation, which I do not want to discuss in this article. But in essence, the meaning of the generated code is that it is a Java representation of what we consider to be a kind of “reference” (both inside and outside our system). In some ways, compilers do the same thing when they generate bytecode, machine code, or some other source code from source — in the end we get an idea of our “reference” in another specific language.

There are many such code generators. For example, XJC can generate Java code from XSD or WSDL files . The principle is always the same:

- There is a certain standard (external or internal), such as a specification, a data model, etc.

- You need to get your own idea of this benchmark in our familiar programming language.

And it almost always makes sense to generate this view in order to avoid unnecessary work and unnecessary mistakes.

"Type providers" and annotation processing

It is noteworthy that another, more modern, approach to generating code in jOOQ is Type Providers, ( as it is done in F # ), where the code is generated by the compiler during compilation and never exists in its original form. A similar (but less complex) tool in Java is annotation handlers, for example, Lombok .

In both cases, everything happens the same as in conventional code generation, except:

- You do not see the generated code (perhaps for many it is already a big plus?)

- You must ensure that your "reference" is available at every compilation. This does not cause any problems in the case of Lombok, which directly annotates the source code itself, which is the “reference” in this case. A bit more complicated with database models that rely on an always-available “live” connection.

What is the problem with code generation?

In addition to the tricky question of whether to generate code manually or automatically, some people believe that the code does not need to be generated at all. The reason I hear most often is that this generation is difficult to implement in the CI / CD pipeline. And yes, it's true, because we get the overhead of creating and maintaining additional infrastructure, especially if you are new to the tools you use (jOOQ, JAXB, Hibernate, etc.).

If the overhead of studying the work of a code generator is too high, then there really will be little benefit. But this is the only argument against. In most other cases, there is absolutely no point in manually writing code that is the usual representation of the model of something.

Many people claim that they have no time for this, because right now you need as soon as possible to "roll out" the next MVP. And they will be able to finish their CI / CD pipeline sometime later. In such cases, I usually say: "You are too busy to get better."

"But Hibernate / JPA makes Java first development much easier."

Yes it's true. It is both a joy and a pain for Hibernate users. With it, you can simply write a few objects, like:

@Entity class Book { @Id int id; String title; }

And everything is almost ready. Next, Hibernate will take over the entire routine on how to define this object in the DDL and in the required SQL dialect:

CREATE TABLE book ( id INTEGER PRIMARY KEY GENERATED ALWAYS AS IDENTITY, title VARCHAR(50), CONSTRAINT pk_book PRIMARY KEY (id) ); CREATE INDEX i_book_title ON book (title);

This is really a great way to get started quickly - all that remains is to launch the application.

But not everything is so rosy. There are still a lot of questions:

- Will Hibernate generate the name I need for the primary key?

- Will I create the necessary index on the TITLE field?

- Will a unique ID value be generated for each record?

Looks like no. But while the project is under development, you can always throw away your current database and generate everything from scratch by adding the necessary annotations to the model.

So, the Book class in its final form will look something like this:

@Entity @Table(name = "book", indexes = { @Index(name = "i_book_title", columnList = "title") }) class Book { @Id @GeneratedValue(strategy = IDENTITY) int id; String title; }

But you pay for it, a little later.

Sooner or later, your application gets into production, and the described scheme will stop working:

In a live and real system, you can no longer just pick up and drop your database, because the data in it is used and can cost a lot of money.

From this point on, you need to write migration scripts for every change in the data model, for example, using Flyway . At the same time, what happens to your client classes? You can either adapt them manually (which will lead to double work), or ask Hibernate to generate them (but how good are the chances that the result of such a generation will meet the expectations?). As a result, you can expect big problems.

As soon as the code gets into production, it is necessary to fix it almost immediately, and as soon as possible.

And since The installation of database migrations is not built into your assembly line; you will have to install these patches manually at your own peril and risk. There is not enough time to go back and do it right. It is enough only to blame Hibernate for all its troubles.

Instead, you could have done completely differently from the very beginning. Namely, use round wheels instead of square ones.

Forward to "Database First"

Reference data schema and control over it is in the department of your DBMS. The database is the only place where the schema is defined, and all clients have a copy of this schema, but not vice versa. The data is in your database, not in your client, so it makes sense to ensure the control of the circuit and its integrity exactly where the data is located.

This is old wisdom, nothing new. Primary and unique keys are good. Foreign keys are beautiful. The constraint check on the DB side is wonderful. Assertion (when they are finally implemented) is great.

And that's not all. For example, if you are using Oracle, you can specify:

- What tablespace is your table in?

- What is the PCTFREE value it has

- What is the size of the sequence cache (sequence)

Perhaps all this does not matter in small systems, but in larger systems you do not have to follow the path of "big data" until you squeeze all the juices from your current storage. None of the ORMs I've ever seen (including jOOQ) will allow you to use the full set of DDL parameters that your DBMS provides. ORM offer only some tools to help write DDL.

Ultimately, a well-thought-out scheme should be written only by hand using a DBMS-specific DDL. The entire automatically generated DDL is only an approximation to this.

What about the client model?

As mentioned earlier, you will need some representation of the database schema on the client side. Needless to say, this presentation must be synchronized with the real model. How to do it? Of course using code generators.

All databases provide access to their meta-information through good old SQL. So, for example, you can get a list of all tables from different databases:

It is these queries (as well as similar queries for views, materialized views and table functions) that are executed when the DatabaseMetaData.getTables () method of a specific JDBC driver is called, or in the jOOQ-meta module.

From the results of such queries, it is relatively easy to create any client representation of the database model, no matter which data access technology is used.

- If you are using JDBC or Spring, you can create a group of String constants

- If you use JPA, you can create objects yourself

- If using jOOQ, you can create jOOQ metamodels

Depending on the number of functions offered by your data access API (jOOQ, JPA or something else), the generated metamodel can be really rich and complete. As an example, the implicit join function in jOOQ 3.11, which relies on meta-information about the relationship of foreign keys between your tables .

Now, any changes to the database schema will automatically update the client code.

Imagine renaming a column in a table:

ALTER TABLE book RENAME COLUMN title TO book_title;

Are you sure you want to do this job twice? Never. Simply zamkite this DDL, run the assembly and enjoy the updated object:

@Entity @Table(name = "book", indexes = {

The client also does not need to be compiled each time (at least until the next change in the database schema), which can be a big plus!

Most DDL changes are also semantic changes, not just syntactic. Thus, it is great to see in the generated client code what exactly the recent changes in the database have affected.

The truth is always the same

Regardless of which technology you use, there should always be only one model, which is the benchmark for the subsystem. Or, at a minimum, we should strive for this and avoid confusion in the business, where the “standard” is everywhere and nowhere else. It makes everything so much easier. For example, if you exchange XML files with any other system, you probably use XSD. Like INFORMATION_SCHEMA jOOQ metamodel in XML format: https://www.jooq.org/xsd/jooq-meta-3.10.0.xsd

- XSD is well understood

- XSD perfectly describes XML content and allows validation in all client languages.

- XSD makes it easy to manage versions and maintain backward compatibility

- XSD can be turned into Java code with XJC

Let us pay special attention to the last point. When communicating with the external system via XML messages, we must be sure that the messages are valid. And it really is very easy to do with things like JAXB, XJC and XSD. It would be crazy to think about the relevance of the Java-first approach in this case. The generated XML-based objects will be of poor quality, poorly documented and difficult to expand. And if there is an SLA for such interaction, then you will be disappointed.

Honestly, this is similar to what is happening with various APIs for JSON, but this is a completely different story ...

How are databases worse?

When working with a database, everything is the same here. The database owns the data, and it must also be the master of the data schema. All schema modifications must be performed by DDL directly in order to update the reference.

After updating the benchmark, all customers must update their ideas about the model. Some clients may be written in Java using either jOOQ and / or Hibernate or JDBC. Other clients can be written in Perl (good luck to them) or even in C #. It does not matter. The main model is in the database. While the models created using ORM are of poor quality, not well documented and difficult to expand.

Therefore, do not do it, and from the very beginning of development. Instead, start with a database. Create an automated CI / CD pipeline. Use code generation in it to automatically generate a database model for clients with each build. And stop worrying, everything will be fine. All that is required is a bit of initial effort in setting up the infrastructure, but as a result you will gain in the development process for the rest of your project for years to come.

No thanks.

Explanations

To consolidate: this article in no way asserts that the database model should apply to your entire system (to the subject area, business logic, etc.). My statements consist only in the fact that the client code interacting with the database should be only a representation of the database schema, but not to define and form it in any way.

In two-tier architectures, which still have a place to be, the database schema may be the only source of information about the model of your system. However, in most systems, I view the data access level as a “subsystem” that encapsulates the database model. Something like this.

Exceptions

As in any other good rule, ours also has its exceptions (and I have already warned that the database first approach and code generation is not always the right choice). These exceptions (perhaps the list is not complete):

- When the scheme is unknown in advance and it must be investigated. For example, you are a provider of a tool that helps users navigate through any scheme. Of course, there can be no code generation. But in any case, it is necessary to deal directly with the database itself and its schema.

- When for some task it is necessary to create a scheme "on the fly". This may be similar to one of the variations of the Entity-attribute-value pattern , since You do not have a well-defined scheme. As well as there is no certainty that the RDBMS in this case is the right choice.

The peculiarity of these exceptions is that they quite rarely meet in wildlife. In most cases, when using relational databases, the scheme is known in advance and is the “standard” of your model, and clients should work with a copy of this model generated using code generators.