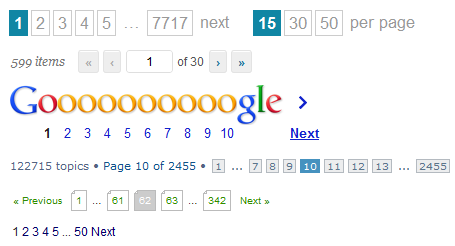

Hello, my name is Dmitry Karlovsky and I ... do not like to read books, because while you are turning over a page, you are breaking out of a fascinating story. And it is worth a little to linger, as you forget on what the last sentence of the previous page was cut off, and you have to scroll back to re-read it. And if it's not so scary with physical books, then with the issue of the rest server everything is much sadder - there are some data on the page now, and in a second they are completely different. Let's think about how it happened, who is to blame and the main thing - what to do.

Problem

So, we need to give all the messages on the request "padzhinatsiya", starting with the most recent ( last modified from the top ) or even in some tricky order. All is well, as long as we have less than a hundred of these messages - we simply make a select from the database and return the data:

Request from client:

GET /message/text=/

Request to database:

SELECT FROM Message WHERE text LICENE "" ORDER BY changed DESC

JSON response scheme to the client:

Array<{ id : number , text : string }>

But the number of messages is growing and we have the following troubles:

- Requests to the database are becoming more and more slow, as you have to scoop up more and more data.

- Data transfer over the network takes more time.

- Rendering this data on the client is getting longer and longer.

Starting from a certain threshold, the delays become so significant that it becomes impossible to use our site. If, of course, he has not yet laid down, tired of a large number of parallel heavy requests.

The simplest solution that may be the first one that comes to mind, and now you can meet it in any toaster - not all data in a crowd, but paginated pages. All we need to do is just to drop one additional parameter from the client into the database request:

GET /message/text=/page=5/

SELECT FROM Message WHERE text LICENE "" ORDER BY changed DESC SKIP 5 * 10 LIMIT 10

SELECT count(*) FROM Message WHERE text LICENE ""

{ pageItems : Array<{ id : number , text : string }> totalCount : number }

Well, yes, we still had to recount all the messages so that the client could draw a list of pages or calculate the height of the virtual scroll, but at least we don’t need to get all these 100,500 messages from the database.

And everything would be fine if we had some kind of non-popular forum on a topic that was no longer relevant. But they write and write to us, they write and write, and while the user reads the fifth page, the list of messages changes beyond recognition: new ones are added and old ones are deleted. Thus, we get problems of two types from the user's point of view:

- On the next page there may again appear messages that have already been on the previous one.

- The user will not see any messages at all, as they managed to move from 6 pages to 5 exactly between the user's transition from 5 to 6.

In addition, we still have performance issues. Each transition to the next page leads to the fact that we need to re-do as many as two search queries into the database with an increasing number of elements being skipped from the previous pages.

Yes, and a competent implementation on the client side is not that very simple - you should always be prepared for the fact that any server response can return a new total number of messages, which means we will need to redraw the Padginator and redirect to another page if the current suddenly turns out is empty. And of course you can not fall in the case of duplicates.

In addition, sometimes the client needs to update the search results, but all the same it will receive data that it may already have from previous queries.

As you can see, padzhinatsiya has many problems. Is there no better solution?

Decision

First, let's note that when working with a database there are 2 operations that are different in their essence:

- Search. Relatively heavy search for pointers to data on some query.

- Sample. Relatively simple operation of actually receiving data.

It would be ideal:

- Search once and memorize its results somewhere as a snapshot at a specific point in time.

- Quickly select data in small portions as needed.

Where to store snapshots? there are 2 options:

- On server. But then we clog it with a bunch of garbage with search results that need to be cleaned over time.

- Ka client. But then you have to immediately transfer the entire snapshot to the client.

Let's estimate the size of the snapshot, which is just a list of identifiers. It is doubtful that the user had enough patience to at least 100 pages, without using filtering and sorting. Suppose we have a page with 20 elements each. Each identifier will occupy no more than 10 bytes in our json view. Multiply and get no more than 20kb. And most likely much less. It is reasonable to set a hard limit on the size of the issue in, say, 1000 items.

GET /message/text=/

SELECT id FROM Message WHERE text LICENE "" ORDER BY changed DESC LIMIT 1000

Array<number>

Now the client can draw at least an padzhinator, at least a virtual scroll, requesting data only for identifiers of interest to him.

GET /message=49,48,47,46,45,42,41,40,39,37/

SELECT FROM Message WHERE id IN [49,48,47,46,45,42,41,40,39,37]

Array< { id : number , text : string } | { id : number , error : string } >

What we finally get:

- Normalized API: search separately, sample data separately.

- Minimize the number of search queries.

- You can either not request data that is already uploaded, or update it in the background.

- Relatively simple and universal code on the client side.

Among the shortcomings can be noted except:

- To show something the user needs to make at least 2 consecutive requests.

- It is necessary to handle the case when the identifier is, and the data on it is no longer available.