This story began on a frosty spring evening, when a question came to my mind: is there a way to determine how much of an arbitrary geometric figure is filled with paint (that is, what percentage of it is painted at the moment)? And to do it so that it doesn't just avoid slowing things down, but flies at 60 fps on the weakest mobile devices.

For those who didn't immediately catch what I'm talking about, let me explain: the problem can be solved with a raster approach and ... with a non-raster approach.

In the first case, everything is simple: flood fill and the related algorithms have been thoroughly studied and implemented in programming languages for every taste. There is an array of pixels to be filled, and there are their boundaries. We count the filled points, divide by the total number, and voilà, the coveted percentage is at the output. But with a large number of pixels (and you know yourself what ppi modern devices have), plus many such figures, we run into a pile of calculations every frame, which pleasantly warm the device, but not the soul.
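
For comparison, here is roughly what the raster approach looks like, as a minimal Python sketch rather than Unity code. The grid representation, the 4-connected flood fill, and the names `region_pixels` and `raster_fill_percent` are assumptions for illustration. Every call walks all the pixels of the figure, which is where the per-frame cost comes from.

```python
from collections import deque

def region_pixels(mask, seed):
    """Collect the pixels of one figure with a 4-connected flood fill.

    mask[y][x] is True for pixels inside figure boundaries; seed is an (x, y)
    pixel known to lie inside the figure being measured.
    """
    height, width = len(mask), len(mask[0])
    seen = {seed}
    queue = deque([seed])
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and (nx, ny) not in seen:
                seen.add((nx, ny))
                queue.append((nx, ny))
    return seen

def raster_fill_percent(mask, painted, seed):
    """Percentage of the figure's pixels that are already painted."""
    pixels = region_pixels(mask, seed)
    done = sum(1 for x, y in pixels if painted[y][x])
    return 100.0 * done / len(pixels)
```

For example, a four-pixel figure with two of its pixels painted gives 50%.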

Anyway, working with raster seemed unsporting. My gaze turned to the omnipotent polygons. A few exciting hours of unhurried coding confirmed the hypothesis: you can use a thing called vertex color.

A little bit about vertex color

Natively, the extra information channel available in the mesh data structure is `mesh.colors`. In theory it can be used for any purpose, depending on what the shader does with it, but in this case the coveted byte stores exactly the current paint fill level of each vertex. The shader also uses it when drawing, so a single Unity material is enough for an unlimited number of multi-colored meshes. Most interesting of all, vertex color values are interpolated between vertices by the hardware, which makes smooth gradients easy to create.
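
The hardware interpolation mentioned here is barycentric blending: for every pixel of a triangle, the GPU mixes the three vertex values with weights that depend on where the pixel lies. A minimal Python sketch of the same math, assuming a 2D triangle and a fill level stored as a 0-255 value per vertex (the function names are hypothetical, and a real shader gets this for free):

```python
def barycentric(p, a, b, c):
    """Barycentric weights of 2D point p inside triangle (a, b, c); they sum to 1."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb

def interpolate_fill(p, tri, fills):
    """Fill level at p, blended from the three per-vertex values."""
    wa, wb, wc = barycentric(p, *tri)
    fa, fb, fc = fills
    return wa * fa + wb * fb + wc * fc
```

Halfway along an edge between a fully painted vertex (255) and an unpainted one (0), the blended value is 127.5, which is the soft gradient the shader shows.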

I think it is worth explaining why I needed the notorious fill percentage the article began with. The main idea of the coloring app was this: the final image consists of a set of polygons, and the app consistently and automatically leads the user through them, element by element. So until you finish painting one piece, you don't move on to the next. This design seemed very elegant and enticing to me, and, given the global dominance of "pixel" coloring apps in the stores, also fresh.
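
A minimal sketch of that logic in Python, assuming each element's per-vertex fill values are available as a list of 0-255 numbers. The `painted_level` cut-off and both function names are hypothetical; `done_at` defaults to 100% here, while the testing section further down explains why the real trigger ended up at 97%.

```python
def vertex_fill_percent(vertex_fills, painted_level=200):
    """Share of vertices, in percent, whose fill value reached painted_level.

    vertex_fills: per-vertex fill levels 0..255, as kept in the vertex colors.
    painted_level: hypothetical cut-off for counting a vertex as painted.
    """
    painted = sum(1 for f in vertex_fills if f >= painted_level)
    return 100.0 * painted / len(vertex_fills)

def current_element(elements, done_at=100.0):
    """Index of the first element in the queue that is not finished yet, or None."""
    for index, fills in enumerate(elements):
        if vertex_fill_percent(fills) < done_at:
            return index
    return None
```

Counting vertices instead of pixels keeps the check proportional to the mesh size, not to the screen resolution.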

The first steps

Needless to say, building a full-fledged coloring app required many more intriguing solutions. First, I wanted the coloring, for all the polygonal nature of the app, to feel as raster-like as possible: the paint had to spread under the finger and look more or less realistic. The original requirement of maximum performance never went away and kept hanging over the whole process like a menacing cumulus cloud.

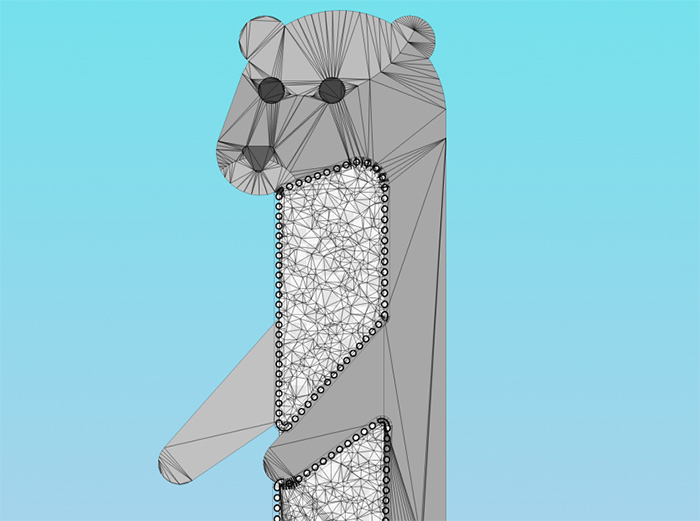

The first thing to do was a proper tessellation (splitting a large polygon made of a set of triangles into a stochastic pile of small triangles). After all, if we have an array of vertices and write vertex color into it as we paint, we can easily tell whether the figure is completely painted over and which pieces are still blank, just like with a pixel algorithm, but with much more freedom.
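
The author's tessellation produces a stochastic set of small triangles; as a simpler stand-in, the sketch below uses uniform midpoint subdivision, which splits every triangle into four per level. It is a hypothetical Python illustration, not the app's code, but it shows the point: each level multiplies the triangle count by four, so the vertex array quickly gets dense enough to track paint with.

```python
def _midpoint_index(vertices, cache, i, j):
    """Index of the midpoint of edge (i, j), created once and shared by both triangles."""
    key = (min(i, j), max(i, j))
    if key not in cache:
        cache[key] = len(vertices)
        (ax, ay), (bx, by) = vertices[i], vertices[j]
        vertices.append(((ax + bx) / 2, (ay + by) / 2))
    return cache[key]

def subdivide(vertices, triangles, levels):
    """Split every triangle into four, `levels` times.

    vertices:  list of (x, y)
    triangles: list of (i, j, k) index triples
    Shared edges reuse the same midpoint vertex, so no cracks appear.
    """
    vertices = list(vertices)
    for _ in range(levels):
        cache = {}
        new_triangles = []
        for a, b, c in triangles:
            ab = _midpoint_index(vertices, cache, a, b)
            bc = _midpoint_index(vertices, cache, b, c)
            ca = _midpoint_index(vertices, cache, c, a)
            # Four children with the same winding order as the parent.
            new_triangles += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
        triangles = new_triangles
    return vertices, triangles
```

A square made of two triangles becomes a 5 by 5 grid of vertices and 32 triangles after two levels.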

Then began a fascinating journey into the world of shaders. As you understand, I cannot reveal all the finds and secrets, but I will say that the brush effect, even with some paint spreading from the finger along the triangles, was achieved through the interplay of a noise map and old-school raycasting from the fingers. Using vertex color made it possible to have one Unity material for absolutely all the parts of the figure, so the finished app makes no more than 5-7 draw calls (depending on whether menus and particles are on screen).
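
The shader tricks stay secret, but the CPU side of such a brush can be sketched. The Python below assumes the raycast from the finger has already produced a touch point in the figure's local 2D space, and that a noise function returning values in 0..1 roughens the brush edge; `paint_vertices`, the linear falloff, and the `noise` parameter are all hypothetical.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]

def paint_vertices(vertices: List[Point], fills: List[int], touch: Point,
                   radius: float, strength: int = 255,
                   noise: Callable[[float, float], float] = lambda x, y: 1.0) -> List[int]:
    """Raise the fill value of vertices near the touch point (modifies fills in place).

    touch: hit point of the ray cast from the finger, in the figure's local space.
    noise: hypothetical noise lookup in 0..1 that roughens the brush edge.
    """
    for i, (x, y) in enumerate(vertices):
        distance = math.dist((x, y), touch)
        if distance >= radius:
            continue
        falloff = 1.0 - distance / radius  # linear: full strength at the center
        fills[i] = min(255, fills[i] + int(strength * falloff * noise(x, y)))
    return fills
```

Calling it again on the same spot keeps the center at 255 and raises the edge vertices further, which roughly mimics paint building up under a finger.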

The outline is drawn with the usual Unity Line Renderer, which is treacherously buggy on some figures: it drifts and shows flaws at the joints. I couldn't defeat it, so rewriting the component from scratch is a priority task. The finger trail is also a standard Trail Renderer, but its shader uses a depth (z) test to keep trail segments from overlapping each other and creating ugly artifacts. The checkerboard background texture helps, among other things, to estimate the size of the element being painted: the larger the element, the smaller the cells.
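
One plausible reading of this, assuming the checker cells have a fixed size in world units and the camera zooms so that the current element fills the screen (as described in the UX & UI section): the bigger the element, the stronger the zoom-out, and the smaller each cell appears. A hypothetical one-function sketch:

```python
def on_screen_cell_px(world_cell, element_size, viewport_px):
    """On-screen size in pixels of a checker cell that is world_cell units wide.

    Assumes the camera zooms so that element_size world units span viewport_px pixels.
    """
    pixels_per_unit = viewport_px / element_size
    return world_cell * pixels_per_unit
```

Doubling the element size halves the apparent cell size, so the grid works as a built-in scale ruler.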

Features you don't expect

During testing it turned out that there were often empty vertices somewhere in the corners of a figure, which were hard to spot visually. Although the trigger for switching to the next element fired at a 97% fill level, the "what do I do now?" situation, with a fill level between 90% and 97%, came up quite often and confused users (most of whom were no older than 12). I didn't want to set the trigger below 97%, because then you get the "I haven't finished painting yet, and it has already jumped ahead" effect.
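
A minimal sketch of the decision made after each stroke, in Python. The 97% and 90% thresholds come from the text, and the 3-second interval comes from the clustering paragraph below; treating the hint as something that fires only inside the 90-97% zone, as well as the names `decide` and `Action`, are assumptions.

```python
from enum import Enum

ADVANCE_AT = 97.0    # switch threshold from the article, in percent
HINT_FROM = 90.0     # lower edge of the "what do I do now?" zone (assumed hint range)
HINT_INTERVAL = 3.0  # seconds between clustering runs

class Action(Enum):
    NONE = "none"
    ADVANCE = "advance"  # element counts as painted: go to the next one
    HINT = "hint"        # run clustering and highlight the unpainted spot

def decide(fill_percent, finger_down, now, last_hint_at):
    """Pick what to do after a stroke, given the current fill level in percent."""
    if fill_percent >= ADVANCE_AT:
        return Action.ADVANCE
    if (not finger_down and fill_percent >= HINT_FROM
            and now - last_hint_at >= HINT_INTERVAL):
        return Action.HINT
    return Action.NONE
```

Keeping the expensive hint search behind a lifted finger and a time gate means it never competes with painting for frame time.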

So I reluctantly made the acquaintance of Madame Clustering. Imagine: a polygon with a bunch of points inside, some of which are "special", sometimes on their own, sometimes in groups. The task is to find and highlight the largest "group". An ordinary math problem. None of the traditional algorithms I found fit, for various reasons, so I had to write my own. Hack upon hack, but it worked, and the under-painted areas began to stand out with a nice animated circle. For the sake of optimization, the algorithm runs once every 3 seconds, and only after the user lifts their finger off the screen in a "what do I do now?" manner. It looks quite organic.
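
The author's own clustering algorithm isn't published, so the sketch below substitutes a plain single-linkage grouping: unpainted vertices closer than `eps` to each other fall into one group, and the largest group gets a circle for the highlight. The circle is centered on the group's centroid and is not the minimal enclosing circle. The code is O(n²) and purely illustrative; a spatial grid would be needed for large meshes, and `eps` is a hypothetical tuning parameter.

```python
import math

def largest_cluster(points, eps):
    """Group points transitively linked by distance <= eps; return the biggest group."""
    unvisited = set(range(len(points)))
    best = []
    while unvisited:
        start = unvisited.pop()
        group, stack = [start], [start]
        while stack:
            i = stack.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= eps]
            for j in near:
                unvisited.remove(j)
                group.append(j)
                stack.append(j)
        if len(group) > len(best):
            best = group
    return [points[i] for i in best]

def highlight_circle(cluster):
    """Center (centroid) and radius of a circle covering every point of the cluster."""
    cx = sum(x for x, _ in cluster) / len(cluster)
    cy = sum(y for _, y in cluster) / len(cluster)
    radius = max(math.dist((cx, cy), p) for p in cluster)
    return (cx, cy), radius
```

The input would be the positions of vertices whose fill is still below the "painted" level.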

After such a brainstorm, implementing a flexible "coloring queue" at the testers' request, namely letting the user choose the order in which to paint the elements, took just one evening. All you need is to find the geometric center of each mesh and sort the meshes as needed: left to right, top to bottom, and so on. For greater clarity, particles in the background show the direction of the queue.
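
A sketch of that ordering in Python, assuming y points up and approximating each mesh's geometric center by the mean of its vertices (an area-weighted centroid would be more precise for uneven meshes). The direction names and `coloring_queue` are hypothetical.

```python
def centroid(vertices):
    """Approximate geometric center of a mesh: the mean of its vertices."""
    n = len(vertices)
    return (sum(x for x, _ in vertices) / n, sum(y for _, y in vertices) / n)

# Sort keys per direction; the second component breaks ties.
ORDERS = {
    "left_to_right": lambda c: (c[0], -c[1]),
    "right_to_left": lambda c: (-c[0], -c[1]),
    "top_to_bottom": lambda c: (-c[1], c[0]),
    "bottom_to_top": lambda c: (c[1], c[0]),
}

def coloring_queue(meshes, direction):
    """Indices of meshes in the order they should be painted."""
    centers = [centroid(m) for m in meshes]
    key = ORDERS[direction]
    return sorted(range(len(meshes)), key=lambda i: key(centers[i]))
```

Since the centers do not change, they can be computed once per figure and the queue re-sorted instantly whenever the user picks another direction.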

Illustration of a queue

This shows the default queue (as the artist intended). If you turn on the "directional queue" mode with one of the buttons below, the coloring order changes and the particles move in the chosen direction.

UX & UI

I am generally fond of the idea of guided automation in apps, so each element is centered and scaled so that it can be painted with a finger without scrolling the screen. The downside of this approach is that it is not always clear which part of the figure is currently on screen. As it turned out, users even like this small challenge, since it trains short-term memory and the ability to relate pieces of information: you need to keep the big picture in mind. You can get to the bird's-eye view of the figure in two ways: with a pinch gesture or by pressing the zoom button.
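
Centering and scaling an element comes down to fitting its bounding box into the viewport with a uniform scale. A minimal Python sketch, assuming axis-aligned bounds and screen coordinates computed as `world * scale + offset`; the `margin` parameter and the function name are hypothetical:

```python
def fit_to_viewport(bounds, viewport, margin=0.1):
    """Scale and offset that center an element and make it fill the viewport.

    bounds:   (min_x, min_y, max_x, max_y) of the element in world units
    viewport: (width, height) of the drawing area in pixels
    margin:   fraction of the viewport left empty on each side
    Returns (scale, offset_x, offset_y) so that screen = world * scale + offset.
    """
    min_x, min_y, max_x, max_y = bounds
    vw, vh = viewport
    usable = 1.0 - 2 * margin
    # Uniform scale limited by the tighter axis, so the element is not distorted.
    scale = min(vw * usable / (max_x - min_x), vh * usable / (max_y - min_y))
    cx, cy = (min_x + max_x) / 2, (min_y + max_y) / 2
    return scale, vw / 2 - cx * scale, vh / 2 - cy * scale
```

The same function would also cover the palette case described later: when the palette takes a third of the screen, calling it with the height multiplied by 2/3 gives the new scale and offset relative to the reduced drawing area.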

Following the precepts of Apple's Human Interface Guidelines, I decided to keep the number of on-screen buttons to a minimum. Besides the zoom in/out button and the obvious button for exiting to the menu, there is also a button that brings up the palette: you can paint with the "default" colors set by the artist or with colors of your own choice.

In addition, in bird's-eye mode you can change the background gradient (each press generates a random one) or enter "repaint" mode, which lets you correct an already painted element. Yes, I had to hide this functionality, but that is quite justified: in all the testing, nobody ever asked how to do it.

About the palette

The palette itself was reworked twice. At first I simply placed a number of color squares on the screen, but users asked for more colors. I didn't want a scrolling interface, so the "color-shade" scheme appeared: the user first taps a base color and then picks one of its shades. The palette is dismissed with a button or a sweeping swipe down. When it appears on screen, the artist's workspace shrinks by 1/3, so the current figure has to be "rescaled" to the changed viewport size.
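
How the shades are derived isn't specified; one straightforward option, sketched below in Python with the standard `colorsys` module, keeps the base color's hue and saturation and steps its lightness. The number of shades and the lightness range are hypothetical parameters.

```python
import colorsys

def shades(base_rgb, count=5, lightness_range=(0.25, 0.85)):
    """Shades of one base color, dark to light, keeping its hue and saturation.

    base_rgb: (r, g, b) floats in 0..1; count and lightness_range are assumptions.
    """
    hue, _, saturation = colorsys.rgb_to_hls(*base_rgb)
    low, high = lightness_range
    step = (high - low) / (count - 1)
    return [colorsys.hls_to_rgb(hue, low + i * step, saturation) for i in range(count)]
```

With a handful of base colors and a handful of shades each, the two-step picker offers many colors without any scrolling.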

For dessert

The key missing link in the whole picture was the reward: a visual and psychological payoff the user receives upon finishing the coloring. The idea lay on the surface: the figure is painted again automatically, from scratch, in fast-forward, and exactly the way the user did it; in other words, a 15-20 second timelapse. This is implemented by recording the sequence in which the user touched the vertices of the figures and then playing it back by "feeding" this data to the drawing engine with delays (via coroutines). Each mesh is duplicated several times to achieve "fade-in" and "fade-out" effects.
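
A minimal sketch of the record-and-replay idea in Python. The app feeds the data through Unity coroutines; here a plain loop with `time.sleep` stands in for them, and the event format, the 18-second default target (picked from the 15-20 second range), and all names are assumptions.

```python
import time
from dataclasses import dataclass, field

@dataclass
class StrokeRecorder:
    """Stores (time in seconds, element index, touched vertex indices) events."""
    events: list = field(default_factory=list)

    def record(self, t, element, vertex_ids):
        self.events.append((t, element, tuple(vertex_ids)))

def replay_schedule(events, target_seconds=18.0):
    """Compress a recorded session so that it plays back in about target_seconds.

    Returns (delay_before_event, element, vertex_ids) tuples.
    """
    if not events:
        return []
    start, end = events[0][0], events[-1][0]
    speed = max((end - start) / target_seconds, 1.0)  # speed up, never slow down
    schedule, previous = [], start
    for t, element, vertex_ids in events:
        schedule.append(((t - previous) / speed, element, vertex_ids))
        previous = t
    return schedule

def play(schedule, paint):
    """Feed the events to a paint callback with the scheduled pauses."""
    for delay, element, vertex_ids in schedule:
        time.sleep(delay)
        paint(element, vertex_ids)
```

A three-minute session recorded this way replays in 18 seconds, with the relative rhythm of the user's strokes preserved.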

Of course, the timelapse is recorded to a video file while it plays, and after the visual extravaganza the user is offered to save or share the newly created masterpiece. Fortunately, just that spring a plugin appeared in the Asset Store that can capture screen video completely and cross-platform (after some configuration), because writing such a tool from scratch goes far beyond my programming skills, and in general I am a designer.

Instead of a conclusion

This is where the thousand words I allotted to the first installment end. In the following parts, I plan to tell about heroic battles with Unity UI while developing the second part of the app, the picture selection menu, and also to count the bumps on the difficult road of ASO.