Emotional artificial intelligence, besides its obvious connection with machine learning and neural networks, is directly related to psychology and, in particular, to the science of emotion. The field faces several challenges today. One of them is building an accurate and complete classification of emotional states. Among other things, the annotation process depends directly on it, because annotators must match observed facial expressions and other non-verbal signals to particular emotions and affective states.

Emotion classification

Today, three approaches to categorizing emotional data are widely used: discrete models, multidimensional models, and hybrid models that combine the first two.

The discrete approach is based on the categories of emotion that we find in natural language. Each emotion is associated with a semantic field, that is, a specific meaning or set of meanings that we attribute to a certain emotional state. The theory of basic emotions is one of the best-known examples of the discrete approach.

The first mentions of something similar to what we now call basic, or primary, emotions can be found in early philosophical texts, for example in the Greek and Chinese traditions. Plato, in his famous work "Republic", treated emotions as one of the main components of the human mind. In Aristotle's functional theory of the emotions, reason, emotions, and virtues are interconnected, and the emotional life of every healthy person is always (or almost always) aligned with reason and virtue, whether or not the person is aware of it. Chinese Confucianism describes four to seven "qing", emotions that are natural for any person.

In the 20th century the topic became a focus of scientific interest, and a number of authors proposed their own lists of basic emotions, among them Paul Ekman, the author of the most widely used theory. Ekman suggested that basic emotions should be universal, in the sense that they are expressed in the same way in all cultures. Different theories name between 6 and 22 emotions (Ekman, Parrott, Frijda, Plutchik, Tomkins, Matsumoto; see Cambria et al., 2012 for details).

The existence of basic emotions remains controversial today (see, for example, Barrett & Wager, 2006, or Crivelli & Fridlund, 2018). A number of studies have shown a link between basic emotions and the activity of individual brain structures (for example, Murphy et al., 2003, and Phan et al., 2002), although other studies do not confirm this correlation (see Barrett & Wager, 2006). Interestingly, some studies of emotion perception in isolated ethnic groups do not support the hypothesis that emotions are universal across cultures. The Trobrianders of Papua New Guinea are one example (see Crivelli & Fridlund, 2018, and Gendron et al., in press). In the experiment, members of the tribe were shown a photograph of a person expressing fear, but the Trobrianders perceived this expression as a signal of threat.

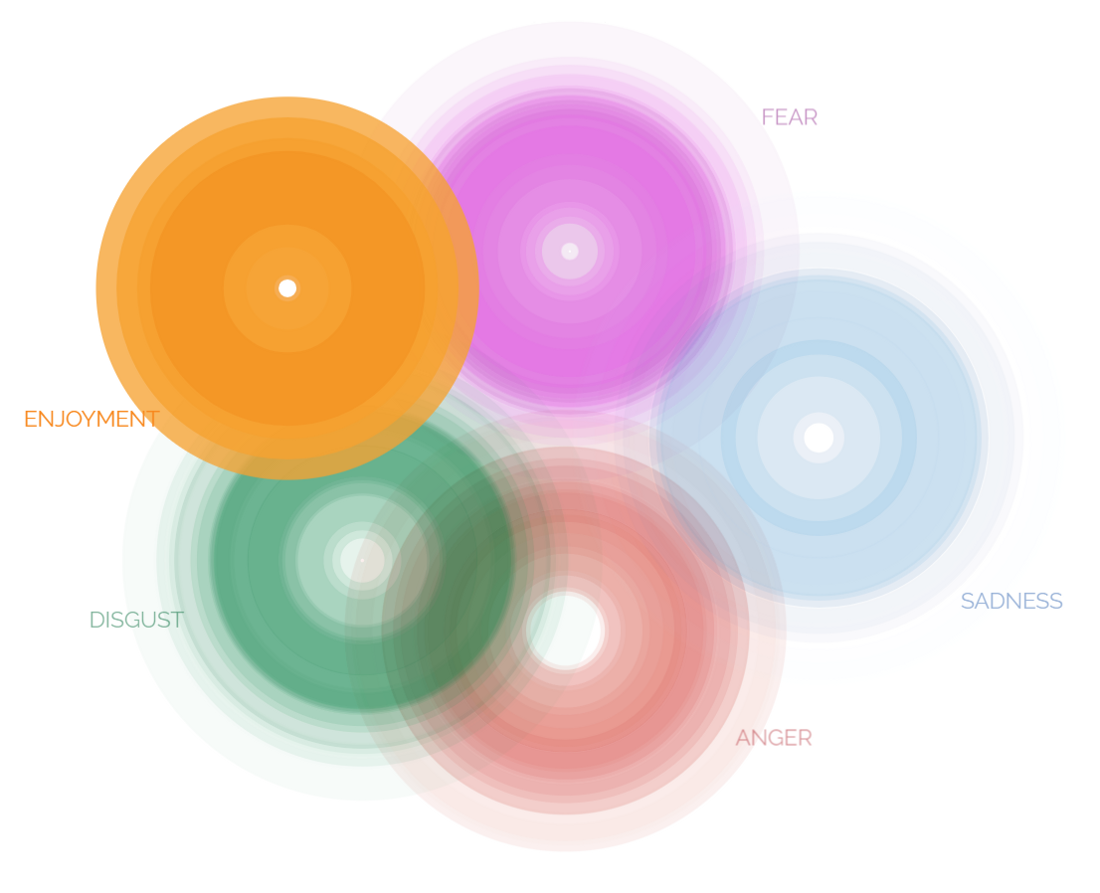

Atlas of emotions proposed by Paul Ekman: atlasofemotions.org. The original 1999 version also included “surprise.”

Today, many emotional computing solutions are based on discrete models and cover only basic emotions, most often following Ekman's theory (for example, solutions from Affectiva, a pioneer of emotional AI). This means that automatic systems are trained to recognize a rather limited number of affective states, although in real life we constantly experience many more emotions, including complex mixed ones, and rely on numerous social cues (for example, gestures) in interpersonal communication.

The other approach, the multidimensional one, represents emotions as points in a multidimensional coordinate space. Because this space is continuous, it can capture emotions that share the same nature but differ in a number of parameters. In affective science these parameters (or dimensions) are most often valence and activation (arousal), as in the RECOLA dataset by Ringeval et al. Intensity is also often used. Thus, sadness can be viewed as a less intense version of grief and a more pronounced version of pensiveness, while being more similar to disgust than, for example, to trust. The number of dimensions varies by model. Plutchik's wheel of emotions has only 2 dimensions (similarity and intensity), while Fontaine postulates 4 (valence, potency, activation, unpredictability). Any emotion in such a space is characterized by how strongly it is present along each dimension.
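To make the idea of a coordinate space more concrete, here is a minimal Python sketch that places a few emotions in a two-dimensional valence/arousal plane and measures how close they are. The coordinates and the names `PROTOTYPES`, `nearest_label`, and `distance` are assumptions made up for illustration; they are not taken from RECOLA or from any published model.

```python
import math

# Illustrative (valence, arousal) coordinates in [-1, 1].
# These numbers are assumptions chosen for the example, not dataset values.
PROTOTYPES = {
    "joy": (0.8, 0.5),
    "anger": (-0.6, 0.8),
    "sadness": (-0.7, -0.4),
    "calm": (0.5, -0.6),
}


def nearest_label(valence, arousal):
    """Return the prototype label closest (Euclidean distance) to a point."""
    point = (valence, arousal)
    return min(PROTOTYPES, key=lambda name: math.dist(PROTOTYPES[name], point))


def distance(label_a, label_b):
    """Distance between two prototype emotions in the valence/arousal plane."""
    return math.dist(PROTOTYPES[label_a], PROTOTYPES[label_b])


if __name__ == "__main__":
    print(nearest_label(0.7, 0.4))
    print(distance("sadness", "anger") < distance("sadness", "joy"))
```

With coordinates like these, a continuous measurement can still be mapped back to a discrete label when needed, and "similarity" between emotions becomes an ordinary distance.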

Hybrid models combine the discrete and multidimensional approaches. A good example is the Hourglass of Emotions, proposed by Cambria, Livingstone, and Hussain (Cambria et al., 2012). Each affective dimension is characterized by six levels of intensity with which emotions are expressed. Across the model's four affective dimensions, these levels correspond to a set of 24 labeled emotions. Thus, any emotion can be considered both as a fixed state and as part of a continuum, linked to other emotions by non-linear relationships.

Emotions in Emotional Computing

So why is the classification of emotions so important for emotional computing? At the beginning of the article we noted that the classification of emotions, and the approach we follow, directly influences annotation, that is, the labeling of emotionally colored audiovisual content. To train a neural network to recognize emotions, a dataset is needed. But the labeling of this dataset depends entirely on us, people, and on which emotions we associate with, for example, a specific facial expression.

Several annotation tools are in common use today: ANNEMO (Ringeval et al.), used for multidimensional models, and ANVIL (Kipp) and ELAN (Max Planck Institute for Psycholinguistics), used for discrete systems. In ANNEMO, annotation is available in 2 affective dimensions, activation and valence, whose values range from -1 to +1. Thus, any emotional state can be assigned values that characterize its intensity and its positivity or negativity. Social behavior can also be rated on a 7-point scale in 5 dimensions: agreement, dominance, engagement, performance, and rapport.
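The value ranges described above can be written down as a small data structure. The Python sketch below is only an illustration of those ranges: the class and field names (`AffectSample`, `time_s`, `validate_social_ratings`) are hypothetical and do not reproduce ANNEMO's actual file format, and coding the 7-point scale as the integers 1 to 7 is an assumption.

```python
from dataclasses import dataclass

# Social dimensions rated on a 7-point scale (names as used in the text).
SOCIAL_DIMENSIONS = ("agreement", "dominance", "engagement", "performance", "rapport")


@dataclass(frozen=True)
class AffectSample:
    """One time-stamped rating in the two affective dimensions."""
    time_s: float
    arousal: float
    valence: float

    def __post_init__(self):
        # Both affective dimensions are continuous values in [-1, +1].
        for name in ("arousal", "valence"):
            value = getattr(self, name)
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [-1, 1], got {value}")


def validate_social_ratings(ratings):
    """Check a dict with one rating per social dimension.

    Assumption: the 7-point scale is coded as the integers 1..7.
    """
    if set(ratings) != set(SOCIAL_DIMENSIONS):
        raise ValueError("expected exactly one rating per social dimension")
    for name, score in ratings.items():
        if score not in range(1, 8):
            raise ValueError(f"{name} must be between 1 and 7, got {score}")
    return ratings


if __name__ == "__main__":
    sample = AffectSample(time_s=12.4, arousal=0.35, valence=-0.2)
    social = validate_social_ratings({name: 4 for name in SOCIAL_DIMENSIONS})
    print(sample, social)
```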

ANVIL and ELAN let you define your own labels for annotating emotional audiovisual content. These labels, or markers, can be words, sentences, comments, or any other text that describes the affective state. They are static and cannot be expressed as numeric values.

The choice of approach and annotation system depends on the objectives. Multidimensional models avoid a well-known problem of discrete labels: a word for a given emotion may exist in some languages and be missing in others, which makes annotation dependent on context and culture. Nevertheless, discrete models remain a useful tool for categorizing emotions, since it is difficult to evaluate changes in values such as valence or activation objectively, and different annotators will give different estimates of these values.
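The point about annotators disagreeing on continuous values can be illustrated with a short Python sketch. It assumes each annotator produced a valence trace of the same length for the same clip, then computes a per-frame consensus (the mean) and a per-frame spread (the population standard deviation). The function name `summarize_traces` and the sample numbers are hypothetical, and averaging is only one simple way to combine ratings.

```python
from statistics import mean, pstdev


def summarize_traces(traces):
    """Combine several annotators' ratings of the same clip.

    traces: list of equal-length lists, one list of ratings per annotator.
    Returns (consensus, spread): per-frame mean and per-frame standard deviation.
    """
    if not traces or len({len(trace) for trace in traces}) != 1:
        raise ValueError("all traces must be non-empty and have the same length")
    frames = list(zip(*traces))  # regroup ratings frame by frame
    consensus = [mean(frame) for frame in frames]
    spread = [pstdev(frame) for frame in frames]
    return consensus, spread


if __name__ == "__main__":
    # Made-up valence ratings from three annotators over four frames.
    annotators = [
        [0.10, 0.20, 0.40, 0.50],
        [0.00, 0.30, 0.40, 0.70],
        [0.20, 0.10, 0.40, 0.30],
    ]
    consensus, spread = summarize_traces(annotators)
    for frame, (c, s) in enumerate(zip(consensus, spread)):
        print(f"frame {frame}: consensus={c:.2f} spread={s:.2f}")
```

Frames with a large spread are the ones where annotators disagree most and where a single "ground truth" value is least reliable.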

Bonus: robotics

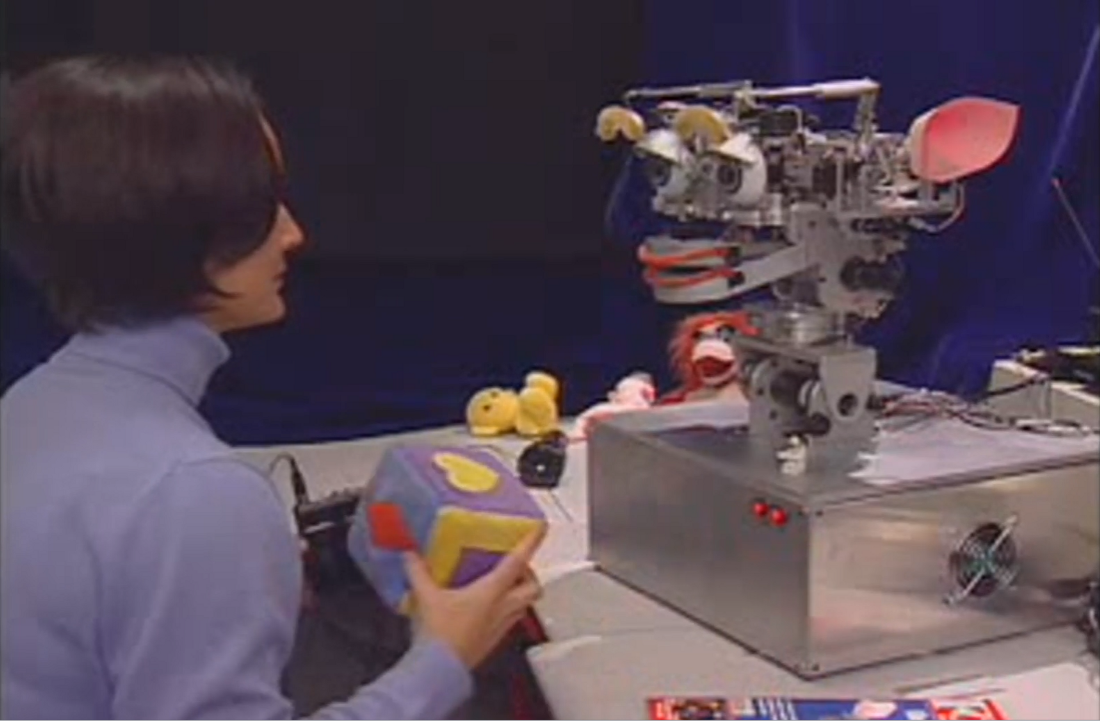

By the way, the classification of emotions is widely used not only for recognizing emotions but also for synthesizing them, for example in robotics. The emotional spectrum available to a robot can be embedded in a multidimensional space of emotions. Kismet, developed at MIT and probably the sweetest robot in the AI industry, is a good example: its affect system, the set of emotional states between which the robot can switch, is based on a multidimensional approach. Each dimension of the emotional space (activation, valence, and stance, that is, readiness for communication) is mapped to a set of facial expressions. As soon as the required value is reached, the robot switches to the next emotion.
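The switching behavior described above can be sketched in Python as a point moving through a three-dimensional (arousal, valence, stance) space. This is not Kismet's actual implementation: the prototype coordinates, the `EXPRESSIONS` table, and the nearest-prototype rule are all assumptions chosen to keep the example short.

```python
import math

# Hypothetical prototype points in (arousal, valence, stance) space,
# each coordinate in [-1, 1]. Not taken from Kismet's documentation.
EXPRESSIONS = {
    "calm": (0.0, 0.0, 0.0),
    "happy": (0.4, 0.8, 0.6),
    "angry": (0.8, -0.8, 0.5),
    "fearful": (0.8, -0.7, -0.8),
    "tired": (-0.9, 0.0, 0.0),
}


class AffectState:
    """Tracks a point in affect space and the expression it currently maps to."""

    def __init__(self):
        self.point = [0.0, 0.0, 0.0]
        self.expression = "calm"

    def update(self, d_arousal, d_valence, d_stance):
        """Shift the state by the given deltas and pick the nearest expression."""
        deltas = (d_arousal, d_valence, d_stance)
        # Clamp every coordinate to the [-1, 1] range of the space.
        self.point = [max(-1.0, min(1.0, p + d)) for p, d in zip(self.point, deltas)]
        self.expression = min(
            EXPRESSIONS, key=lambda name: math.dist(EXPRESSIONS[name], self.point)
        )
        return self.expression


if __name__ == "__main__":
    state = AffectState()
    for step in [(0.1, 0.1, 0.0), (0.3, 0.6, 0.5), (0.5, -1.6, -1.5)]:
        print(step, "->", state.update(*step))
```

A real system would likely add hysteresis or timing so that the face does not flicker between two neighboring expressions; that refinement is left out here.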

Video: How the Kismet robot works

References

- Barrett, LF & Wager, TD (2006). The structure of emotion: Evidence from neuroimaging studies. Current Directions in Psychological Science, 15 (2), 79–83. doi: 10.1111/j.0963-7214.2006.00411.x

- Cambria, E., Livingstone, A., Hussain, A. (2012) The Hourglass of Emotions. Cognitive Behavioural Systems, 144–157.

- Chew, A. (2009). Aristotle's Functional Theory of the Emotions. Organon F 16 (2009), No. 1, 5–37.

- Crivelli, C., & Fridlund, AJ (2018). Facial Displays Are Tools for Social Influence. Trends in Cognitive Sciences, 22 (5), 388–399. doi.org/10.1016/j.tics.2018.02.006

- Ekman, P. (1999). Basic Emotions. In T. Dalgleish and M. Power (Eds.). Handbook of Cognition and Emotion. Sussex, UK: John Wiley & Sons, Ltd.

- Fu Ching-Sheue (2012). What are emotions in Chinese Confucianism? www.researchgate.net/publication/267228910_What_are_emotions_in_Chinese_Confucianism

- Gendron, M., Crivelli, C., & Barrett, LF (in press). Universality reconsidered: Diversity in making meaning of facial expressions. Current Directions in Psychological Science.

- Harmon-Jones, E., Harmon-Jones, C., Summerell, E. (2017) On the Importance of Both Dimensional and Discrete Models of Emotion. Behav Sci (Basel). Sep 29; 7 (4)

- Murphy, FC, Nimmo-Smith, I., & Lawrence, AD (2003). Functional neuroanatomy of emotion: A meta-analysis. Cognitive, Affective, & Behavioral Neuroscience, 3, 207–233.

- Phan, KL, Wager, TD, Taylor, SF, & Liberzon, I. (2002). Functional neuroanatomy of emotion: A meta-analysis of emotion activation studies in PET and fMRI. Neuroimage, 16, 331–348.

- Plutchik, R. (2001) The Nature of Emotions. American Scientist 89 (4): 344

- Ringeval, F., Sonderegger, A., Sauer, J., & Lalanne, D. RECOLA & ANNEMO: diuf.unifr.ch/diva/recola/annemo.html

- Kipp, M. ANVIL: www.anvil-software.org

- Max Planck Institute for Psycholinguistics. ELAN: tla.mpi.nl/tools/tla-tools/elan

- Emotion, Stanford Encyclopedia of Philosophy: plato.stanford.edu/entries/emotion