In QEMU, there are several ways to connect a block device to a virtual machine. Initially this was implemented in the following way:

-hda /dev/sda1

This is how virtual disks were connected in the early days of virtualization. It can still be used today if you just want to test a live CD. Unfortunately, it has drawbacks:

- Virtual disks can be connected only through the interface that appears inside the virtual machine as /dev/hda (hda, hdb, hdc, ..); for a CD there is -cdrom

- When a file (or device) is connected to a virtual machine this way, QEMU uses only default parameters.

drive

To set other parameters (bus type, cache usage, etc.), the -drive option was added. Although it was initially used to set parameters for both the backend and the frontend, it is currently used only for the backend, that is, for parameters that affect how the virtualization environment on the host handles the virtual device.

-drive file=/dev/sda1,if=ide,cache=writeback,aio=threads

device

Over time, setting all the parameters of a block device with a single option proved impractical. The options were therefore divided into two groups: backend parameters, which set up the virtualization environment, and frontend parameters, which affect how the device is connected inside the virtual machine. For the frontend parameters a new option, -device, was introduced; it refers to the disk by the id specified in the -drive option.

The following configuration example connects the disk with the identifier ide0-hd0, and the result is the same as connecting a virtual disk as shown in the introduction.

-drive file=/dev/sda1,id=ide0-hd0,if=none,cache=writeback,aio=threads \
-device ide-drive,bus=ide.0,drive=ide0-hd0
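The backend/frontend split can be sketched as a small shell helper that emits both options for one disk, tied together by a shared id. This is only an illustration: `disk_opts` is a hypothetical helper name, not part of QEMU, and the option values are simply those from the example above.

```shell
#!/bin/sh
# Sketch: print the backend (-drive) and frontend (-device) options for one disk.
# disk_opts is a hypothetical helper, not a QEMU command.
disk_opts() {
    file=$1   # host-side file or block device, e.g. /dev/sda1
    id=$2     # identifier that links the backend to the frontend
    # Backend: how QEMU accesses the storage on the host.
    printf '%s\n' "-drive file=${file},id=${id},if=none,cache=writeback,aio=threads"
    # Frontend: which device the guest sees, and on which bus.
    printf '%s\n' "-device ide-drive,bus=ide.0,drive=${id}"
}

disk_opts /dev/sda1 ide0-hd0
```

The helper only prints the options; the id passed as the second argument is what the -device option uses to find its -drive backend.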

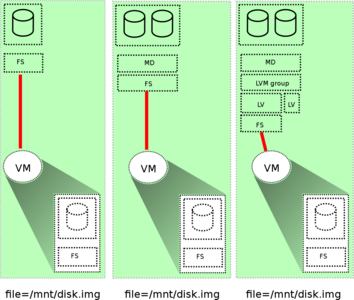

Local block devices in a virtual machine

Physical block devices are rarely used for virtual machines today because virtual disks are so widespread. Technically, it is possible to run a virtual machine with some proprietary system directly from an old hard disk. In that case, however, it is better to first create a disk image using dd and start the system from the image.

The following can be connected to a virtual machine as a local block device:

- Local RAID - md type device

- Master DRBD (Network RAID1) - device type drbd

- Disk partition created in the LVM group - dm type device

- Regular file connected through a loop - loop device

- Block device from another computer exported using NBD server - nbd type device

(Comment: a Ceph device of type rbd and a ZFS device of type zvol are also worth mentioning.)

NBD

Consider connecting a block device from a remote computer over the network. See the separate guide for NBD.

QEMU has an integrated NBD API, so that a remote block device shared via the NBD server can be directly connected to the virtual machine via QEMU - see the figure on the left.


However, NBD is a fairly simple protocol that does not authenticate against the remote server and does not monitor the state of the connection. The NBD protocol assumes that the client reconnects if the connection to the server is interrupted, but unfortunately QEMU does not do this.

The situation can be partly solved by connecting several NBD devices and building a RAID array from them inside the virtual machine. This approach has several advantages and one fatal flaw. If any one of the devices drops out, nothing terrible happens. But if they all drop out at the same time, the result is bad. I/O operations in the virtual environment will be much faster, since requests are executed in parallel on several physical machines (NBD servers) at once. On the other hand, this requires more virtual CPU resources and virtual memory.

The main drawback of building a RAID array from NBD devices in a virtual machine lies in how QEMU works: if an NBD device fails, it is reconnected only after a full restart of the virtual machine, while a reboot of the operating system inside the virtual machine is not enough. However, you can create a virtual machine without disks that accesses the NBD server on its own and connects the necessary NBD devices itself. In addition, failed devices must be re-added to the array and resynchronized; this can be done manually or with scripts inside the virtual machine.
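As a rough sketch of the array handling described above, the following helpers print the mdadm commands for creating a RAID1 array from two NBD devices and for re-adding a device after a failure. It assumes the NBD devices are already attached inside the guest as /dev/nbd0 and /dev/nbd1 and that the array is /dev/md0; these names, and the helper functions themselves, are illustrative only.

```shell
#!/bin/sh
# Sketch: print mdadm commands for a RAID1 array built from NBD devices.
# Assumes the NBD devices are already connected inside the guest.
raid_create_cmd() {
    # $1 = md device, $2 and $3 = member NBD devices
    printf 'mdadm --create %s --level=1 --raid-devices=2 %s %s\n' "$1" "$2" "$3"
}

raid_readd_cmd() {
    # $1 = md device, $2 = member to return to the array after reconnecting
    printf 'mdadm %s --re-add %s\n' "$1" "$2"
}

raid_create_cmd /dev/md0 /dev/nbd0 /dev/nbd1
raid_readd_cmd /dev/md0 /dev/nbd0
```

The commands are printed rather than executed so they can be reviewed first, which matters here because a typo in a device name can destroy data.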

It is better to connect to the NBD server from inside the virtual machine, using the virtual machine's own NBD device. Building a RAID array from NBD devices works especially well in terms of I/O performance.


This solution was by far the best-performing of all those tested. The virtual machine was also able to keep working without interruption even when an NBD server was turned off (or failed) while it was running.

Thus, it was possible to organize a relatively stable and at the same time productive virtualization environment on unstable hardware.

The main problem with RAID arrays over NBD devices is that you need to be very careful when connecting to a device on an NBD server that is part of a RAID array. This is a rather delicate process with a high probability of a fatal error that can lead to data loss. A small typo is enough. See the description of the fatal crash of July 21, 2012, on the Peanuts page.

The disadvantage is that a block device already in use by one virtual machine cannot be connected elsewhere unless its file system allows it. This is similar to iSCSI (Internet Small Computer System Interface, a network version of SCSI) or AoE (ATA over Ethernet).

Virtual disks

QEMU can connect not only block devices to a virtual machine; through various APIs it can also connect a regular file so that it looks like a block device inside the machine.

QEMU can work with virtual disks in various formats. For virtual disks stored as regular files there is a standard utility, qemu-img, which can be used both to convert a disk and to identify its format and parameters.
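As an illustration of the conversion use case, the helper below prints a `qemu-img convert` command line. The `-f` and `-O` flags select the source and output formats; the file names are hypothetical, and the helper only prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: print a qemu-img command that converts a virtual disk between formats.
# convert_cmd is a hypothetical helper; file names are examples only.
convert_cmd() {
    src_fmt=$1; dst_fmt=$2; src=$3; dst=$4
    printf 'qemu-img convert -f %s -O %s %s %s\n' "$src_fmt" "$dst_fmt" "$src" "$dst"
}

convert_cmd raw qcow2 disk.raw disk.qcow2
```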

Retrieving information about a virtual disk saved as a regular file. In the same way you can get information about the virtual disk stored on GlusterFS:

root@stroj~

However, to obtain information about the virtual disk using the GlusterFS API, you need to use the same parameters that are used to connect the virtual disk to the virtual machine. So you can identify the virtual disk on the machine where the GlusterFS client is installed and used:

root@stroj~# qemu-img info gluster+tcp:

The VDI virtual disk can only be identified through the Sheepdog API:

root@stroj~

For a virtual disk exported from an NBD server, you must make sure that the correct server and the correct port are specified, because NBD has no other identification or authentication mechanism that would prevent confusion with other virtual disks. (Comment: newer versions of nbd-server use only one port and identify the device by name.)

root@stroj~

192.168.0.2

The IP address of the node from which the virtual device is connected. If this is the same host on which qemu-img info runs, you can use localhost instead of the IP address.

8000

The port number on which the daemon or server is listening. By default, Sheepdog uses port 7000, but it can also be run on a different port to avoid conflicts with another application. The NBD server can listen on different ports if more than one device is exported.
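To show how the address and port fit together for NBD, the sketch below assembles a target string in the two notations QEMU accepts for NBD: the older `nbd:host:port` form and the `nbd://host:port/name` URI form for named exports. The helper name and the export name `disk0` are assumptions made for this example.

```shell
#!/bin/sh
# Sketch: build an NBD target string for qemu-img or QEMU.
# nbd_target is a hypothetical helper; "disk0" below is an example export name.
nbd_target() {
    host=$1
    port=$2
    export_name=${3:-}
    if [ -n "$export_name" ]; then
        # URI form, used when the server identifies devices by name
        printf 'nbd://%s:%s/%s\n' "$host" "$port" "$export_name"
    else
        # Older form, one exported device per port
        printf 'nbd:%s:%s\n' "$host" "$port"
    fi
}

nbd_target 192.168.0.2 8000
nbd_target 192.168.0.2 8000 disk0
```

The resulting string would be passed as the file name, for example `qemu-img info "$(nbd_target 192.168.0.2 8000)"`.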

raw

In general, it is simply a set of data recorded in the same layout as on a regular block device. A 5G file occupies all of that space, regardless of whether it contains useful data or just empty space. (Comment: this does not apply to sparse files.)
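The comment about sparse files can be checked with a short experiment. The sketch below assumes a Linux host with GNU coreutils (`truncate`, `stat -c`) and a file system that supports sparse files; it creates a 5 MiB file of holes and compares its apparent size with the space actually allocated.

```shell
#!/bin/sh
# Sketch: compare apparent size and allocated size of a sparse raw file.
# Assumes GNU coreutils on Linux.
img=$(mktemp)
truncate -s 5M "$img"                      # extend the file without writing data

apparent=$(stat -c %s "$img")              # size in bytes as seen by applications
blocks=$(stat -c %b "$img")                # number of allocated blocks
block_size=$(stat -c %B "$img")            # size in bytes of each counted block
allocated=$((blocks * block_size))

echo "apparent size: $apparent bytes"
echo "allocated:     $allocated bytes"
rm -f "$img"
```

On a file system with sparse-file support the allocated size stays far below the apparent size until data is actually written.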

qcow

It differs from the raw format in that it is incremental: the file grows gradually. This is convenient on file systems that do not support sparse files, such as FAT32. The format also allows you to create separate incremental copies based on a single base disk. Using such a "template" saves time and disk space. In addition, it supports AES encryption and zlib compression. The disadvantage is that, unlike a raw disk, such a file cannot be mounted directly on the machine where it is stored. Fortunately, there is the qemu-nbd utility, which can export the file as a network block device and attach it to an NBD device.
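The qemu-nbd route mentioned above could look roughly like the following. The helpers print the steps instead of executing them; the image name, the device /dev/nbd0, the mount point, and the assumption that the file system sits on the first partition are all illustrative.

```shell
#!/bin/sh
# Sketch: print the steps for mounting a qcow image on the host through qemu-nbd.
# All names passed in are examples; run the printed commands as root.
qcow_mount_steps() {
    image=$1; nbd_dev=$2; mountpoint=$3
    printf 'modprobe nbd max_part=8\n'                       # load the NBD kernel module with partition support
    printf 'qemu-nbd --connect=%s %s\n' "$nbd_dev" "$image"  # attach the image to the NBD device
    printf 'mount %sp1 %s\n' "$nbd_dev" "$mountpoint"        # mount the first partition (assumed)
}

qcow_unmount_steps() {
    nbd_dev=$1; mountpoint=$2
    printf 'umount %s\n' "$mountpoint"
    printf 'qemu-nbd --disconnect %s\n' "$nbd_dev"           # detach the image again
}

qcow_mount_steps disk.qcow /dev/nbd0 /mnt
qcow_unmount_steps /dev/nbd0 /mnt
```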

qcow2

An updated version of the qcow format. The main difference is that it supports snapshots; otherwise the two formats are not fundamentally different. You may also come across a qcow2 image that identifies itself internally as QCOW version 3, which was folded into the qcow2 format long ago. In fact, this is a modified qcow2 with the lazy_refcounts parameter, which concerns the reference counts that snapshots rely on. Since the difference is only one bit, qemu-img has had an "amend" option for changing it since version 1.7. Earlier versions of qemu-img lack this feature: to change the format version, the virtual disk had to be converted to a new file, with the compat parameter set to the target version during the conversion, for example "0.10" instead of "1.1" when downgrading. The "amend" option is convenient because such a minor change no longer requires rewriting the data.

qemu-img create -f qcow2 -o compat=1.1 test.qcow2 8G

http://blog.wikichoon.com/2014/12/virt-manager-10-creates-qcow2-images.html
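For comparison with the create command above, changing the compatibility level of an existing image with the "amend" option might look like this. The helper just prints the command; `test.qcow2` is the file name from the example above, and downgrading to "0.10" is only one possible direction.

```shell
#!/bin/sh
# Sketch: print a qemu-img amend command that changes the qcow2 compat level in place.
# qcow2_set_compat_cmd is a hypothetical helper.
qcow2_set_compat_cmd() {
    compat=$1; image=$2
    printf 'qemu-img amend -f qcow2 -o compat=%s %s\n' "$compat" "$image"
}

qcow2_set_compat_cmd 0.10 test.qcow2
```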

qed

An incremental COW virtual disk format that puts the least load on the host. It does not support compression and uses two levels of tables to address data blocks (clusters). Unfortunately, no developer has worked on it for a long time, so using it involves some problems, which are mentioned later.

vdi

The virtual disk format used by the Oracle VirtualBox virtualization system.

vmdk

The virtual disk format used by VMware products. This format also allows the file to grow gradually. In addition, it has a great advantage over the qcow2 and qed formats that is also useful with diskless solutions and network file systems: the virtual disk can be split into several smaller files of 2 GB each. This is a leftover from the days when file systems could not create files larger than 2 GB. The advantage is that when such a virtual disk is replicated over the network, smaller amounts of data are transferred and synchronization is much faster (for example, with GlusterFS). In a diskless solution, it also means that only small files containing the differences need to be stored for each snapshot.
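A split vmdk disk of this kind can be requested from qemu-img through the `subformat` option. The sketch below prints such a command using the `twoGbMaxExtentSparse` subformat; the file name and size are examples, and the helper is hypothetical.

```shell
#!/bin/sh
# Sketch: print a qemu-img command that creates a vmdk disk split into 2 GB extents.
# vmdk_split_create_cmd is a hypothetical helper.
vmdk_split_create_cmd() {
    image=$1; size=$2
    printf 'qemu-img create -f vmdk -o subformat=twoGbMaxExtentSparse %s %s\n' "$image" "$size"
}

vmdk_split_create_cmd disk.vmdk 8G
```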

vhdx

The virtual disk format used by the Microsoft Hyper-V virtualization system.

vpc

The virtual disk format used by the Microsoft Virtual PC virtualization system.

| Format | Incremental | Encryption | Compression | Preallocation | Templating | Prop butts | Split image | Internal snapshots | Consistency check | Note |
|---|---|---|---|---|---|---|---|---|---|---|
| raw | No | No | No | Yes | No | No | No | No | No | Can be mounted through loop |
| file | Yes | No | No | optional | No | No | No | No | No | For Btrfs, you should disable copy-on-write |
| qcow | Yes | Yes | Yes | No | Yes | Yes | No | No | No | |
| qcow2 | Yes | Yes | Yes | optional | Yes | Yes | No | Yes | Yes | |
| qed | Yes | No | No | No | Yes | No | No | No | No | |
| vmdk | Yes | No | No | optional | Yes | Yes | Yes | No | No | |
| vdi | Yes | No | No | optional (static) | No | No | No | No | Yes | |
| vhdx | Yes | No | No | optional (fixed) | No | Yes [1] | No | No | No | |
| vpc | Yes | No | No | optional (fixed) | No | No | No | No | No | |

- [1] Only on preallocated (fixed) images

APIs used

In addition to file formats, qemu-img also works with “formats” that are provided via an API through which these block devices can be accessed remotely.

nfs

virtual disk file connected to QEMU via NFS protocol

iscsi

communication with the block device takes place over the iSCSI protocol. One device cannot be used by more than one client at the same time.

nbd

Access via the NBD protocol is very fast because the protocol is very simple. That is an advantage and a disadvantage at the same time. It can be convenient that multiple clients can connect to the same NBD server if they use a local connection through the xnbd server. However, since NBD has no security or authentication mechanisms, a client can easily connect by accident to the wrong device, one that may already be in use at that moment, and damage it.

ssh

the connection to the remote server is made over SSH (SFTP)

gluster

uses the GlusterFS file system API to access the virtual disk file. If the file is part of a replicated or distributed volume, the stored data is distributed among the other nodes. This keeps the file available on other nodes in the event of a failure.

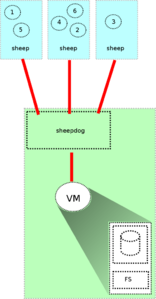

sheepdog

Also a distributed file system with replication support. Unlike with GlusterFS, the API exposes a virtual disk not as a file but as a block device. This is an advantage in terms of performance, but a disadvantage if you need to use the disk outside the Sheepdog environment.

parallels

Virtual disks on network storage

The advantage of block devices located outside the virtualization host is that they are unaffected by failures of the virtual machine.

Remote storage can also provide high availability and sufficient capacity.

Using NFS

Sheepdog

GlusterFS

Virtual machines without block devices

Operating systems that can boot over NFS, or from a host file system passed through using Plan 9 (9p), can work without block devices.
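For the Plan 9 variant, a rough sketch of the QEMU options and the guest kernel command line is shown below. It assumes a Linux guest started with -kernel (and an initrd or kernel with 9p and virtio support), a root tree on the host at /srv/rootfs, and the mount tag rootfs; the path, the tag, and the helper names are assumptions for this example.

```shell
#!/bin/sh
# Sketch: options for booting a Linux guest whose root file system is a host directory
# shared over 9p. Helper names, path and mount tag are examples only.
ninep_root_opts() {
    rootdir=$1; tag=$2
    # Backend: export a host directory
    printf '%s\n' "-fsdev local,id=fs0,path=${rootdir},security_model=passthrough"
    # Frontend: virtio 9p device the guest mounts by tag
    printf '%s\n' "-device virtio-9p-pci,fsdev=fs0,mount_tag=${tag}"
}

ninep_kernel_cmdline() {
    tag=$1
    # Passed to the guest kernel with -append
    printf 'root=%s rootfstype=9p rootflags=trans=virtio rw\n' "$tag"
}

ninep_root_opts /srv/rootfs rootfs
ninep_kernel_cmdline rootfs
```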