If you use the Internet, you have probably interacted with neural networks. They are a kind of machine learning algorithm used in many areas, from language translation to financial modeling. One of their specialties is image recognition. Several companies, including Google, Microsoft, IBM and Facebook, have developed their own image-captioning algorithms. But these algorithms can make very strange errors.

The Microsoft Azure Computer Vision API gave this image the following caption: “a flock of sheep grazing on a hillside covered with lush vegetation”, along with the tags “graze, sheep, mountain, cattle, horse”. But there are no sheep in the photo. None at all. I examined every spot.

tags: grass, field, sheep, stand, rainbow, man

In this photo, the computer also saw sheep. As it happens, I know that several sheep really were grazing near this spot. But they are not visible in the photo.

tags: hillside, graze, sheep, giraffe, herd

Here is another example. The neural network seemed to see sheep every time it was shown this kind of landscape. What's happening?

Neural networks are trained by processing many examples. In this case, the network was fed a large number of images that people had labeled by hand, and many of them contained sheep. Starting with no knowledge at all of what it is looking at, the neural network has to come up with its own rules for deciding which images get the label "sheep". Apparently, it never grasped that the word "sheep" refers to an animal, and not simply to a grassy landscape without trees. Similarly, it gave the second photo the label "rainbow" because the landscape is wet and rainy, without realizing that a rainbow requires a multicolored band in the sky.
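To make the "grass means sheep" shortcut concrete, here is a toy sketch. It is not how Azure's model works; it is a single logistic neuron trained by gradient descent on two hypothetical hand-made features, `green` (the photo is mostly pasture) and `animal` (a woolly animal is clearly visible). The training labels are invented to mimic human captions: pasture photos get "sheep" even when the flock is too far away to see, and woolly animals photographed indoors are never sheep.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical training set: ((green, animal), label), where label 1 means "sheep".
TRAIN = (
    [((1, 1), 1)] * 6    # sheep clearly visible on grass
    + [((1, 0), 1)] * 4  # grass only: the sheep were too small to see
    + [((0, 0), 0)] * 6  # indoor or urban scenes with no animals
    + [((0, 1), 0)] * 4  # woolly animals indoors (dogs, cats)
)


def train(data, lr=0.5, epochs=2000):
    """Full-batch gradient descent on the mean logistic (cross-entropy) loss."""
    w_green, w_animal, bias = 0.0, 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        g_green = g_animal = g_bias = 0.0
        for (green, animal), label in data:
            # Prediction error for this example; the loss gradient is err * feature.
            err = sigmoid(w_green * green + w_animal * animal + bias) - label
            g_green += err * green
            g_animal += err * animal
            g_bias += err
        w_green -= lr * g_green / n
        w_animal -= lr * g_animal / n
        bias -= lr * g_bias / n
    return w_green, w_animal, bias


def p_sheep(weights, green, animal):
    """Probability the neuron assigns to the label "sheep"."""
    w_green, w_animal, bias = weights
    return sigmoid(w_green * green + w_animal * animal + bias)


weights = train(TRAIN)
print("weights (green, animal, bias):", weights)
print("empty green field:", round(p_sheep(weights, 1, 0), 3))
print("sheep in a kitchen:", round(p_sheep(weights, 0, 1), 3))
```

Because "green" alone separates the made-up labels perfectly, the neuron learns to lean on the grass rather than on the animal: an empty field scores as "sheep", while a sheep indoors does not.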

Maybe neural networks are just oversensitive and see sheep everywhere? It turns out they don't. They see sheep only where they expect to see them. They easily find sheep in fields and on mountainsides, but as soon as sheep start turning up in unexpected places, it becomes obvious how heavily these algorithms rely on guesswork and probabilities.

Bring a sheep indoors, and it will be labeled a cat. Pick up a sheep or a goat in your arms, and it will be labeled a dog.
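One way to picture "seeing sheep only where they are expected" is Bayes' rule, where the scene acts as a prior. The sketch below is an illustrative assumption, not how any of these captioning systems is actually built: the tables `SCENE_PRIOR` and `FUR_LIKELIHOOD` are made-up numbers, and `classify` multiplies "how often this label appears in this kind of scene" by "how well woolly texture fits this label".

```python
# Hypothetical P(label | scene), as if counted from captioned training photos.
SCENE_PRIOR = {
    "pasture": {"sheep": 0.70, "dog": 0.10, "cat": 0.05, "horse": 0.15},
    "kitchen": {"sheep": 0.01, "dog": 0.30, "cat": 0.68, "horse": 0.01},
}

# Hypothetical P(woolly texture | label).
FUR_LIKELIHOOD = {"sheep": 0.9, "dog": 0.6, "cat": 0.6, "horse": 0.2}


def classify(scene):
    """Return the most probable label for a woolly animal seen in `scene`,
    plus the normalized posterior over all labels."""
    scores = {
        label: prior * FUR_LIKELIHOOD[label]
        for label, prior in SCENE_PRIOR[scene].items()
    }
    total = sum(scores.values())
    posterior = {label: s / total for label, s in scores.items()}
    return max(posterior, key=posterior.get), posterior


for scene in ("pasture", "kitchen"):
    label, posterior = classify(scene)
    print(scene, "->", label)
```

The same woolly texture comes out as "sheep" on a pasture and "cat" in a kitchen, because the invented prior for sheep indoors is so small that even a good texture match cannot overcome it.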

“Left: a man holds a dog in his hand. Right: a woman holds a dog in her hand.”

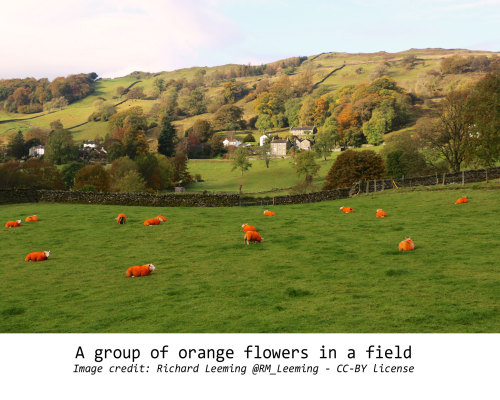

Paint them orange, and they become flowers.

"Several orange flowers in the field"

Put a sheep on a leash, and it will be labeled a dog. Put it in a car, and it becomes a dog or a cat. If sheep wade into water, they may be labeled as birds or even polar bears.

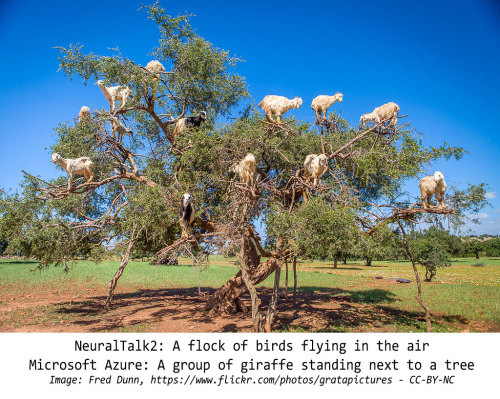

And if goats climb a tree, they turn into birds. Or giraffes (it turns out that Microsoft Azure is notorious for seeing giraffes everywhere, reportedly because of an overabundance of giraffes in its original training data).

NeuralTalk2: a flock of birds flying in the air

Microsoft Azure: several giraffes are standing next to a tree

Neural networks are pattern matchers. They see patches of fur-like texture and expanses of green, and conclude that there are sheep in the picture. If they see fur together with kitchen-like shapes, they may conclude that they are looking at a cat.

When life follows the rules, image recognition works as it should. But as soon as people, or sheep, do something unexpected, the algorithms reveal their weaknesses.

If you want to sneak something past a neural network unnoticed, a touch of surrealism, in an almost cyberpunk style, will help. Perhaps in the future, undercover agents will dress up as chickens or drive cars painted like cows.

There are many examples of very funny errors in the Twitter thread that started with a simple question:

You can also try the Microsoft Azure image recognition API yourself and see that even the most advanced algorithms rely on luck and probability. The other algorithm, NeuralTalk2, is the one I mostly used to caption the images from this Twitter thread.